The (not so) subtle reason you hate chatbots

"Peter, we're thinking about adding a chatbot to our customer support stack. What do you think, from a UX perspective?"

"Well, do you enjoy using chatbots?" I replied.

They laughed, and with that, my client dismissed the idea.

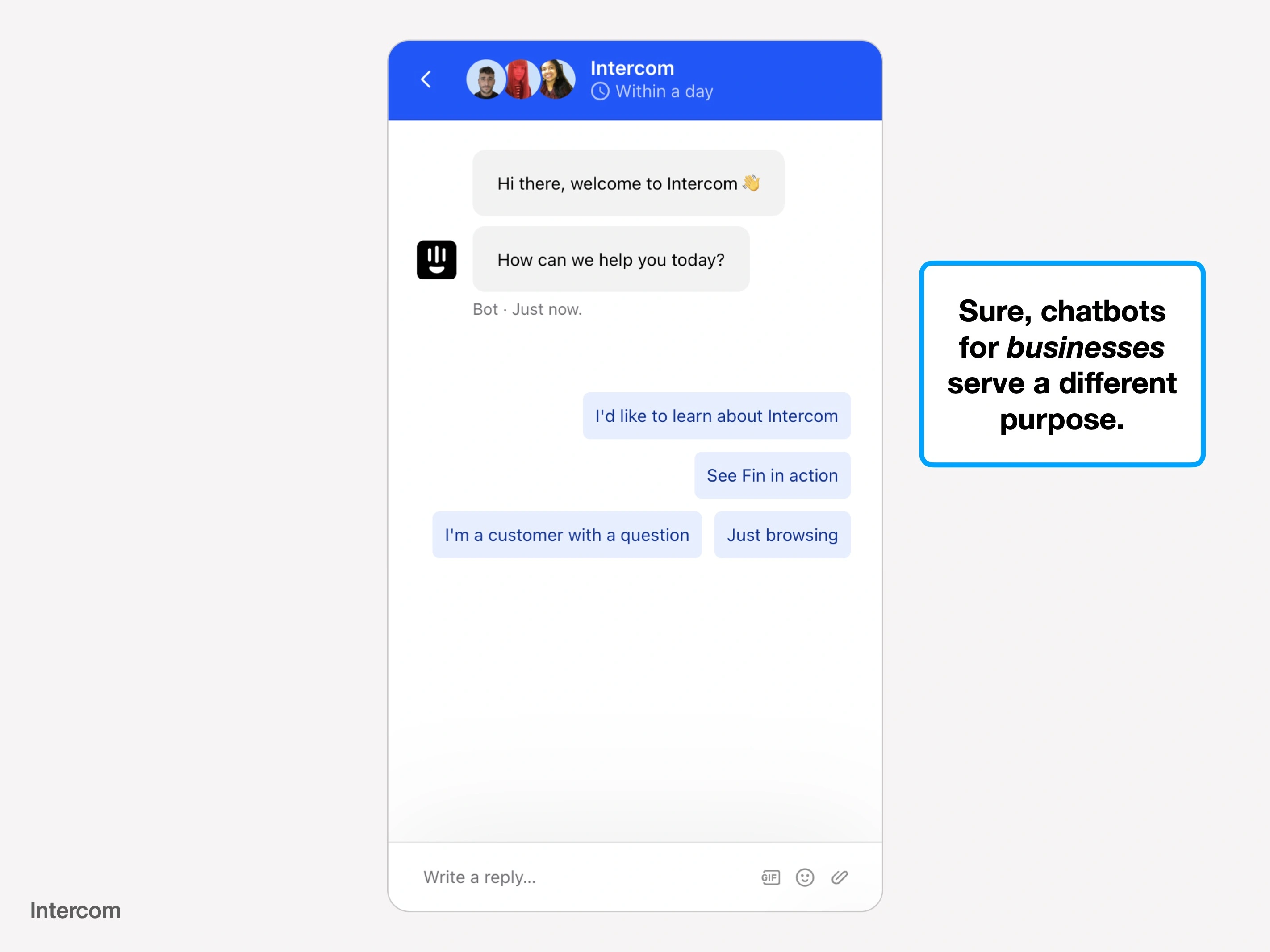

There's a strong argument that chatbots are mostly a one-sided revolution: increasing efficiency for businesses, whilst generally irritating consumers.

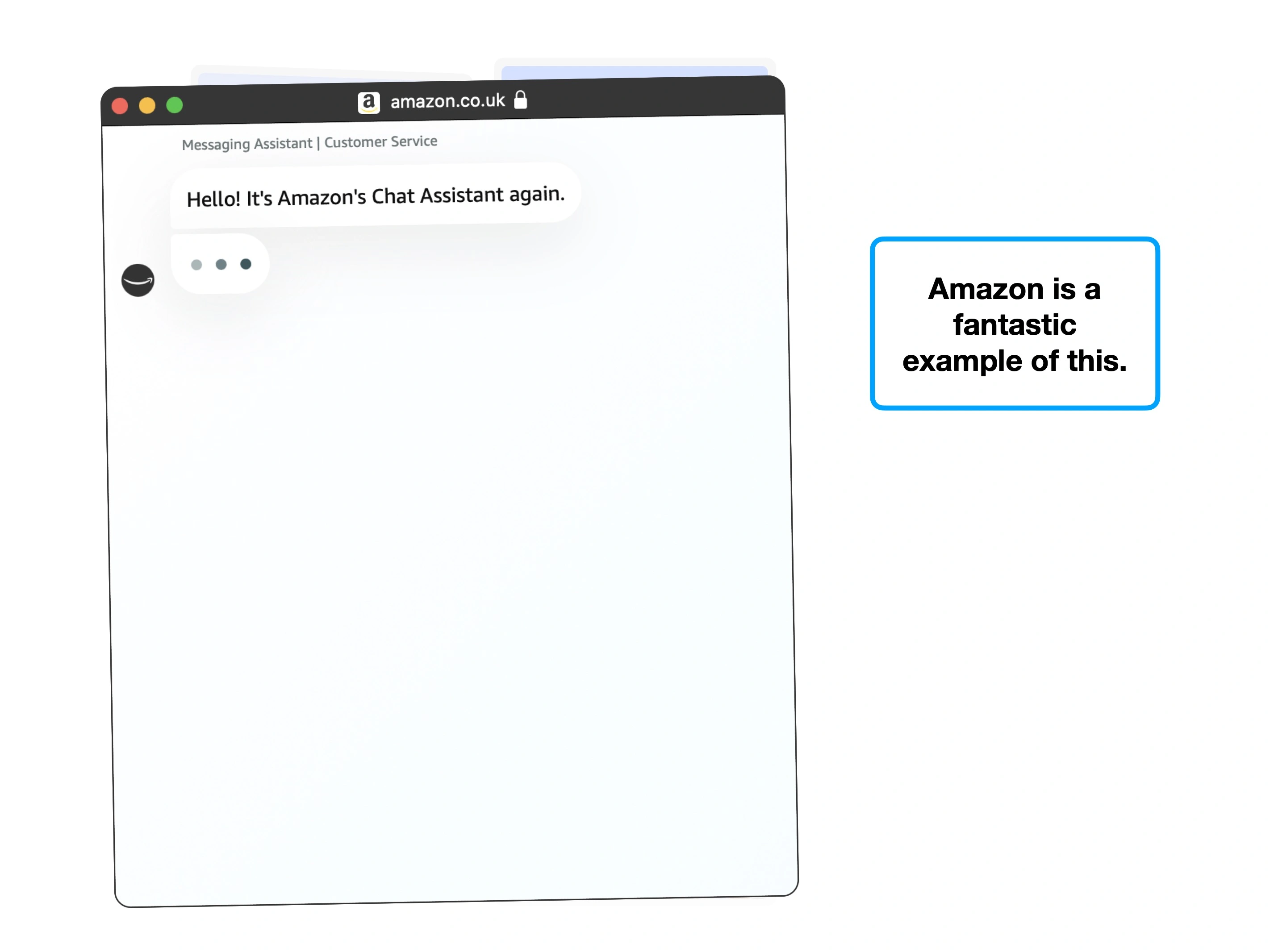

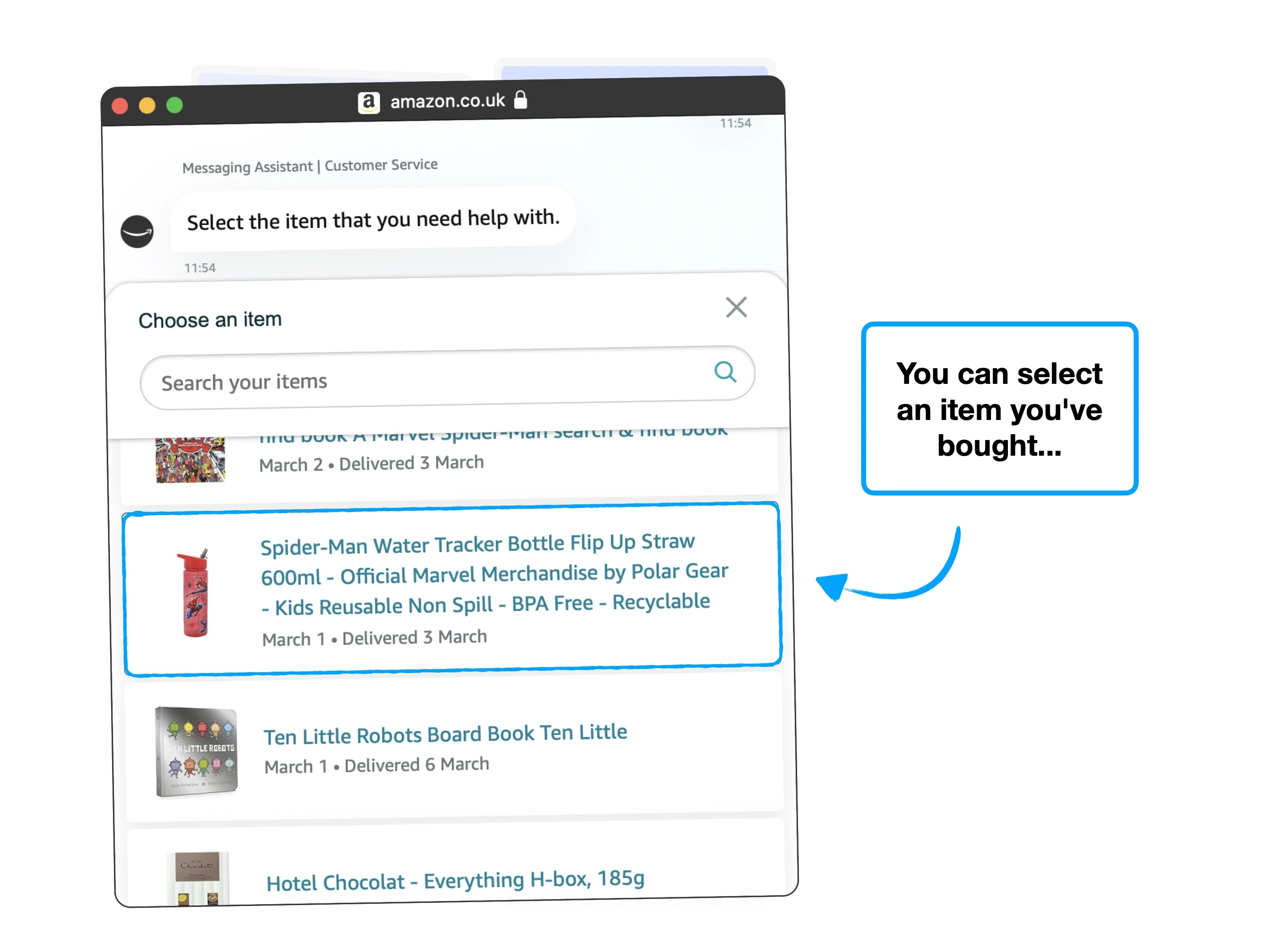

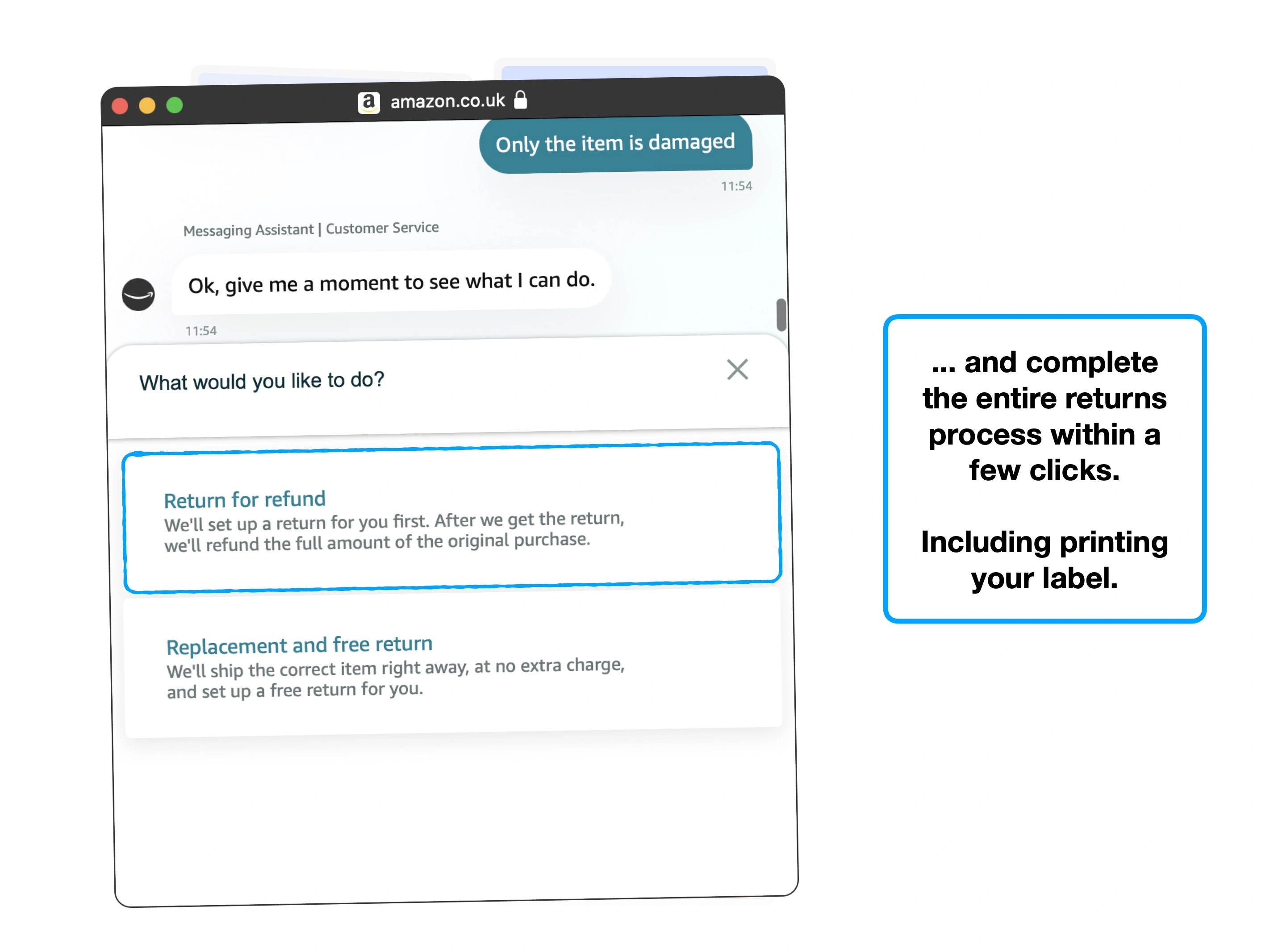

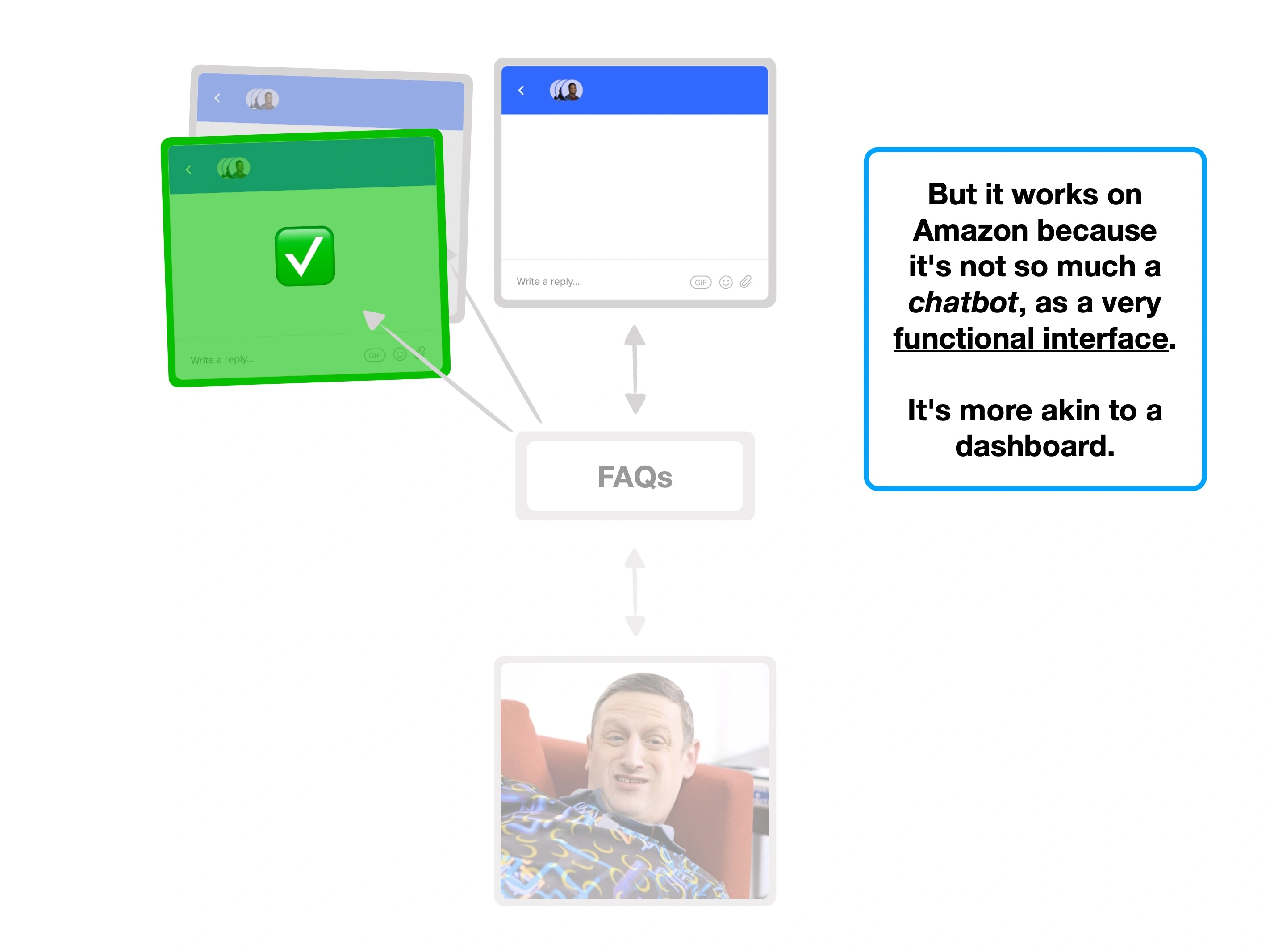

To be clear, they can be fantastic—Amazon's is exceptional.

But broadly, virtual agents, decision trees and ChatGPT-powered conversations aren't exactly the future of customer service that we were promised.

So this month I've been putting a selection of chatbots through their paces; here are some of the subtle reasons you find them annoying.

And ultimately, some tips on how to choose and implement chatbots (think: AI) into your customer experience journey.

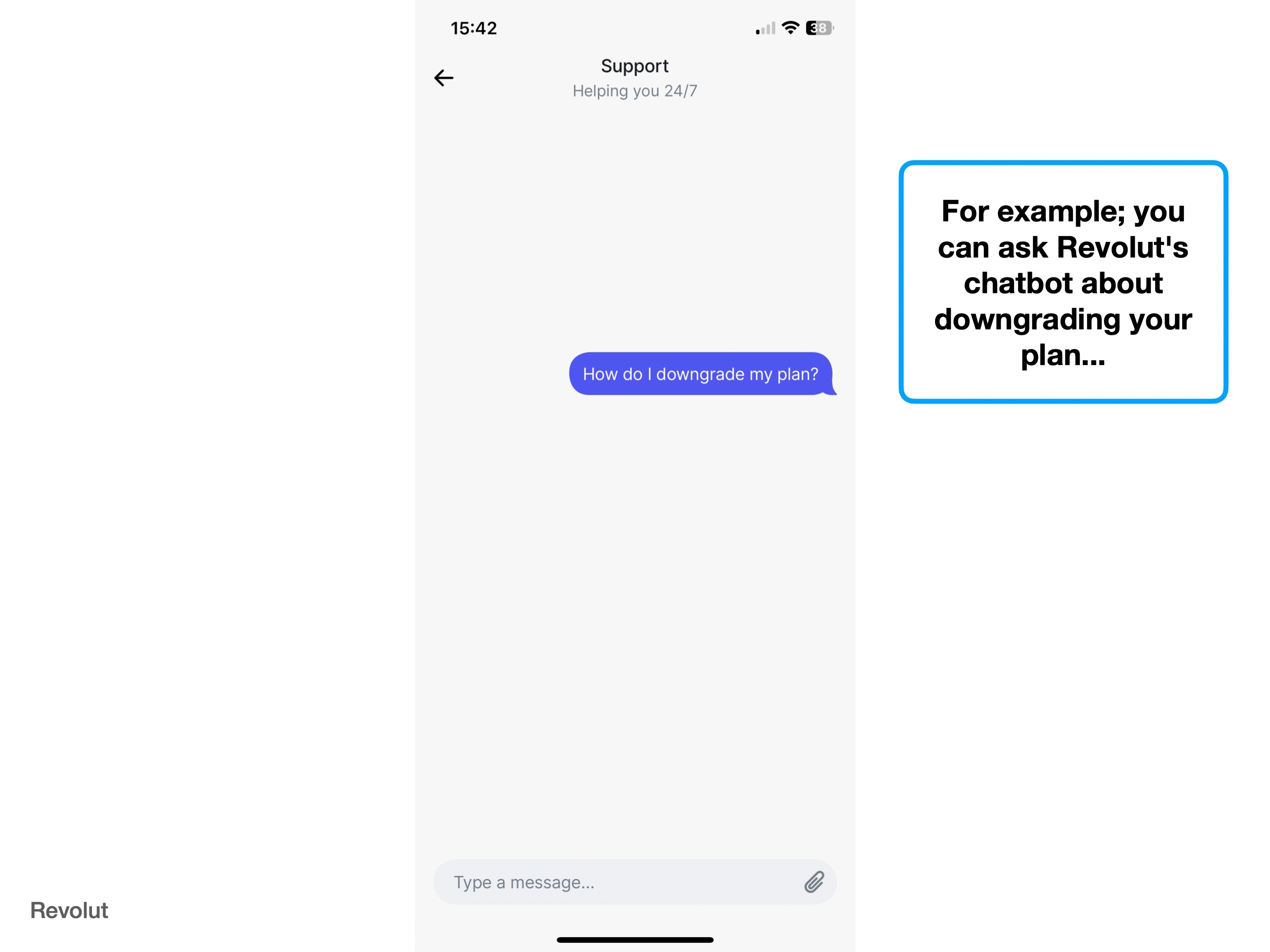

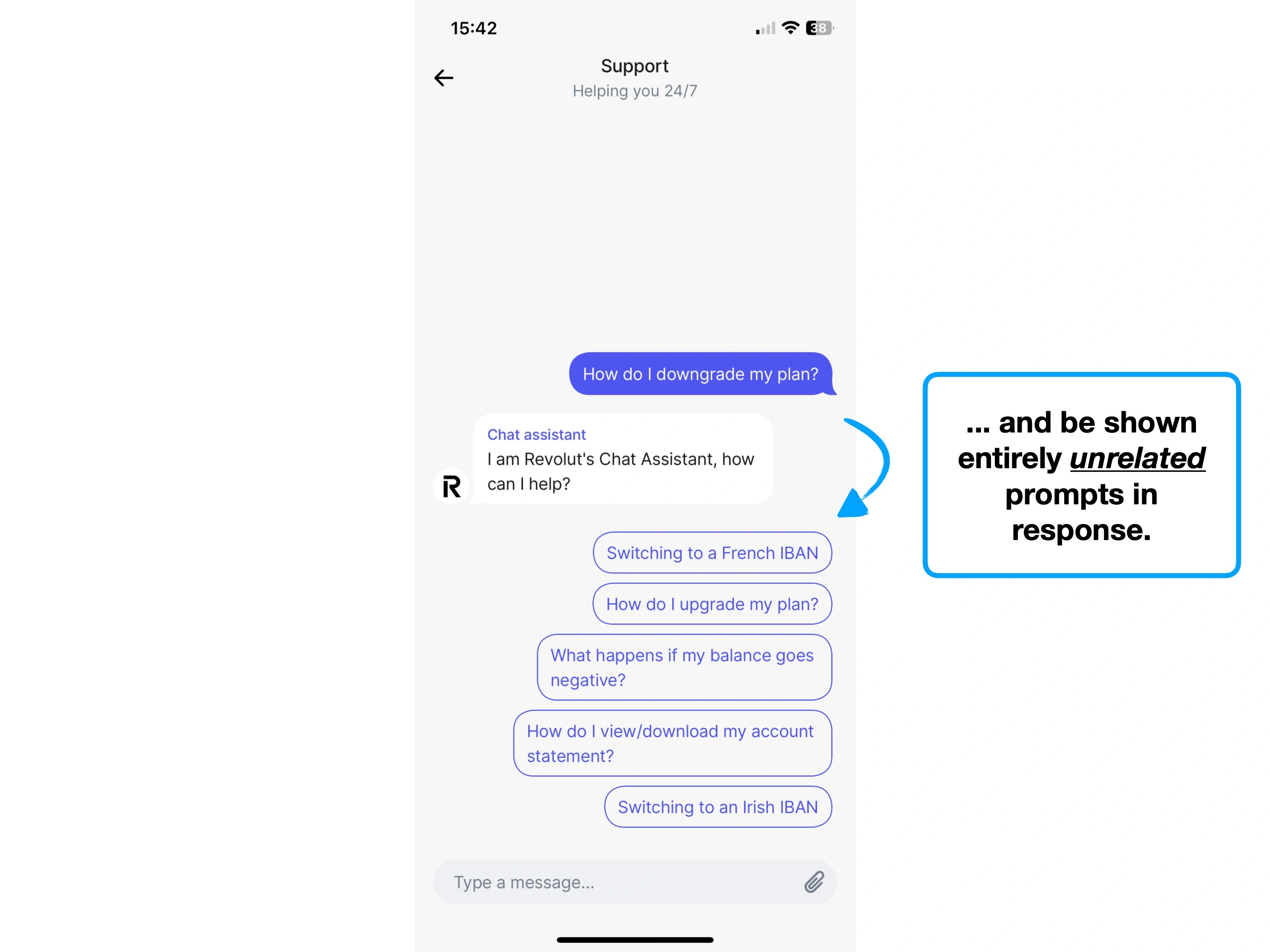

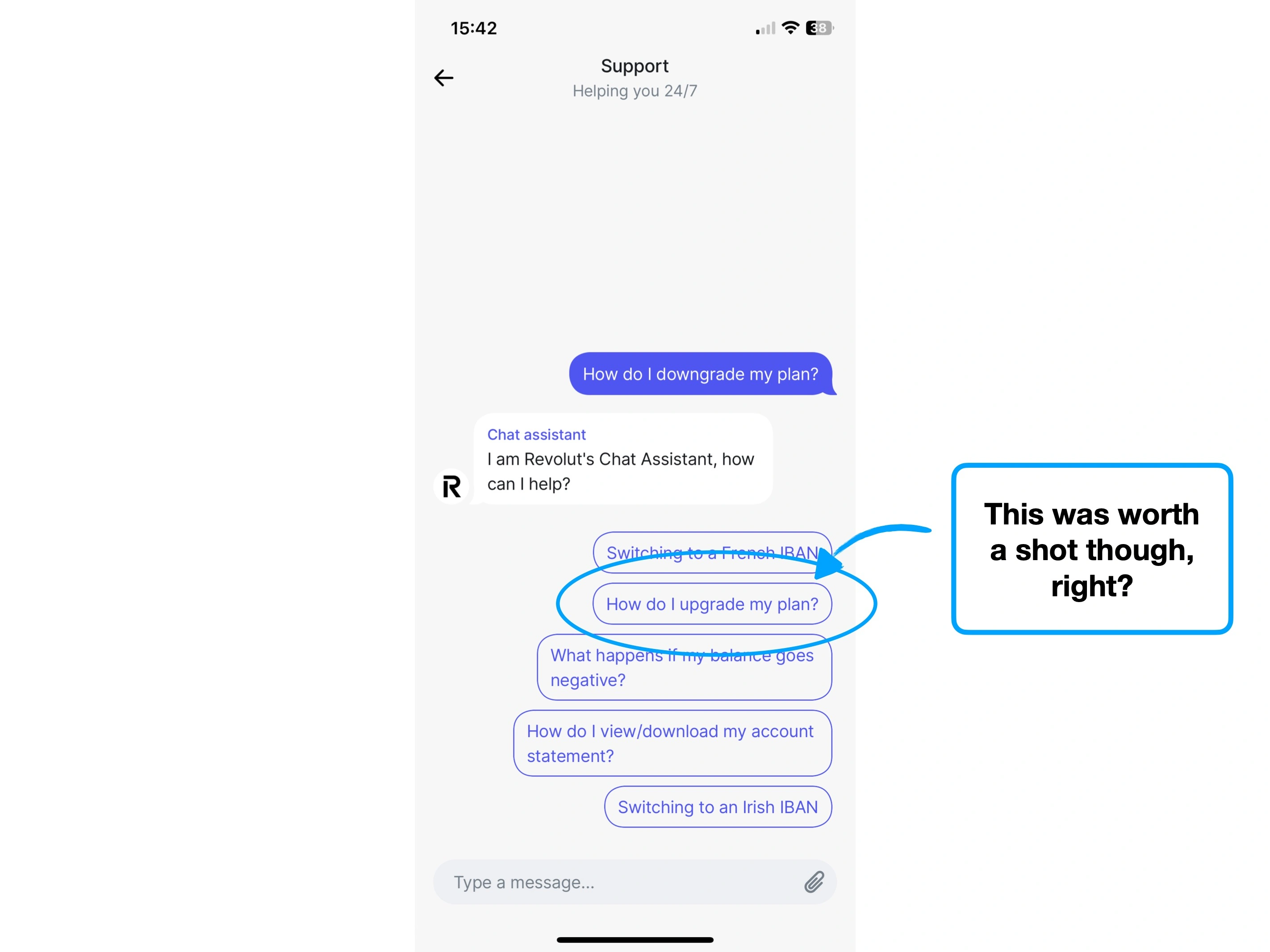

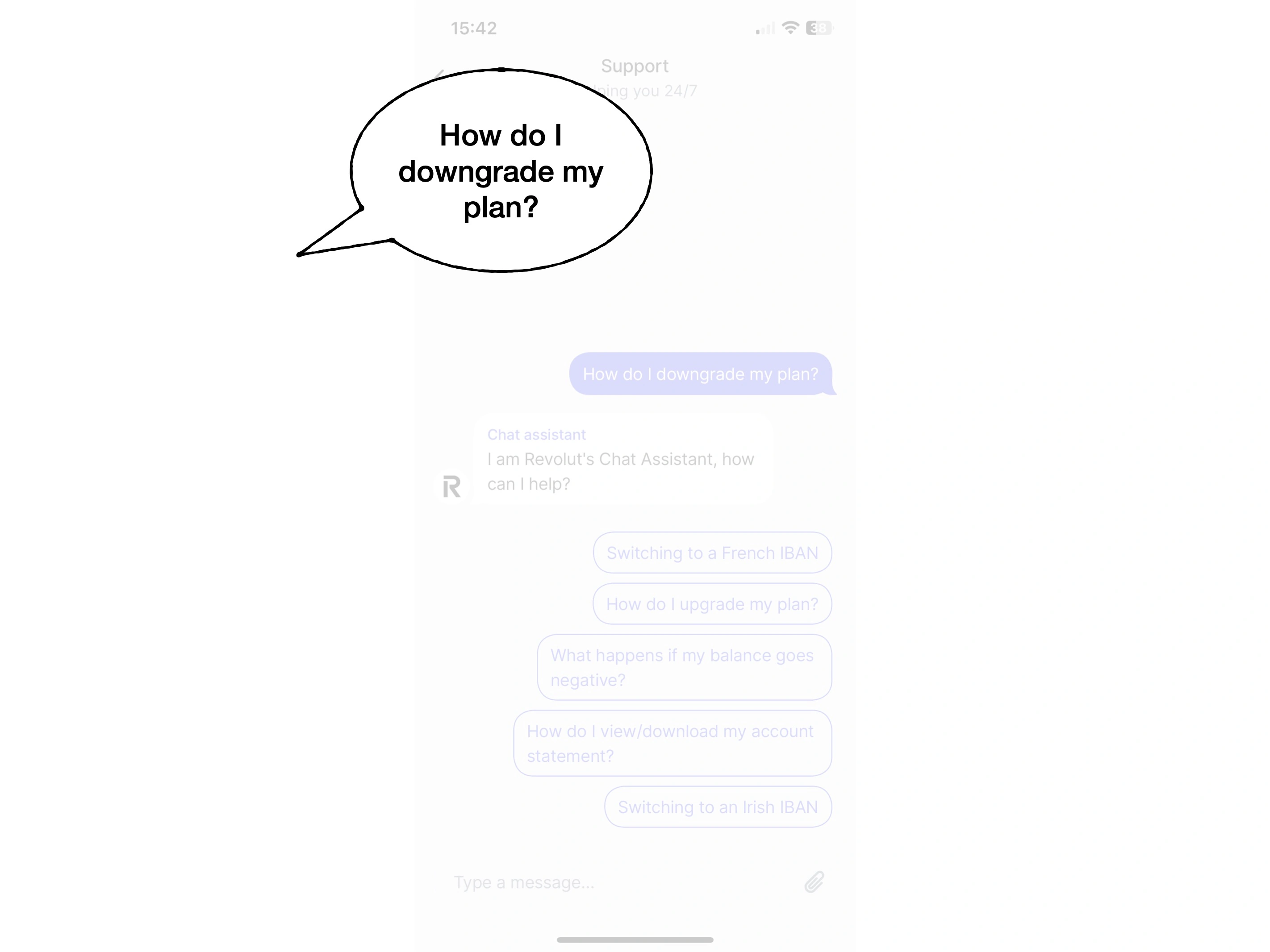

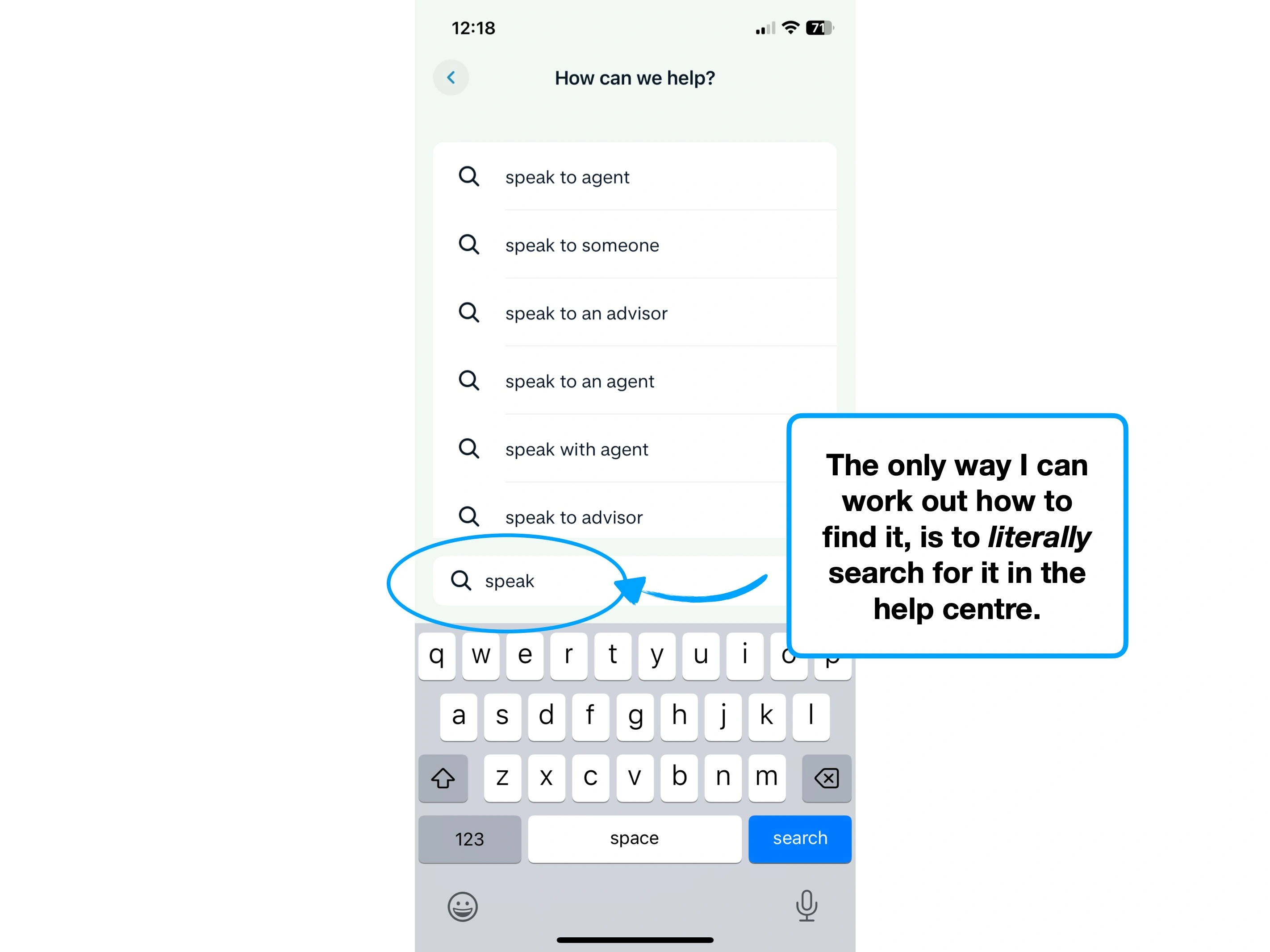

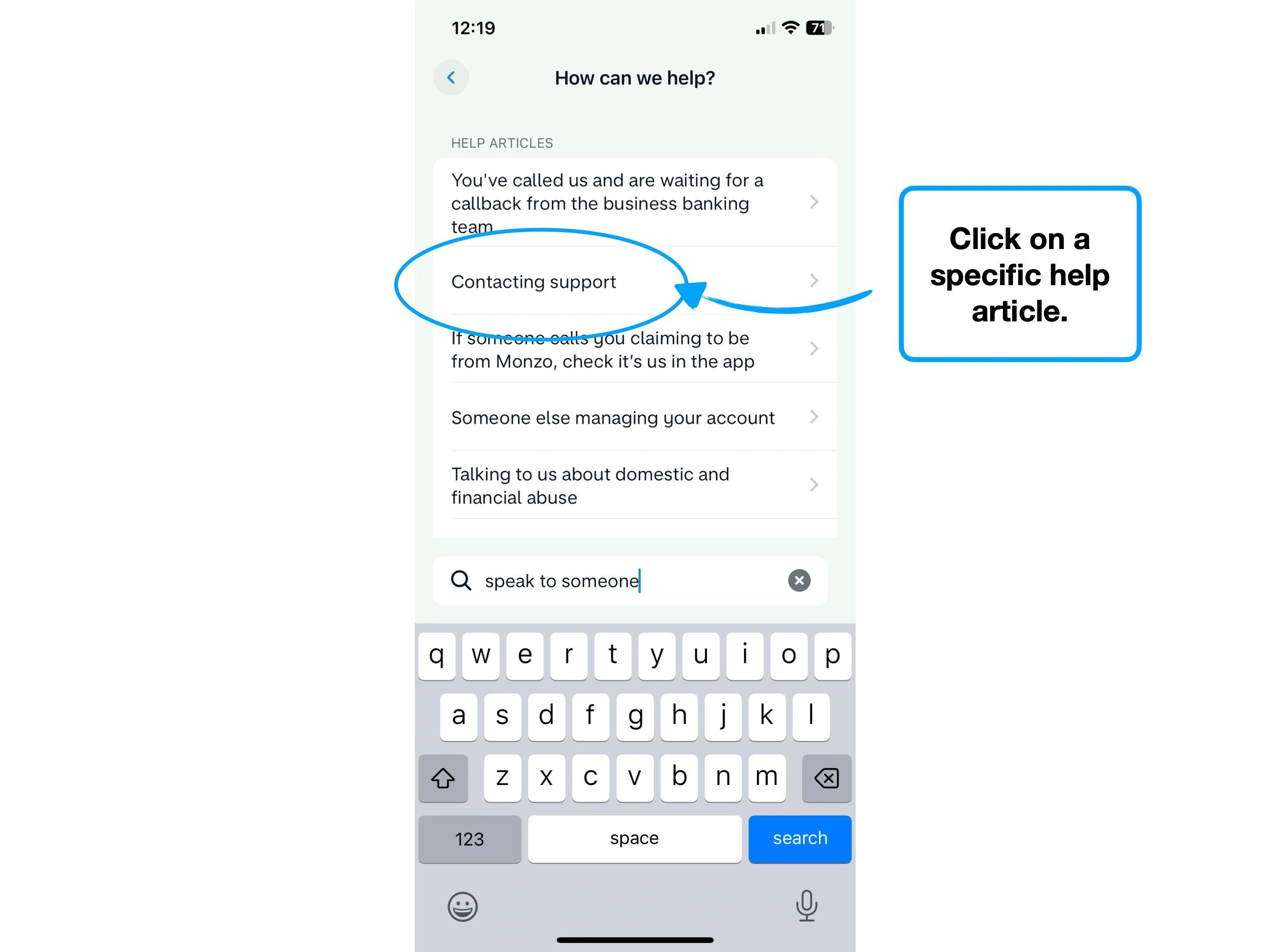

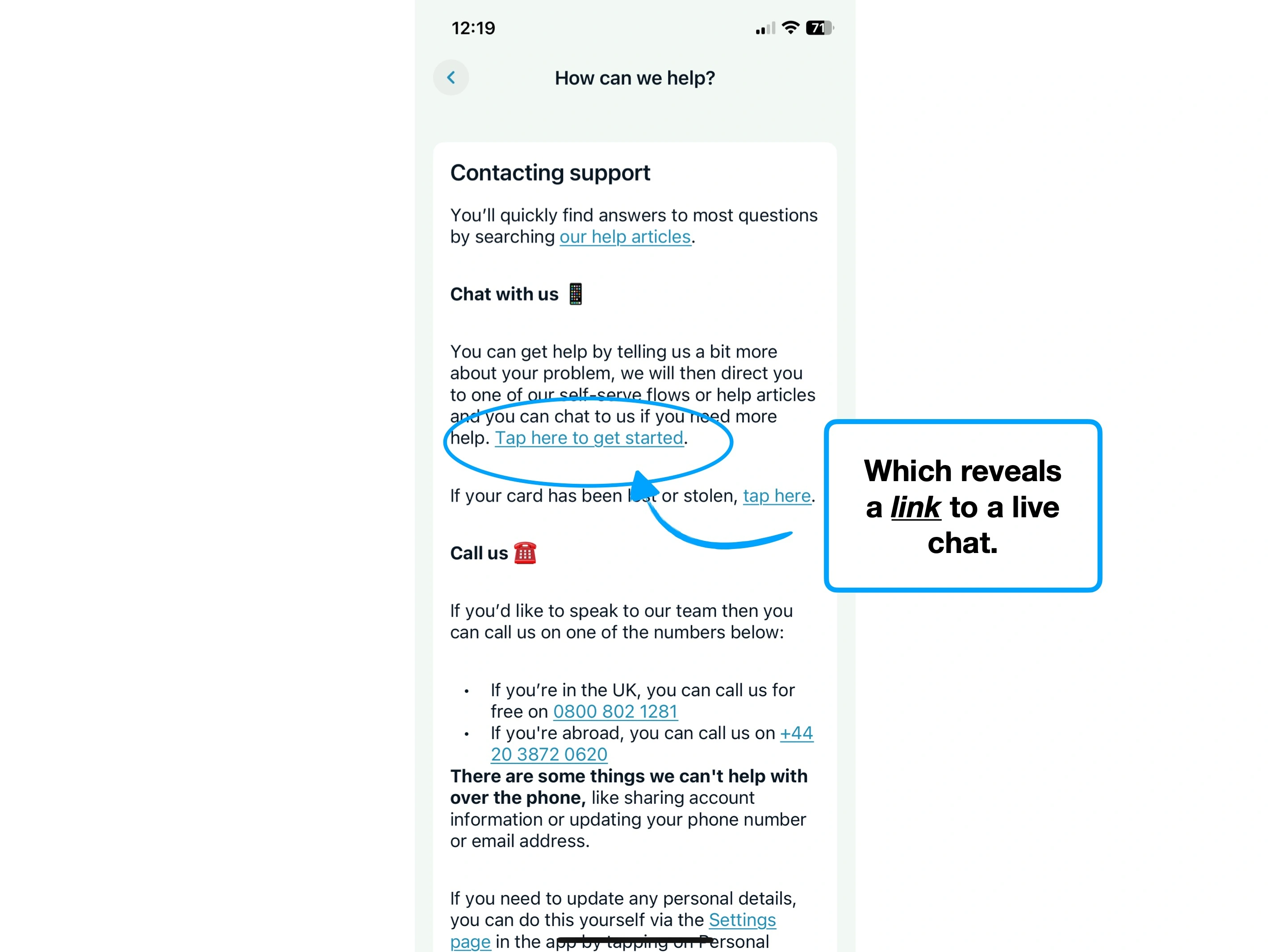

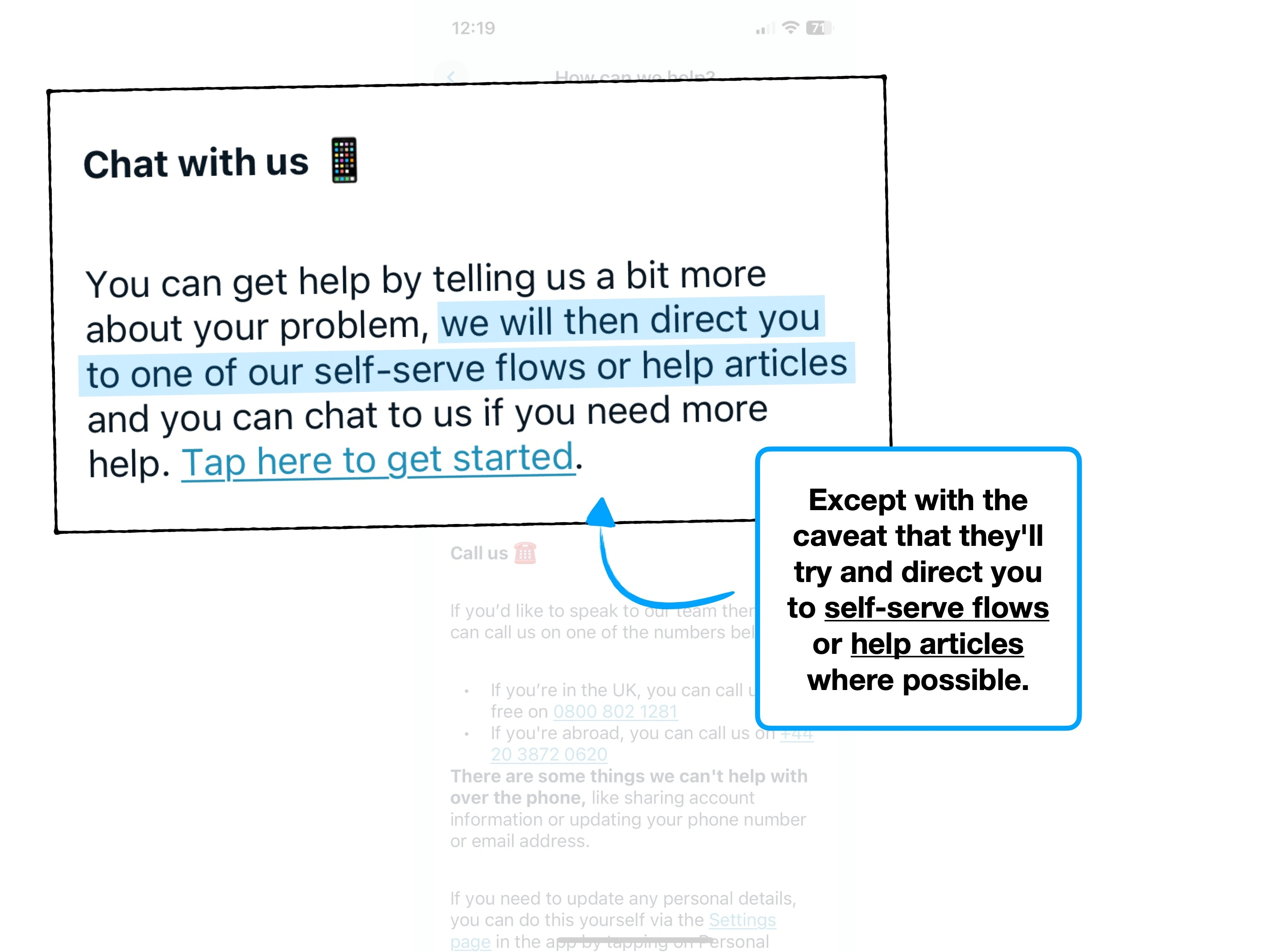

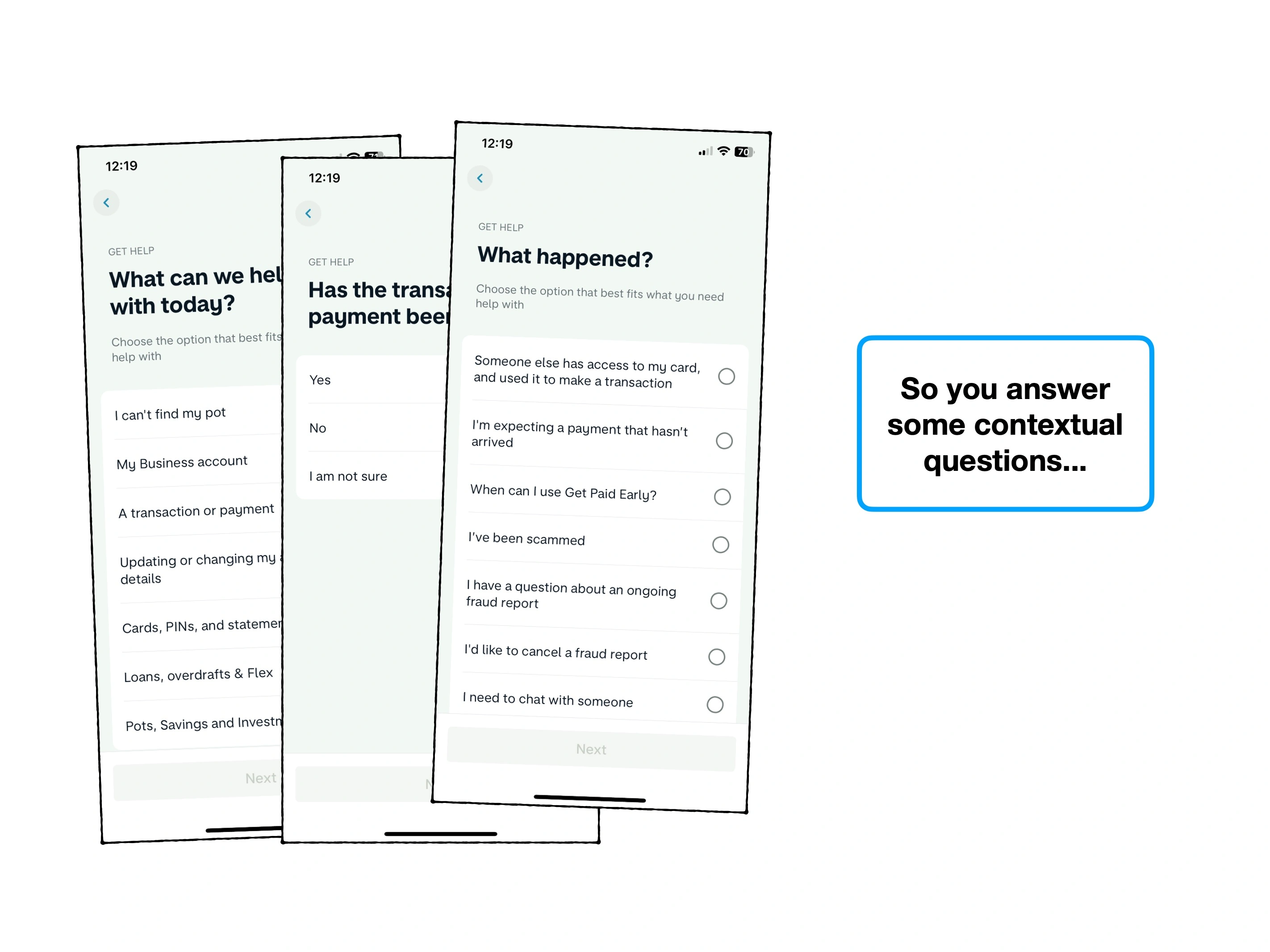

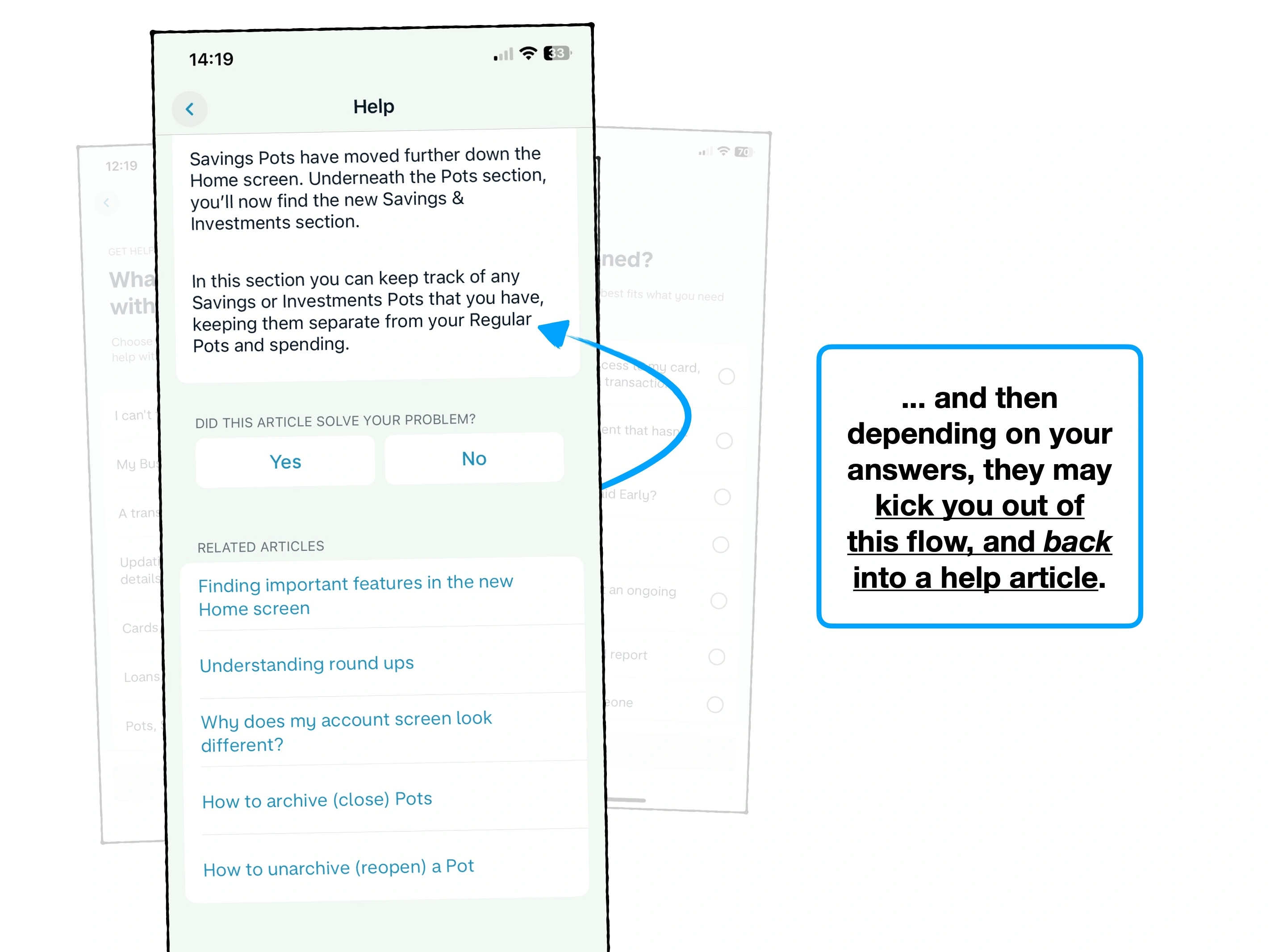

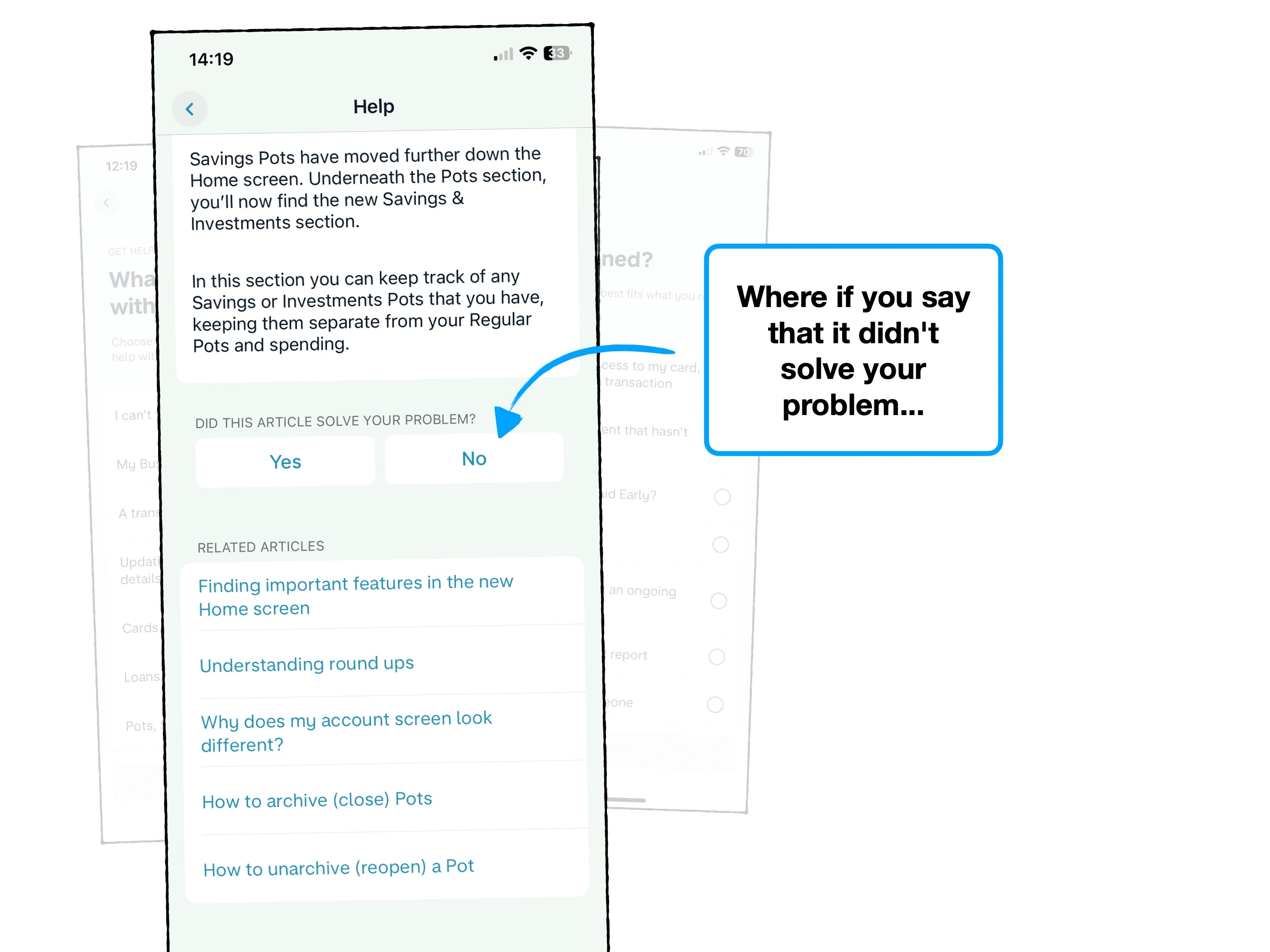

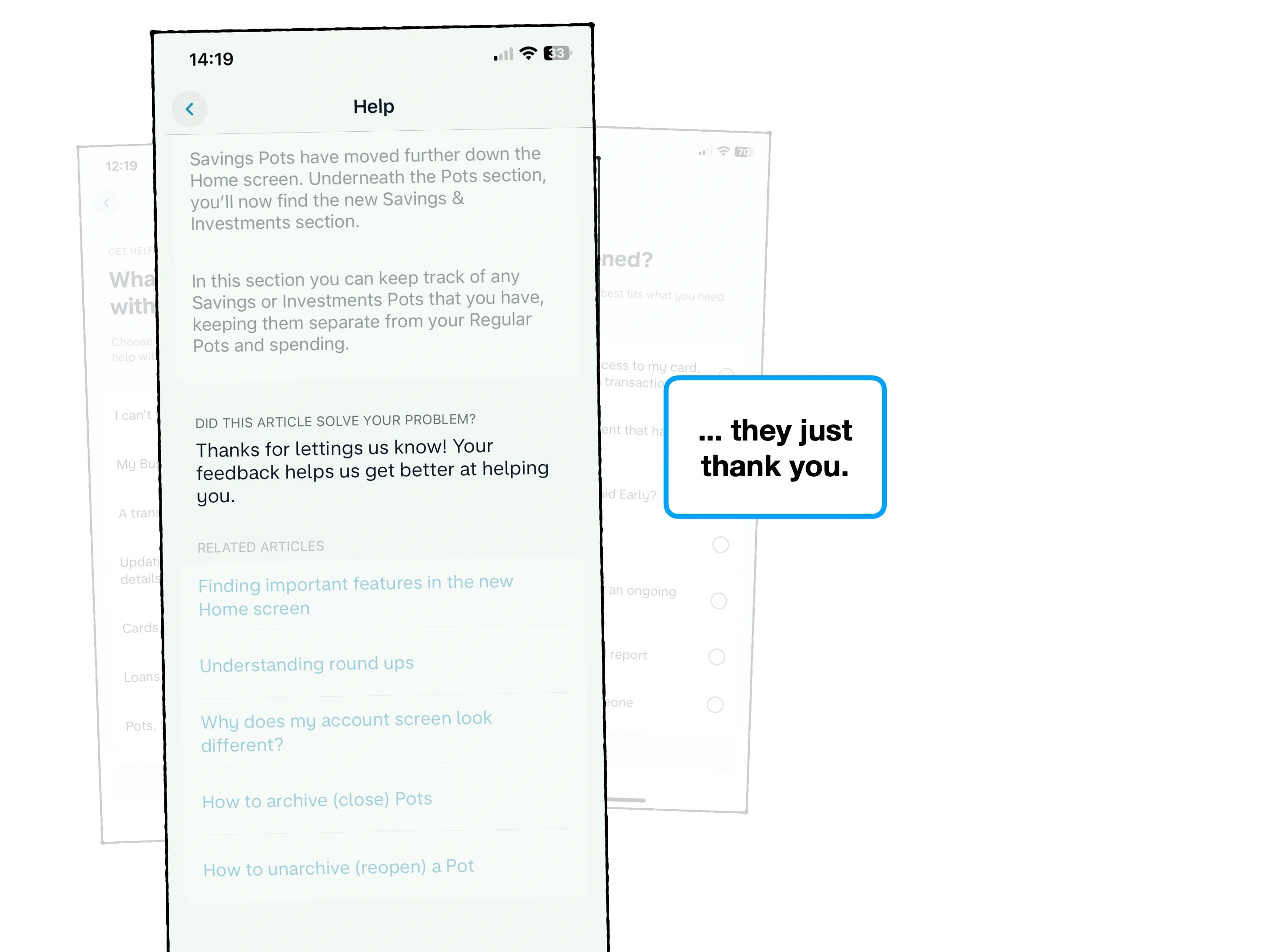

Case study

Diving deeper

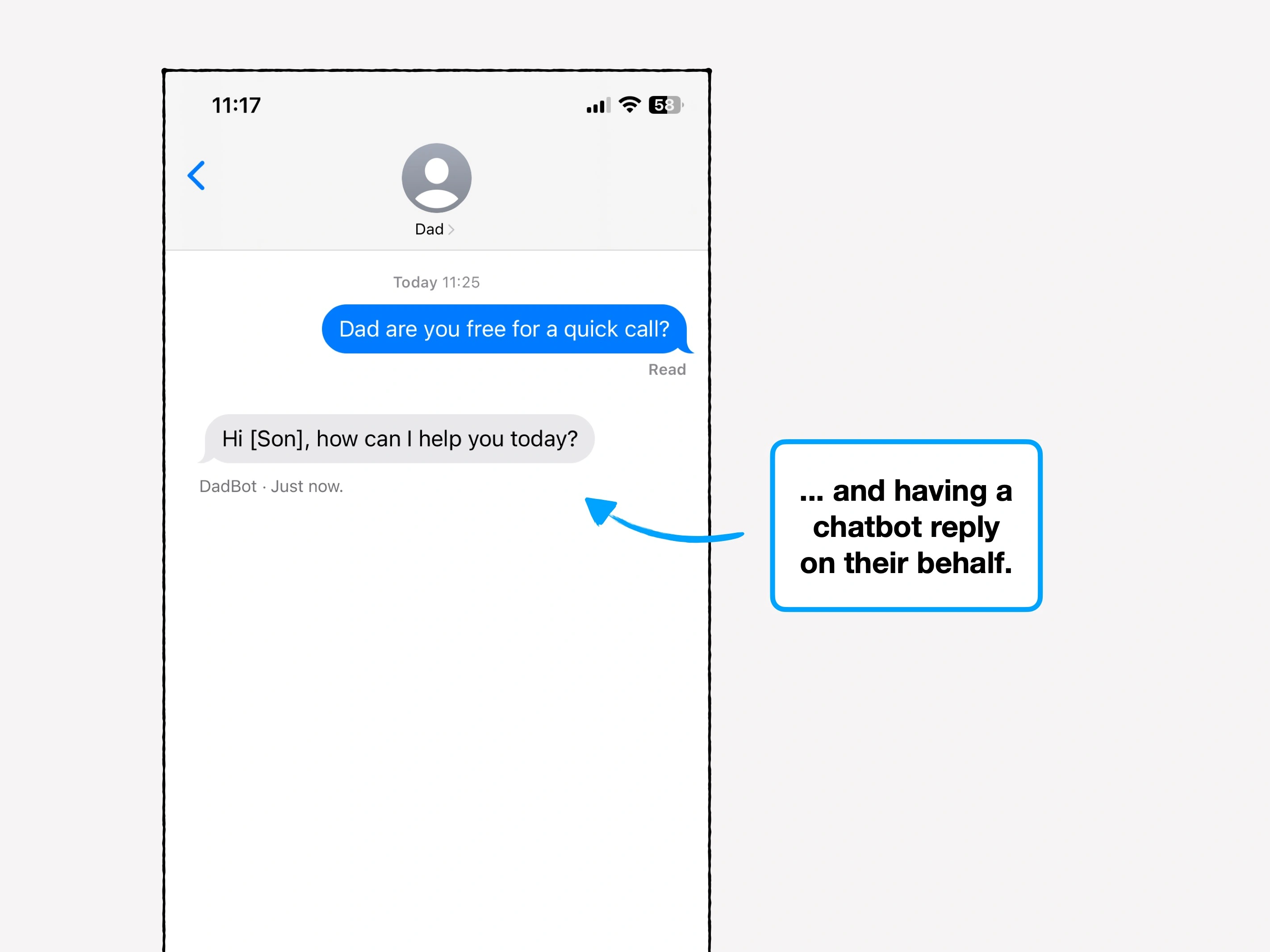

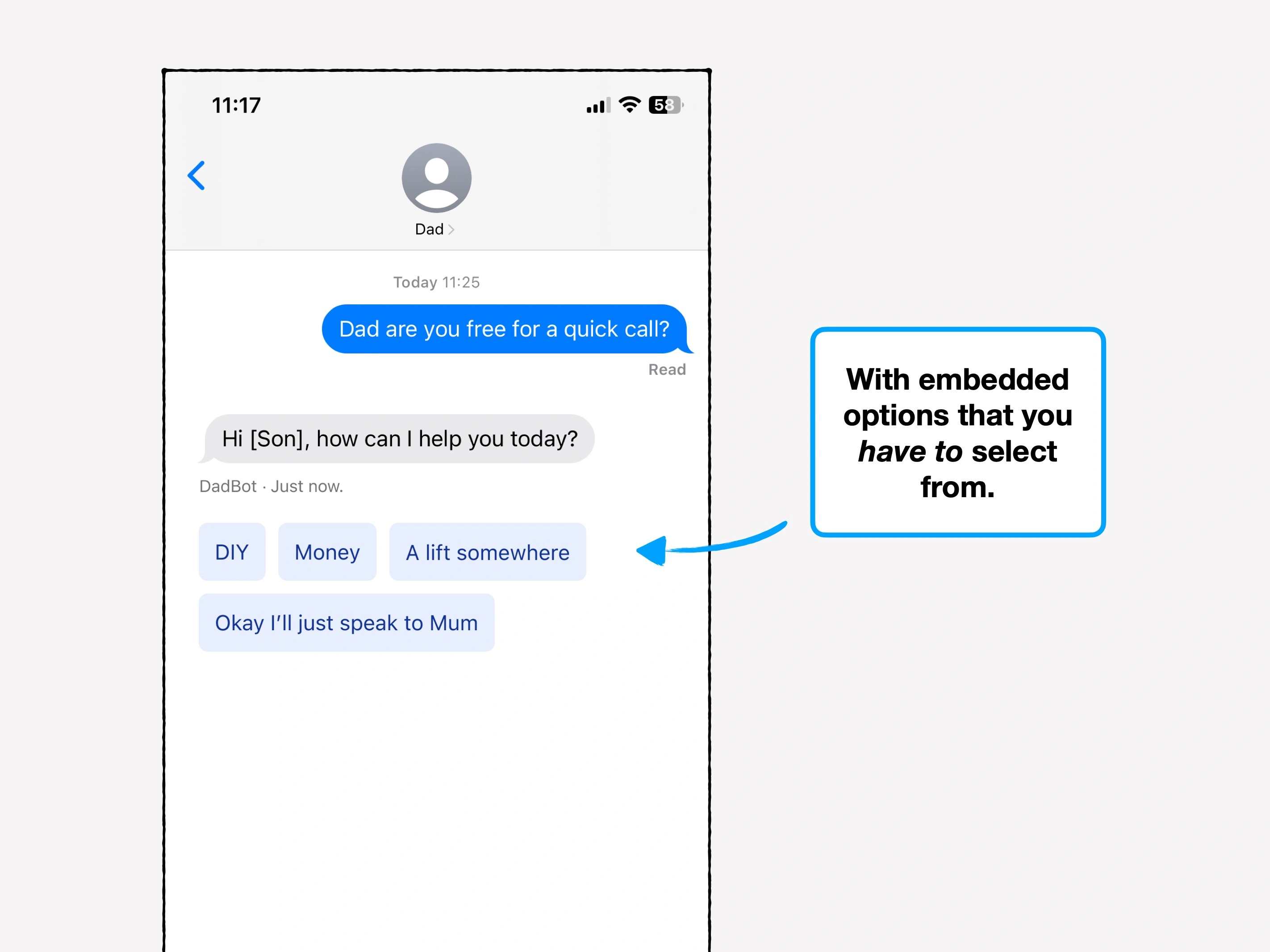

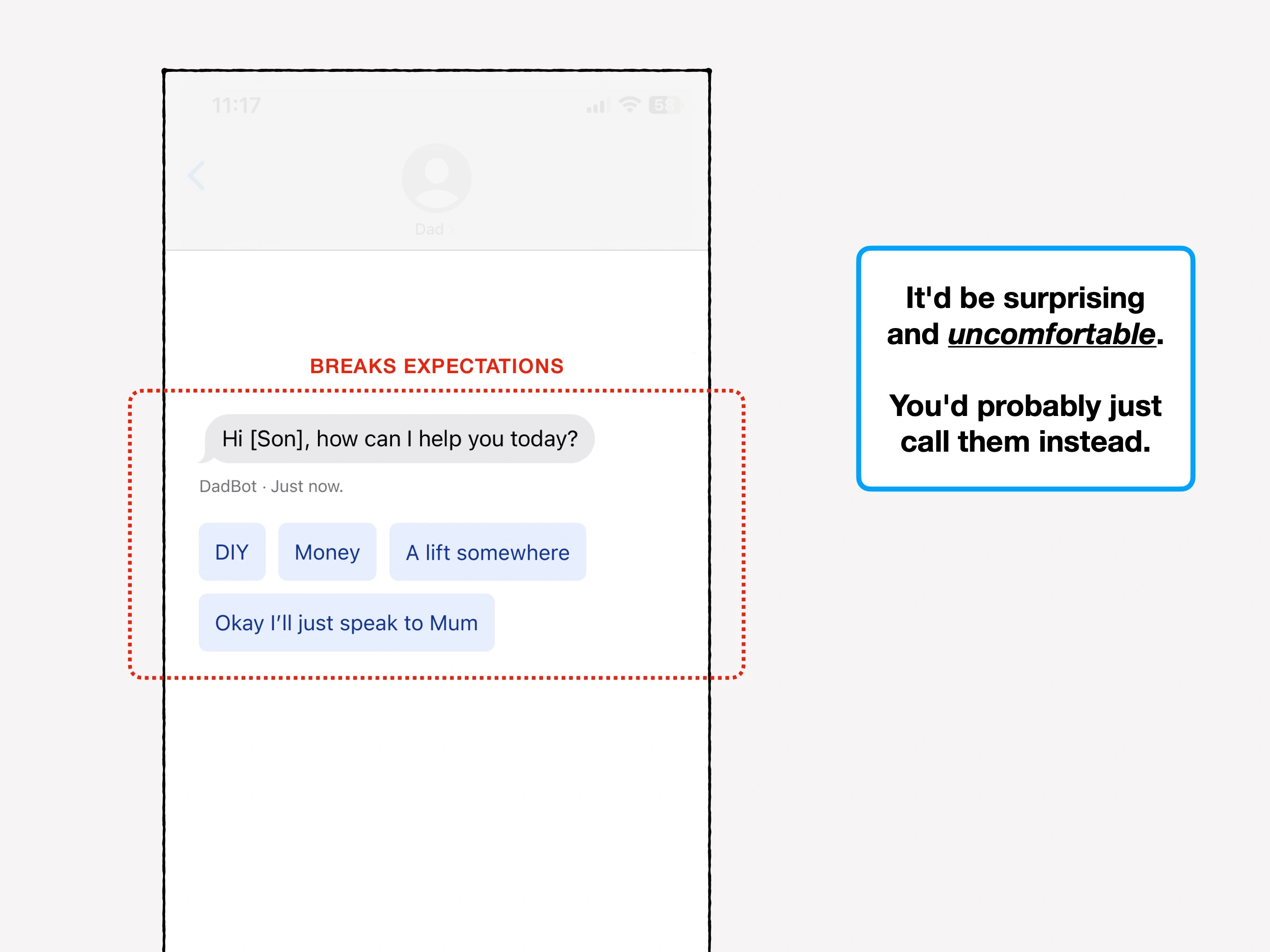

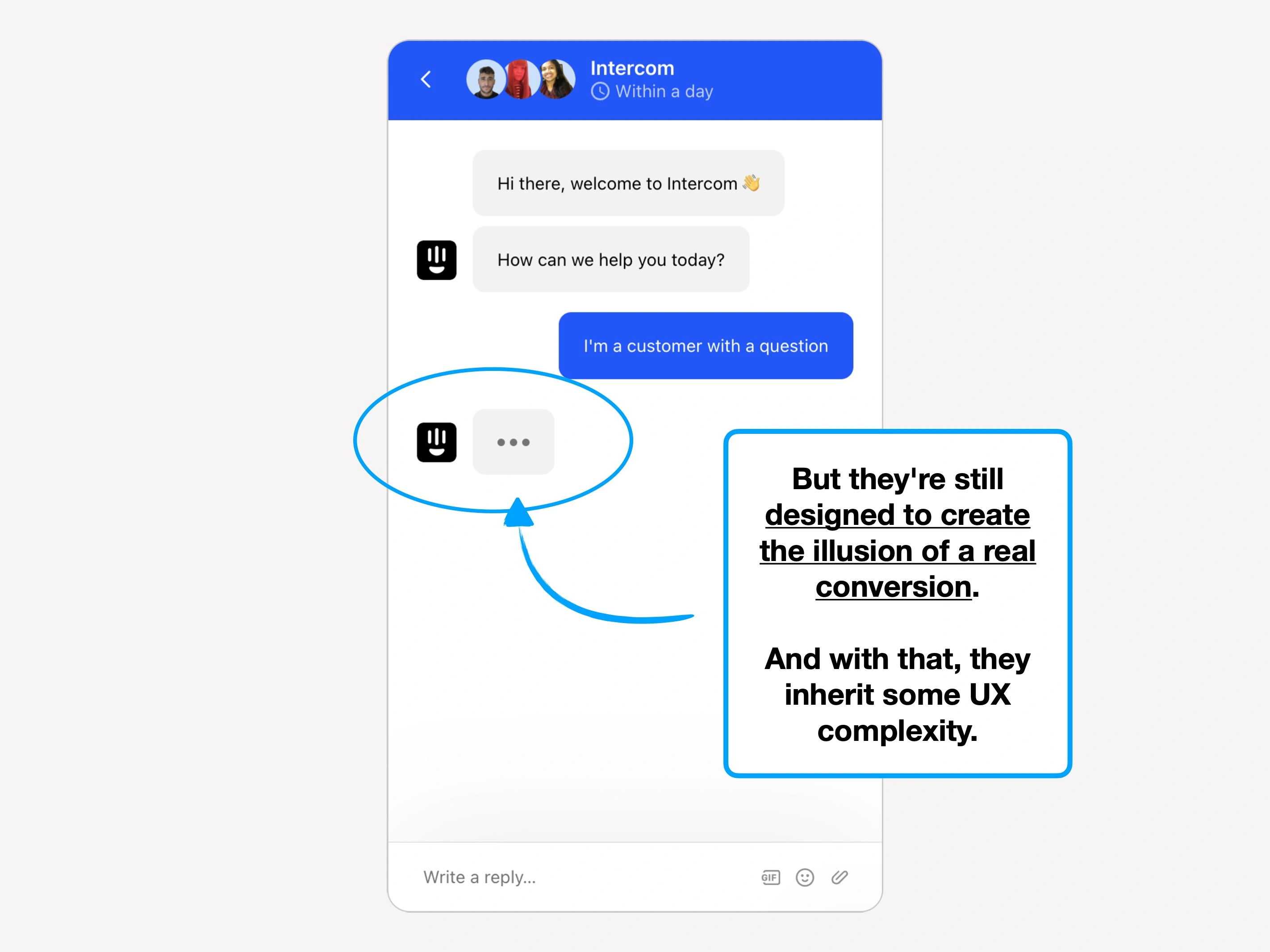

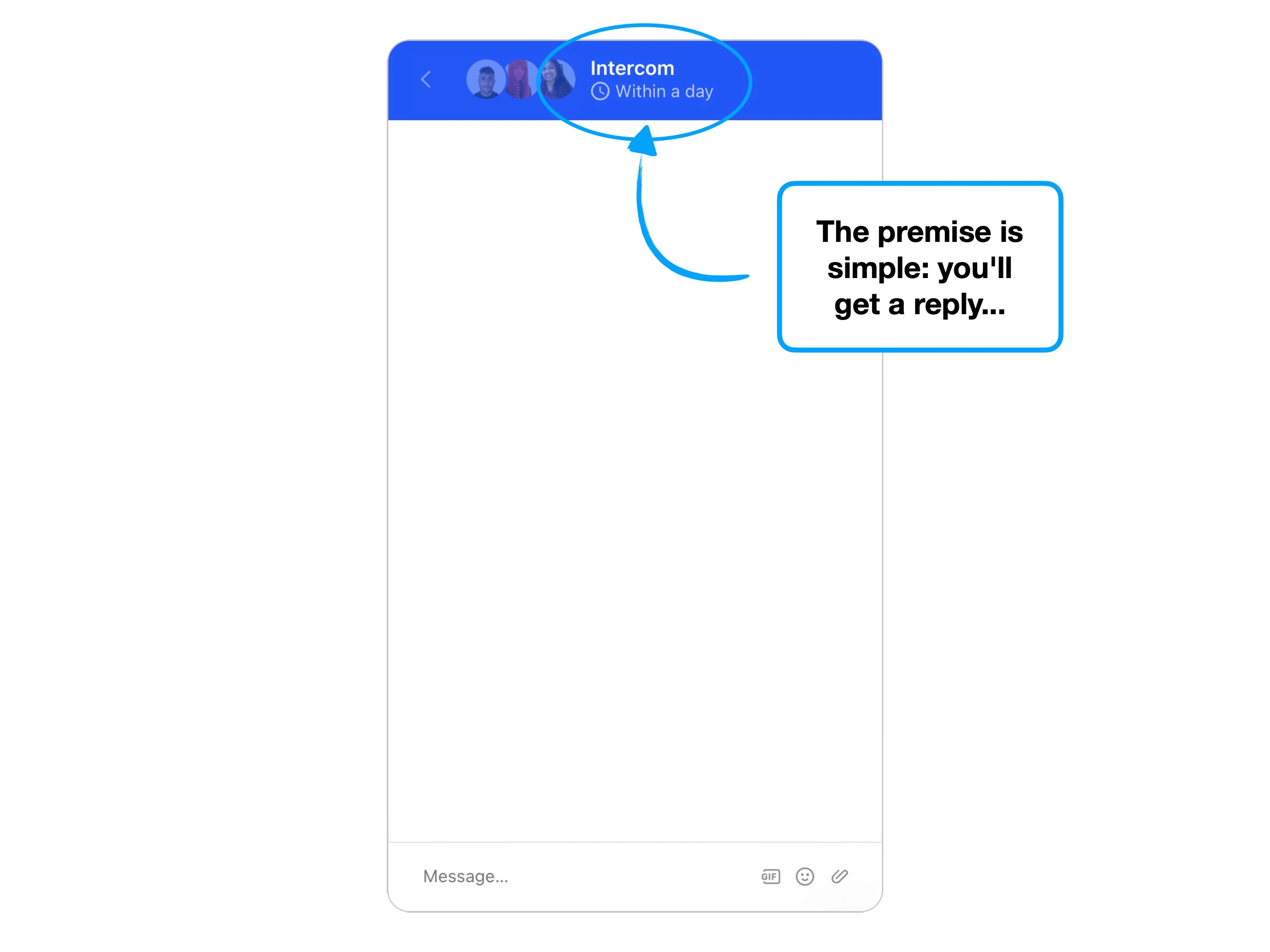

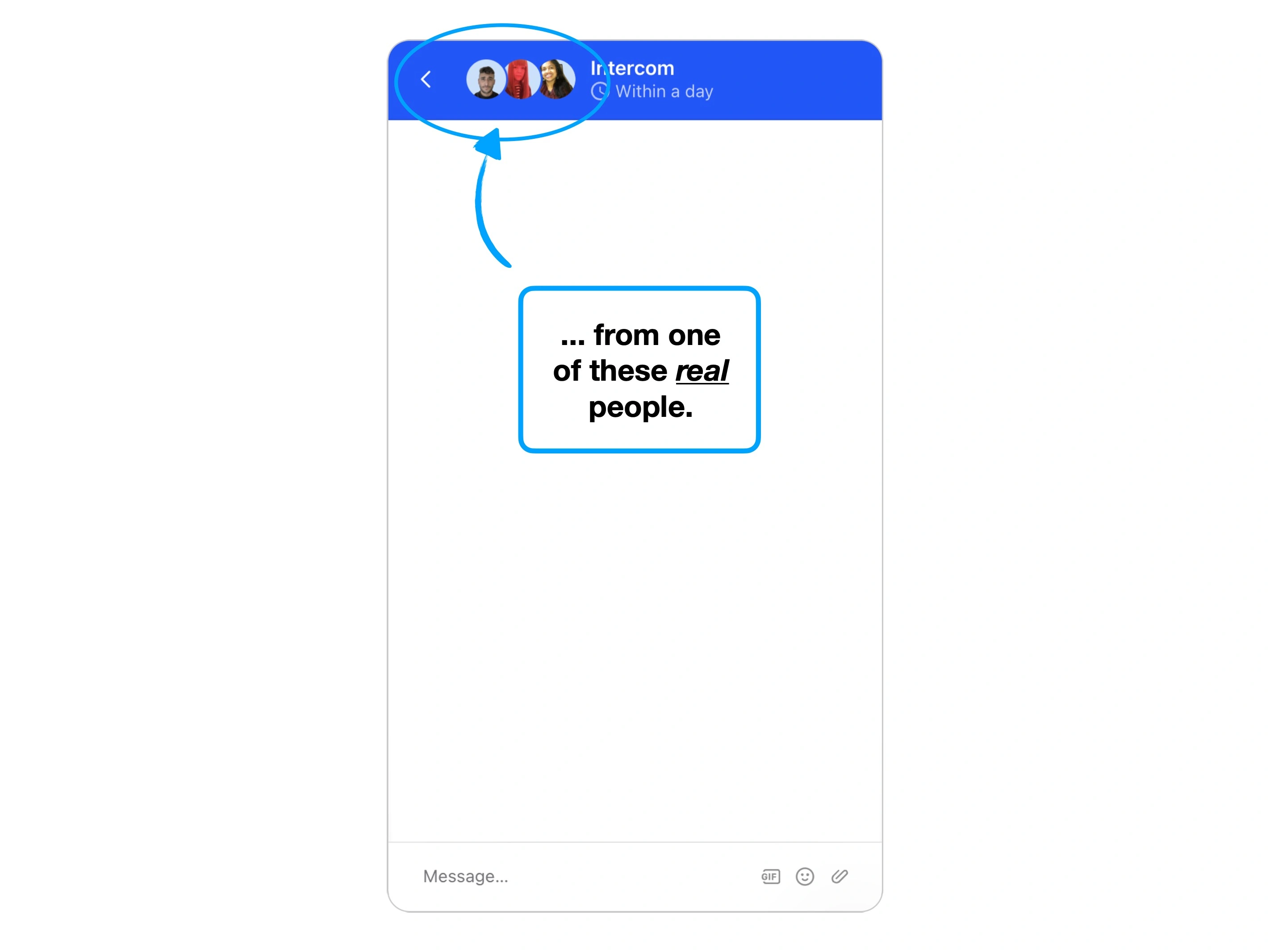

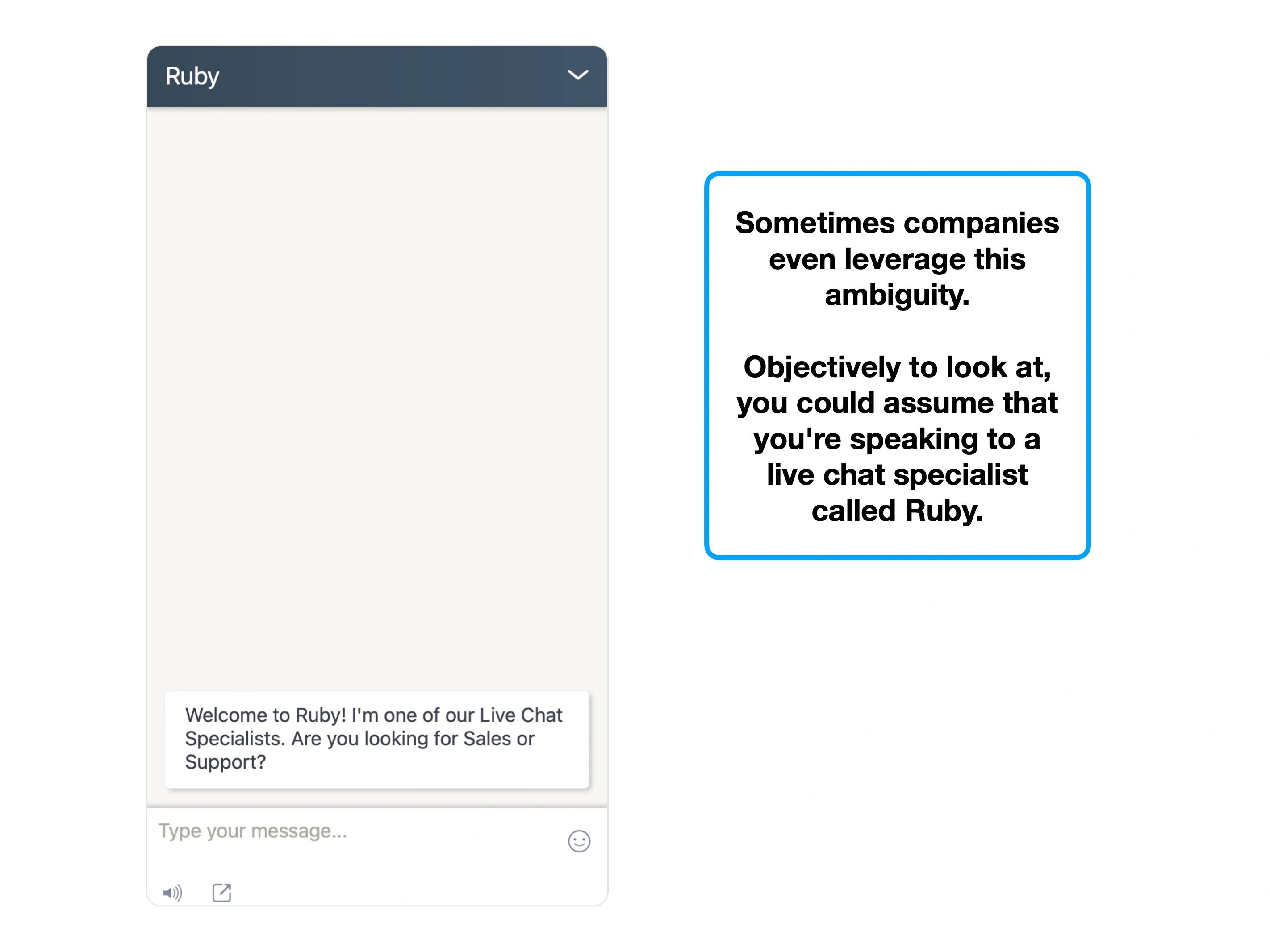

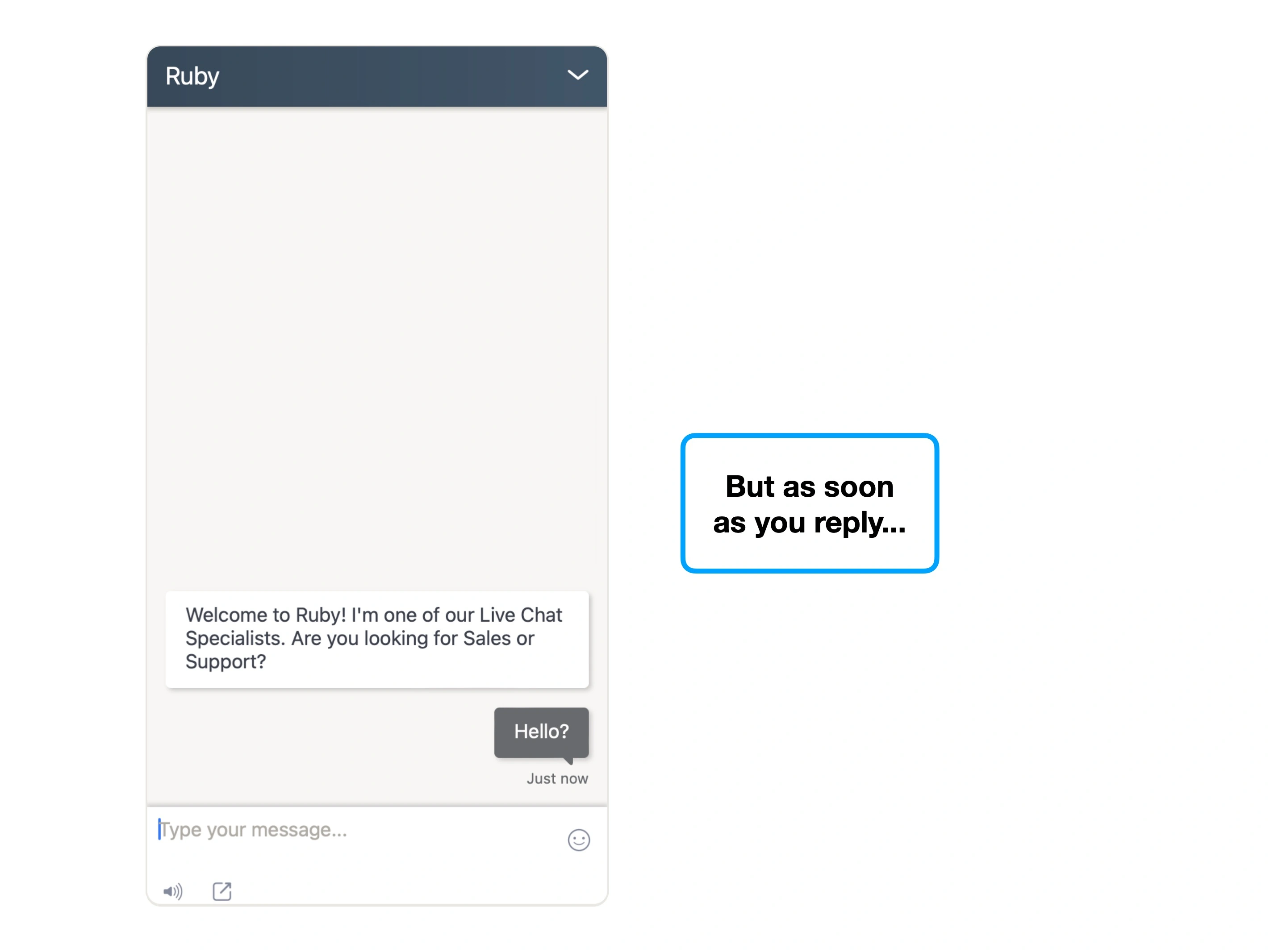

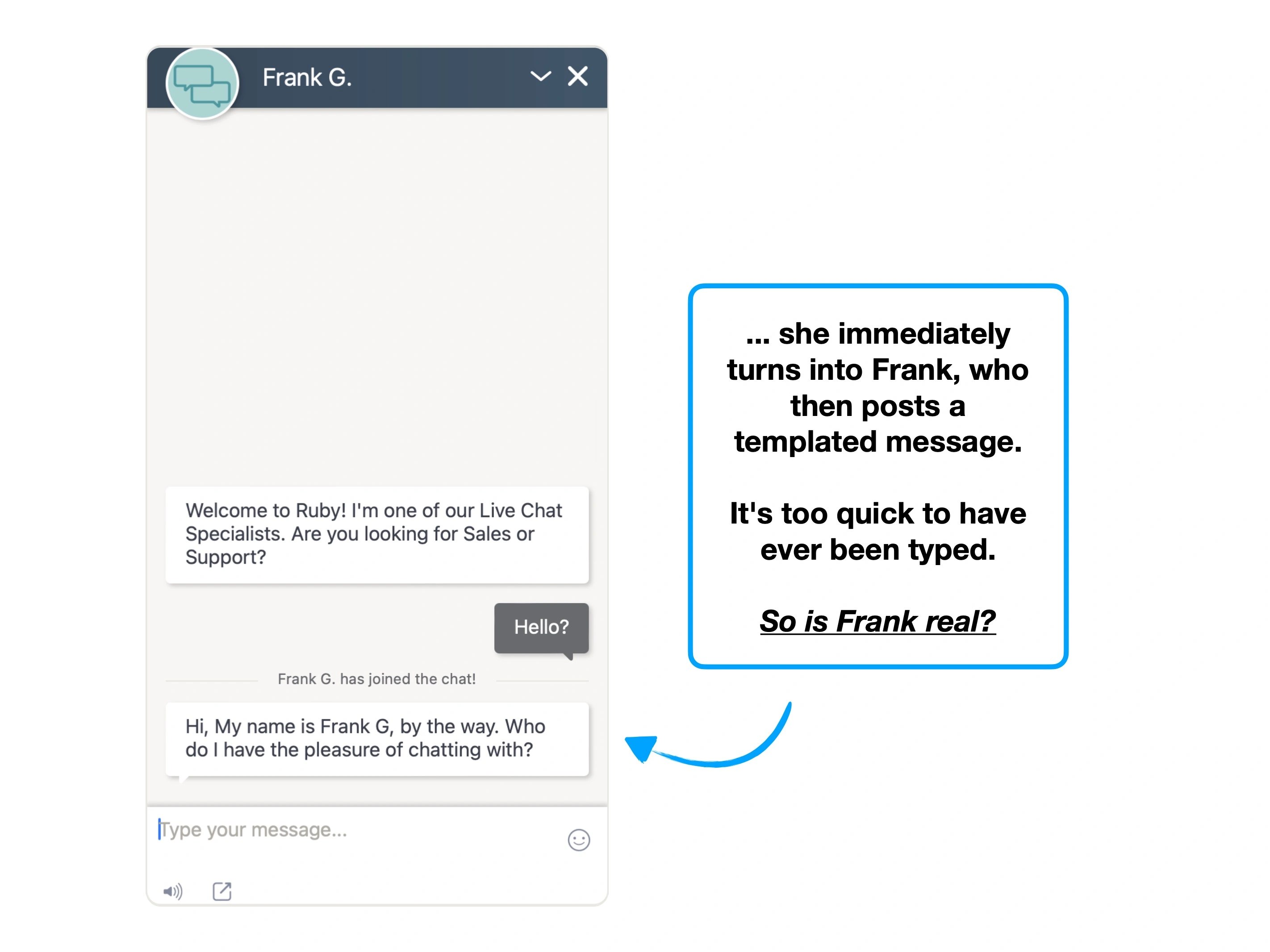

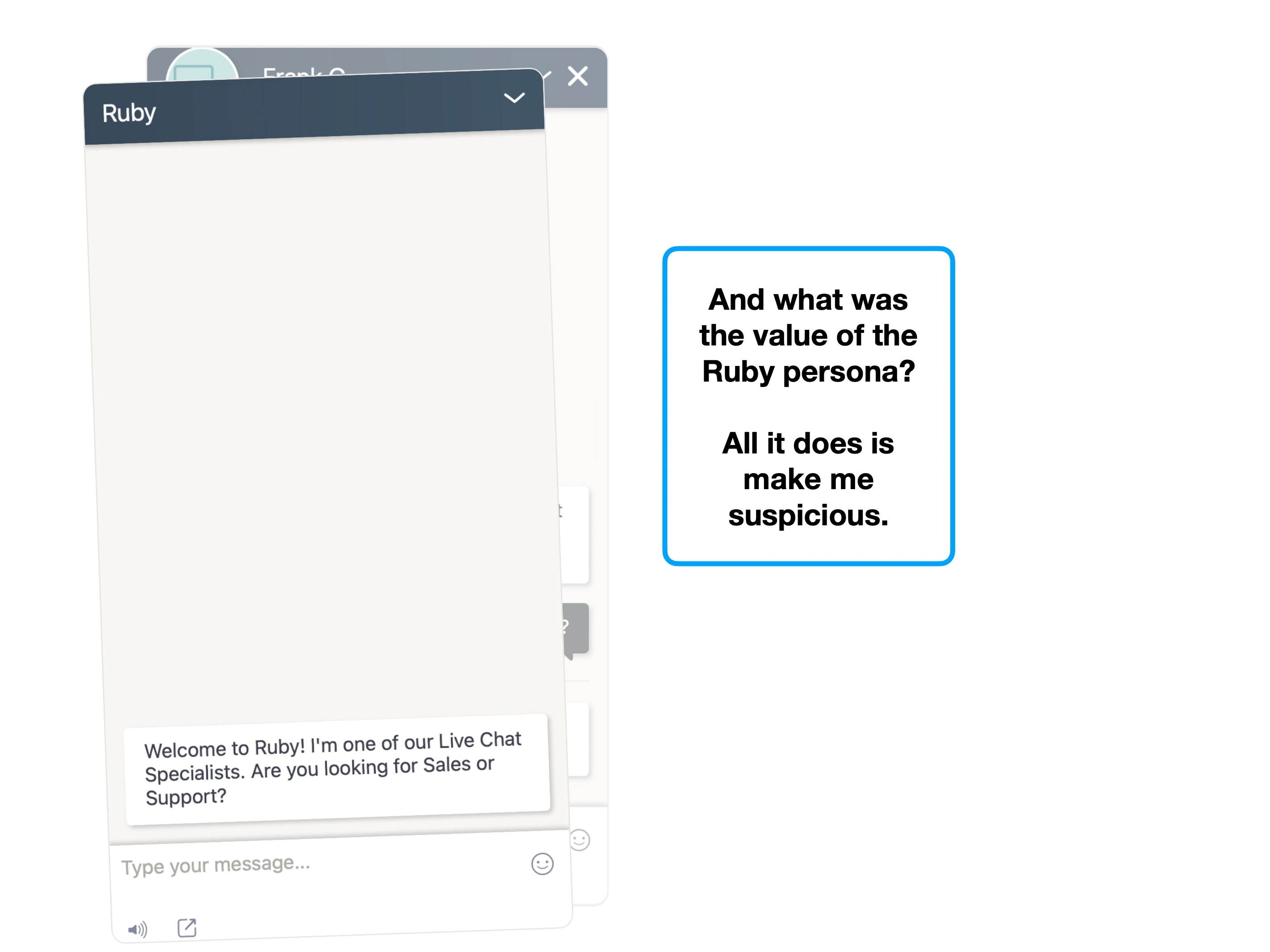

1. The expectation of humanity

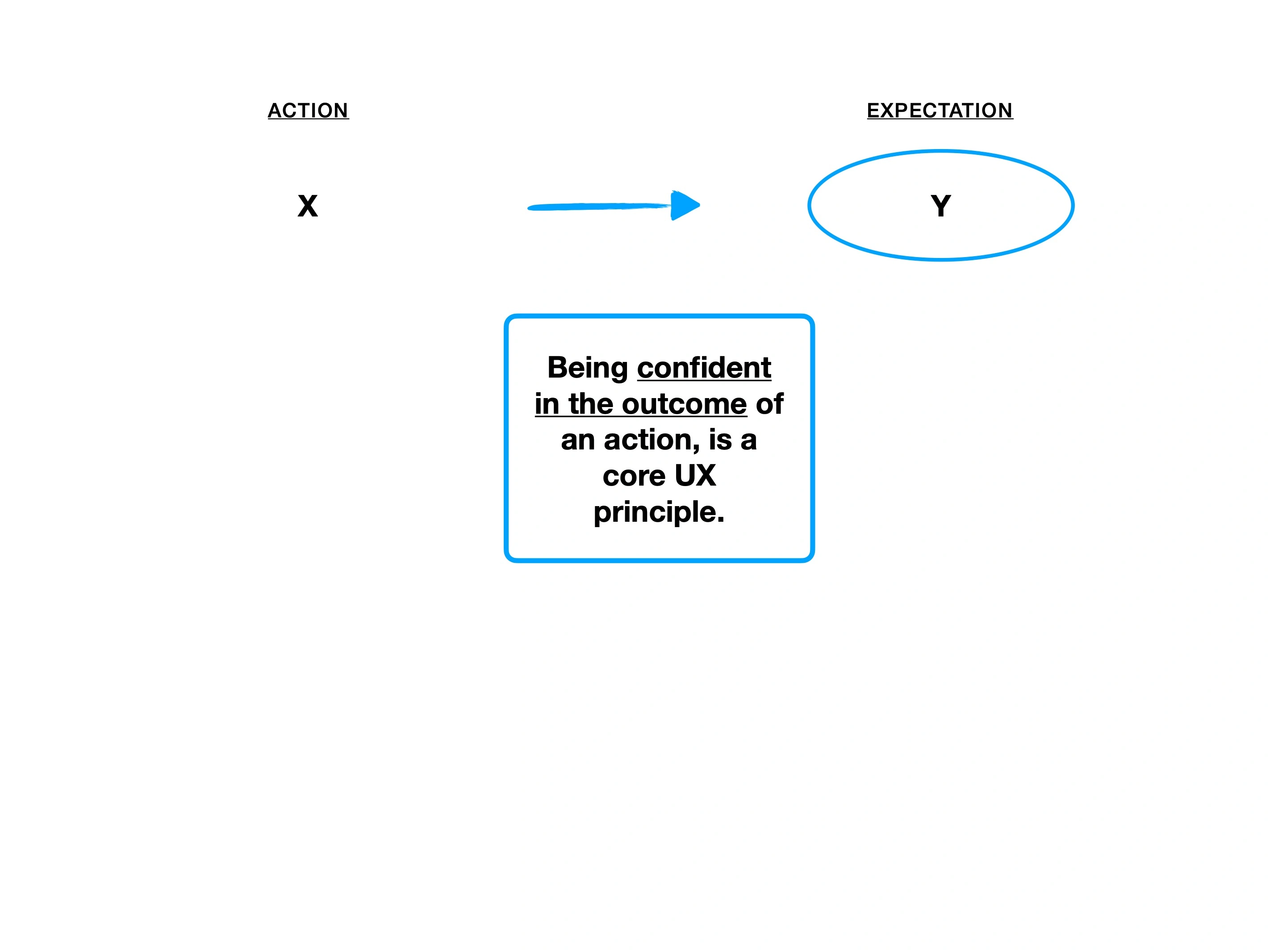

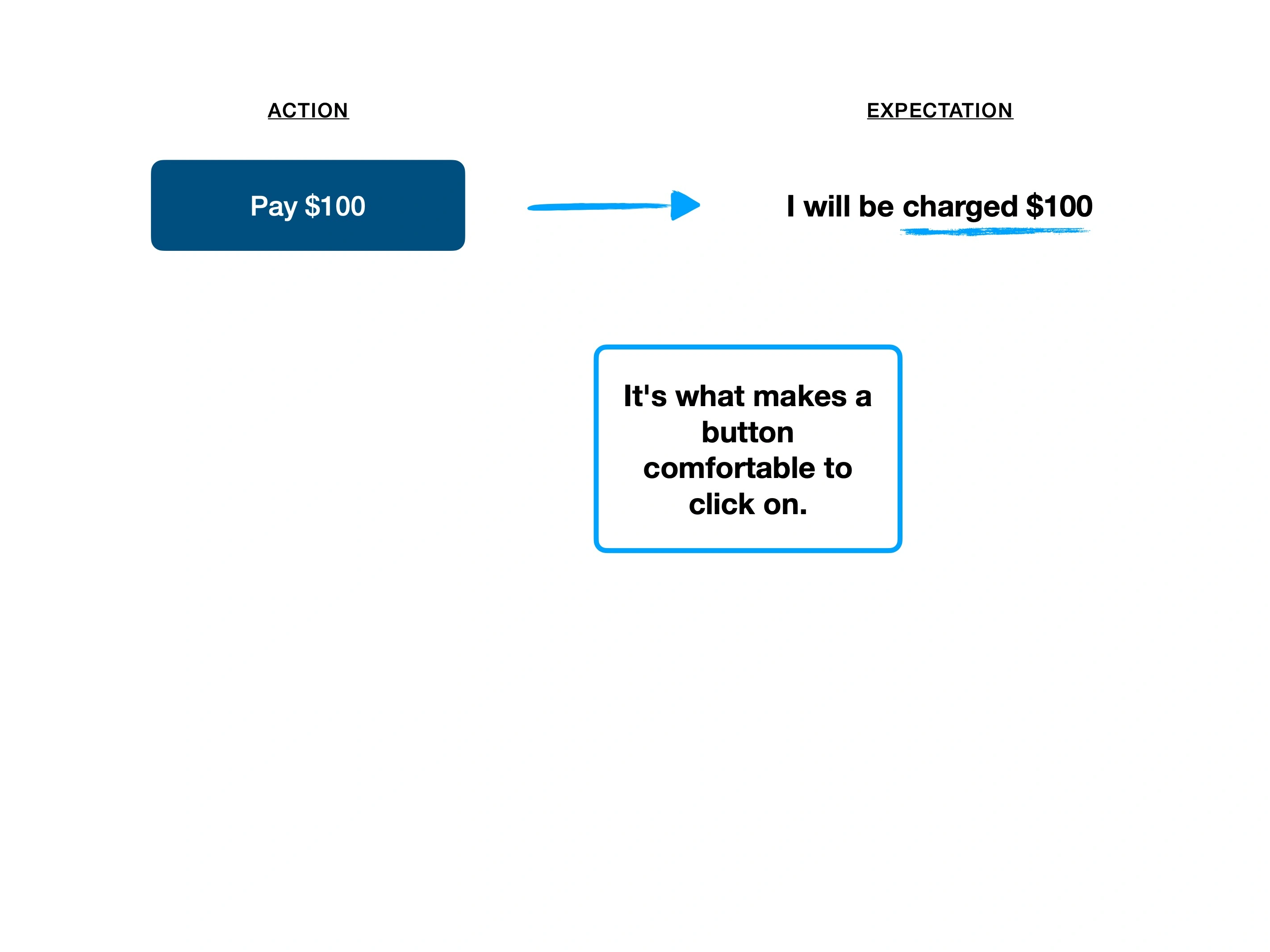

Expectations influence experience, often irrespective of the outcome.

e.g., winning a free slice of cake could ruin your day, if you were expecting the prize to be a car.

Apple's software usually feels reliable and predictable—that's part of why people say it "just works".

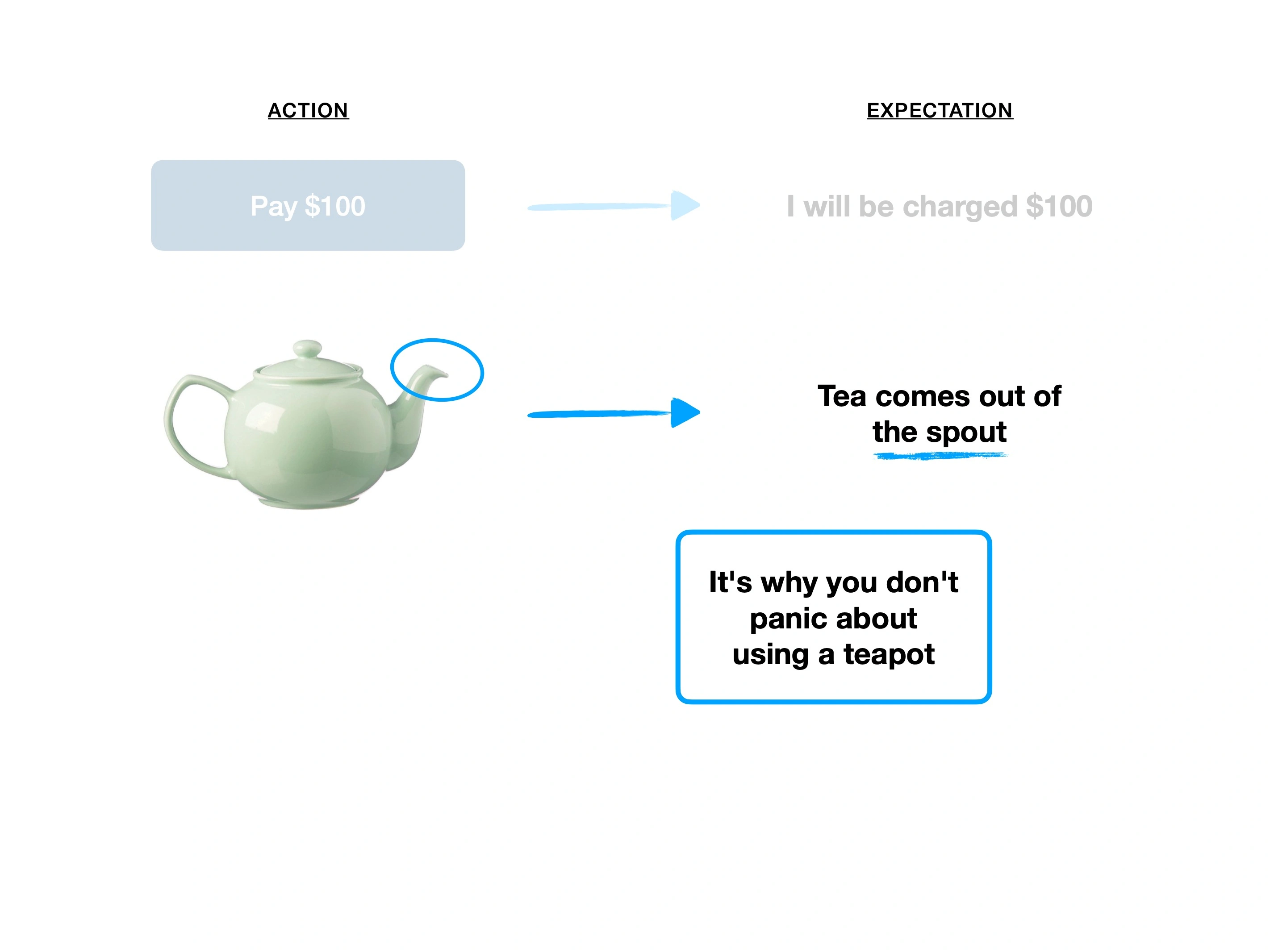

Now consider a chatbot that "thinks"; you've got a wide range of possible outcomes.

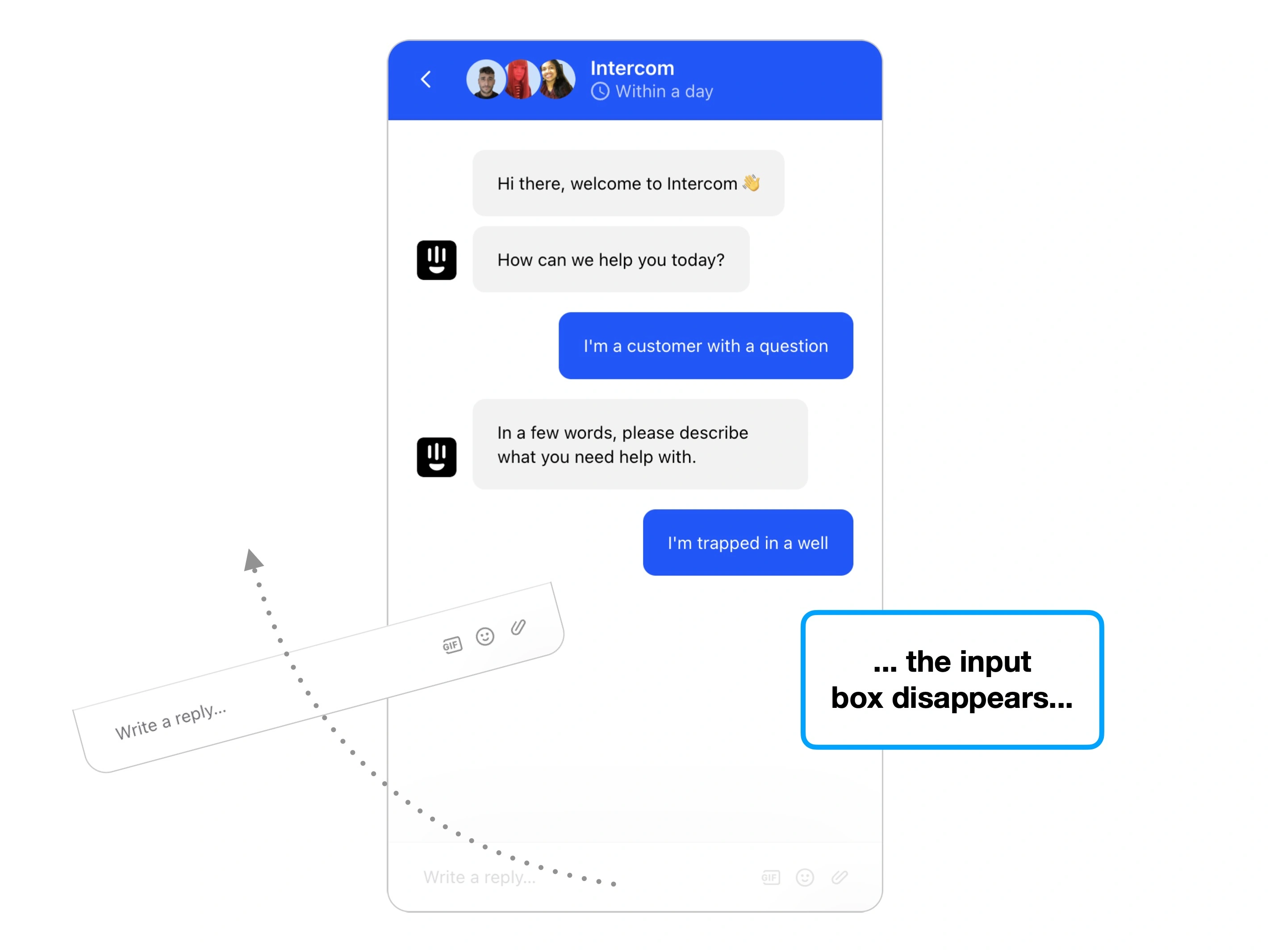

As we saw in the study, live chat (or more accurately, chatbots and decision trees) introduces some major unknowns into a conversation:

🙋‍♂️

Will I actually get to speak to a person?

Or will this turn into a multi-day email exchange?

🤖

Am I talking to a real person right now?

e.g., is 'Dory' a real human, or a virtual agent?

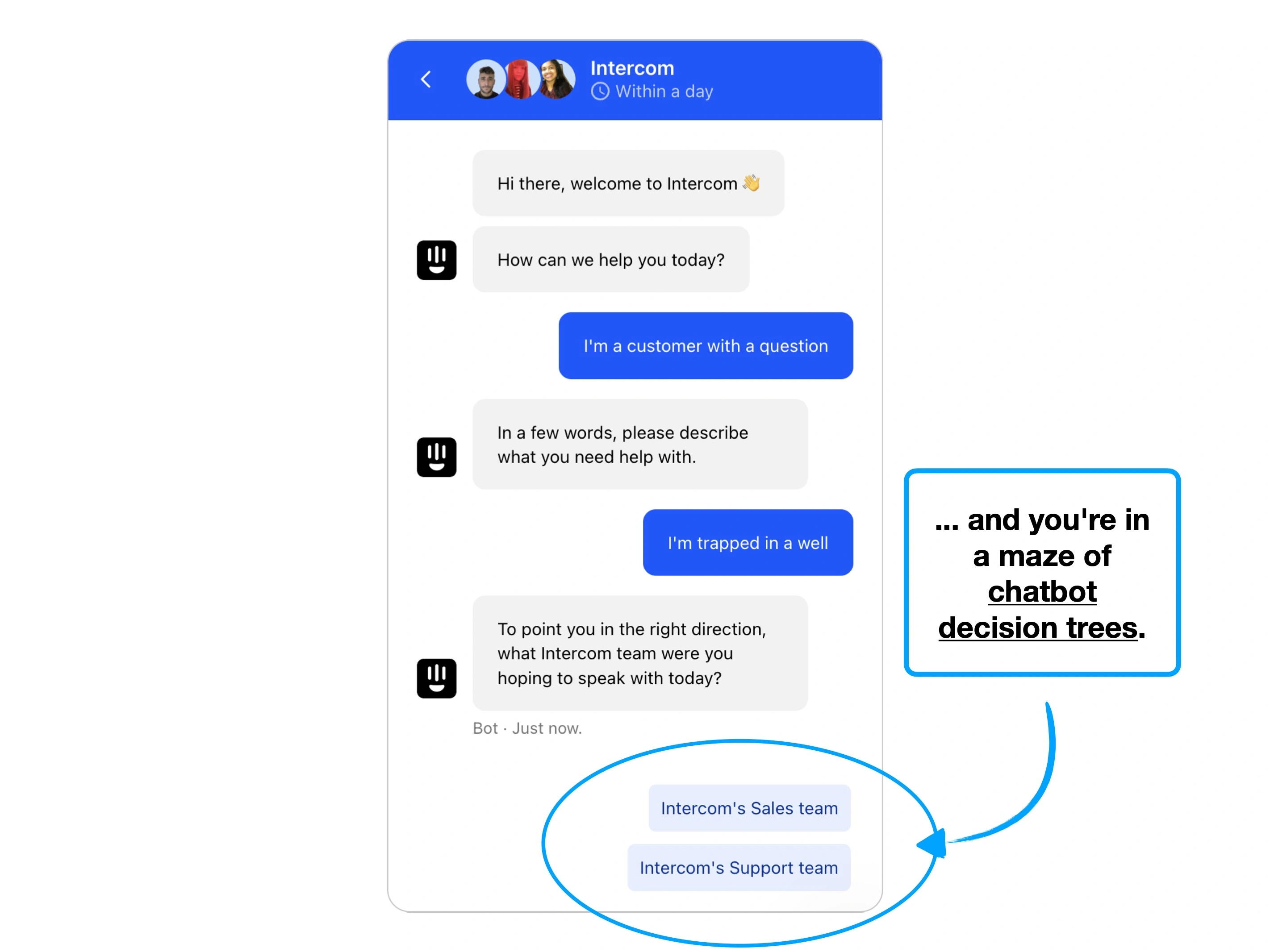

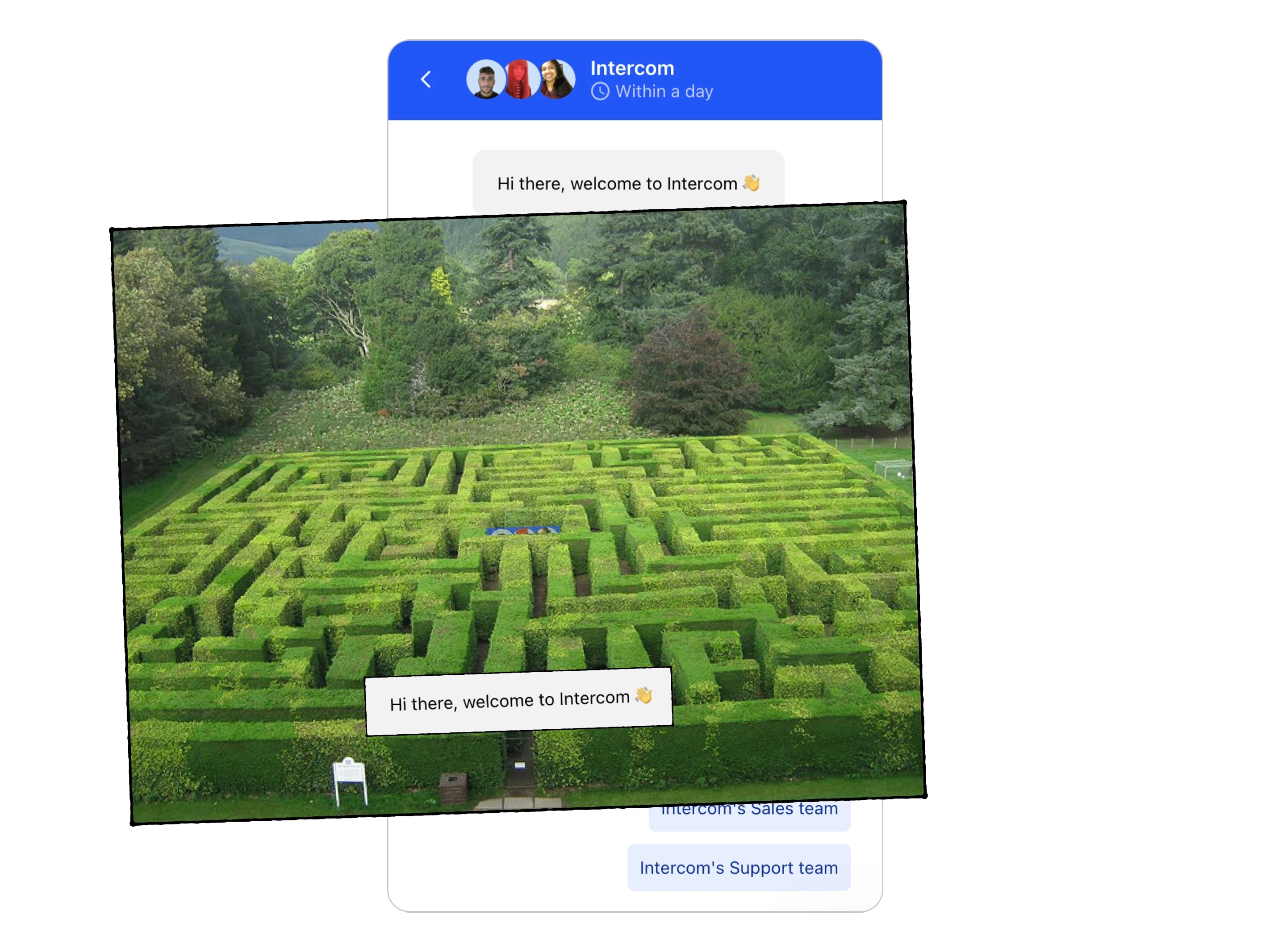

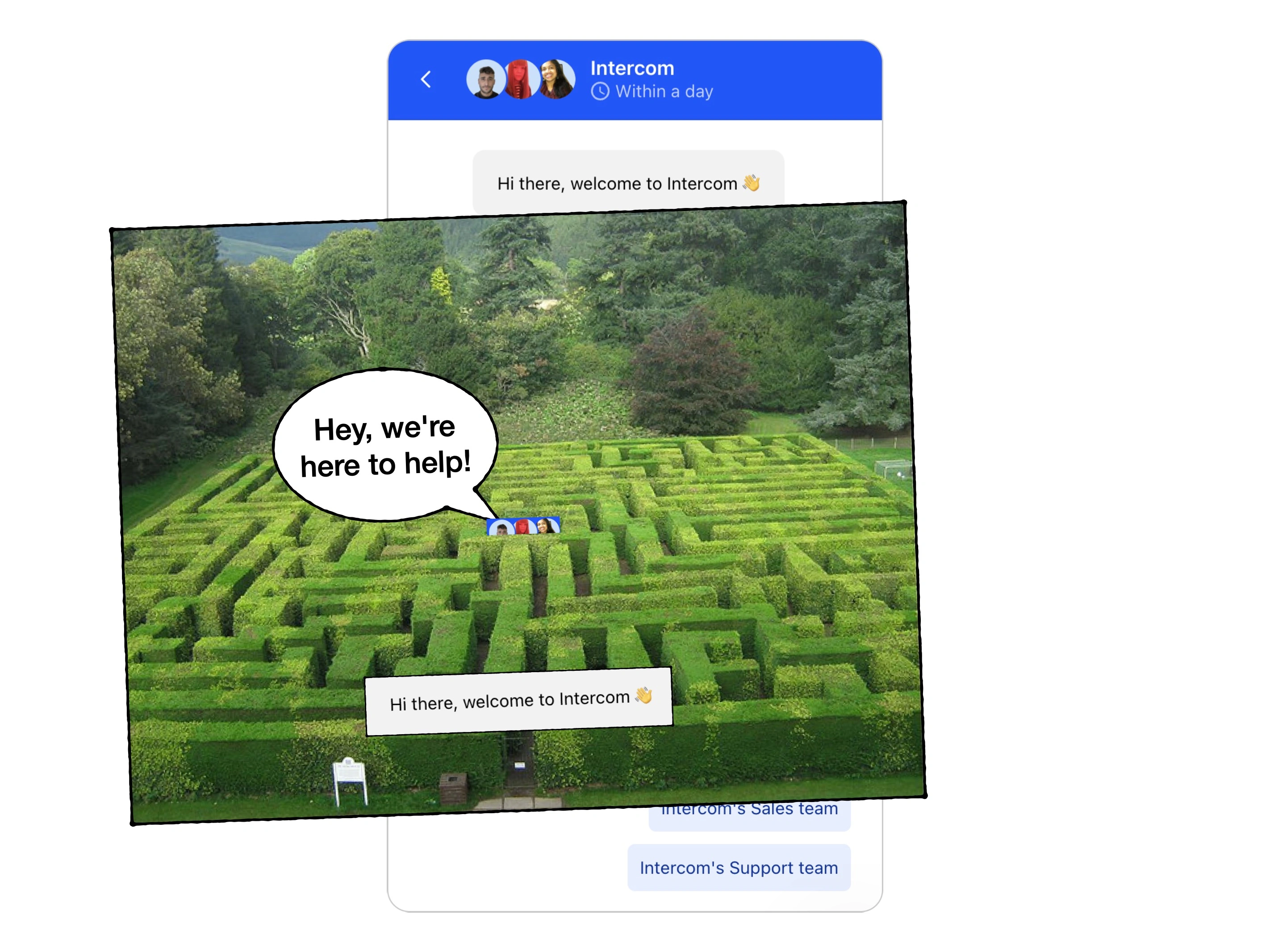

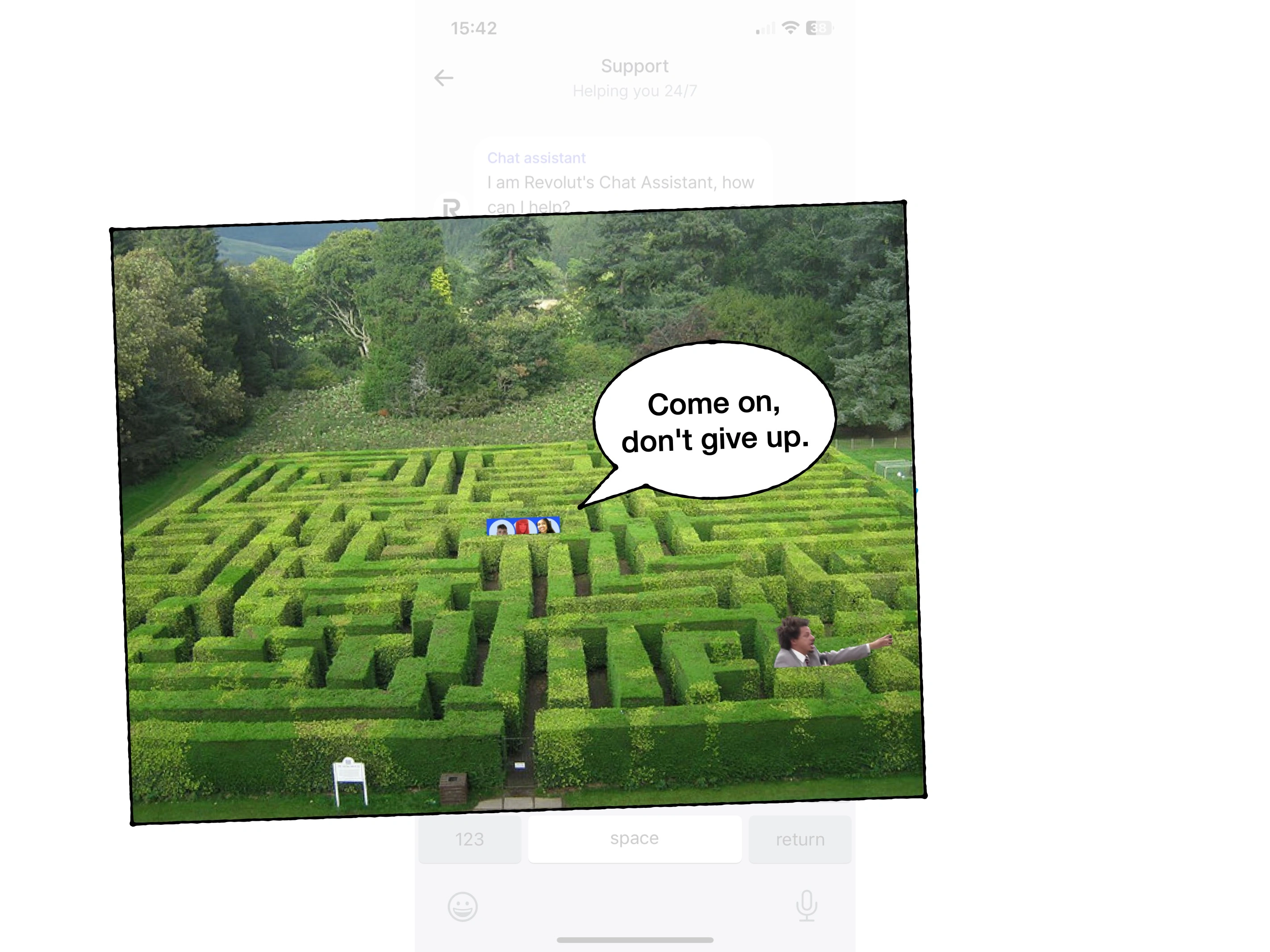

🗺

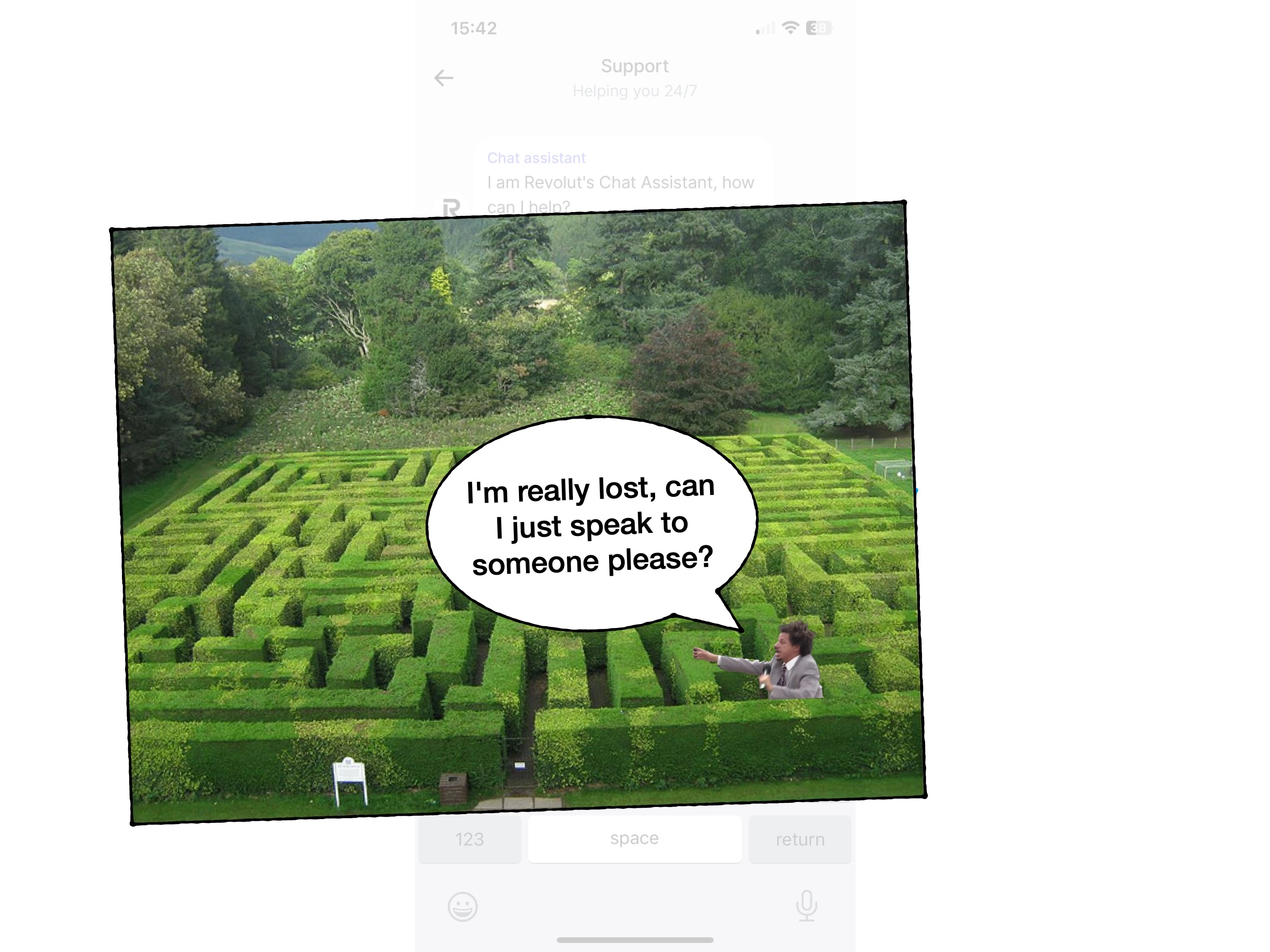

Am I currently navigating a decision maze?

i.e., are my answers even going to be seen by a human?

⏳

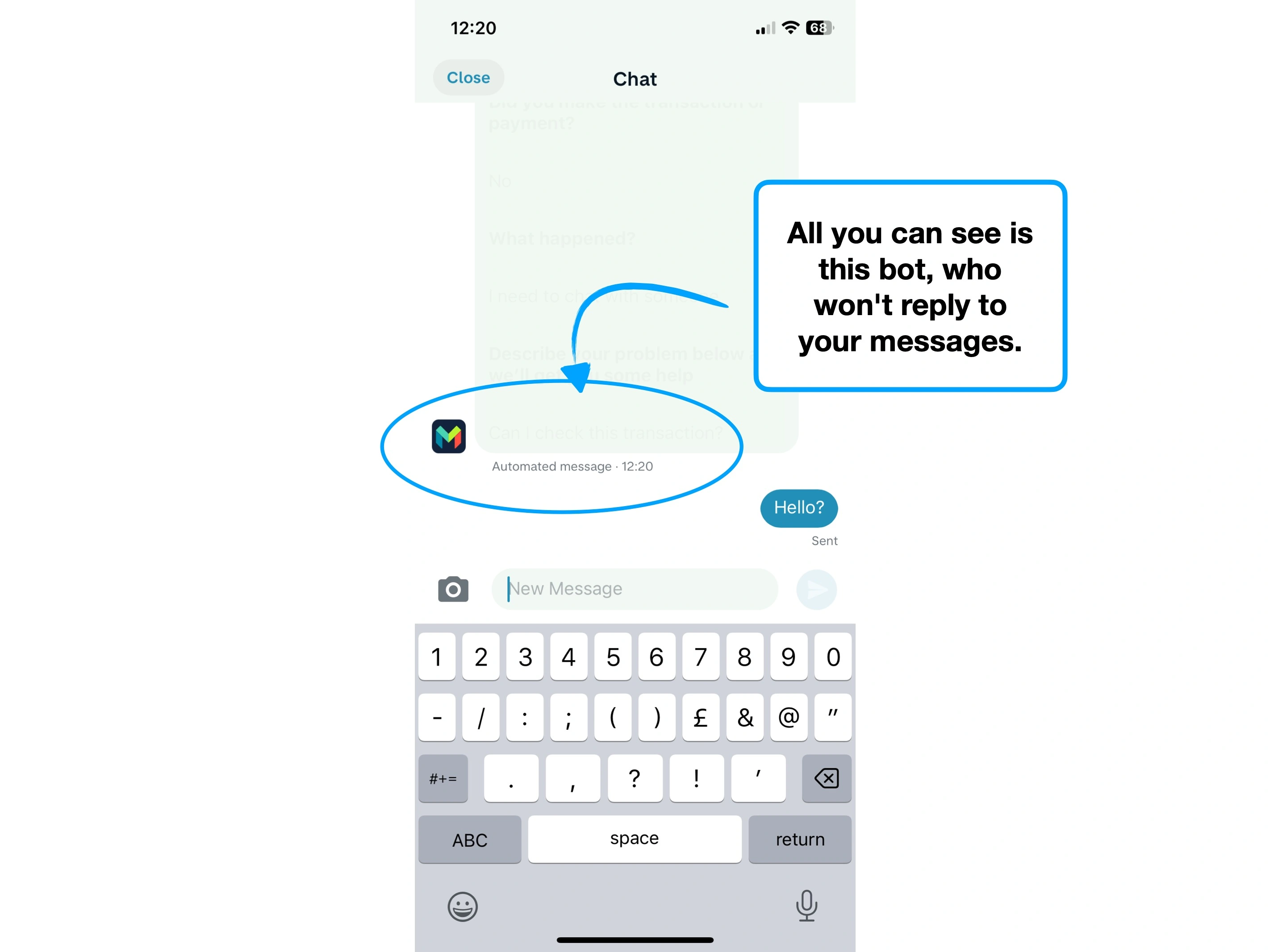

Who (or what) am I waiting for?

Is a human joining, or is a bot "thinking"?

✅

Are you functional enough to solve my issues?

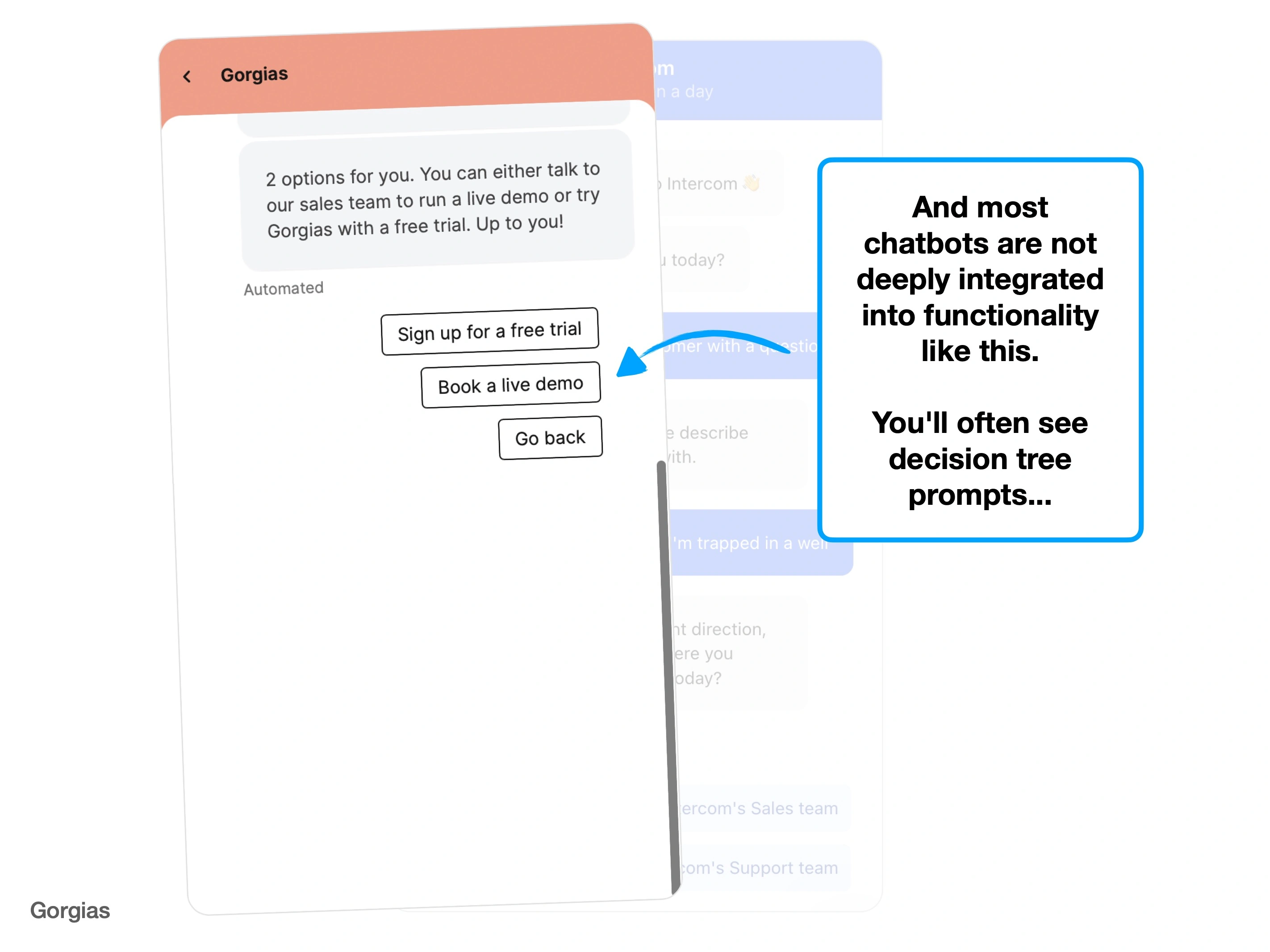

i.e., is this chatbot actually functional, or just conversational?

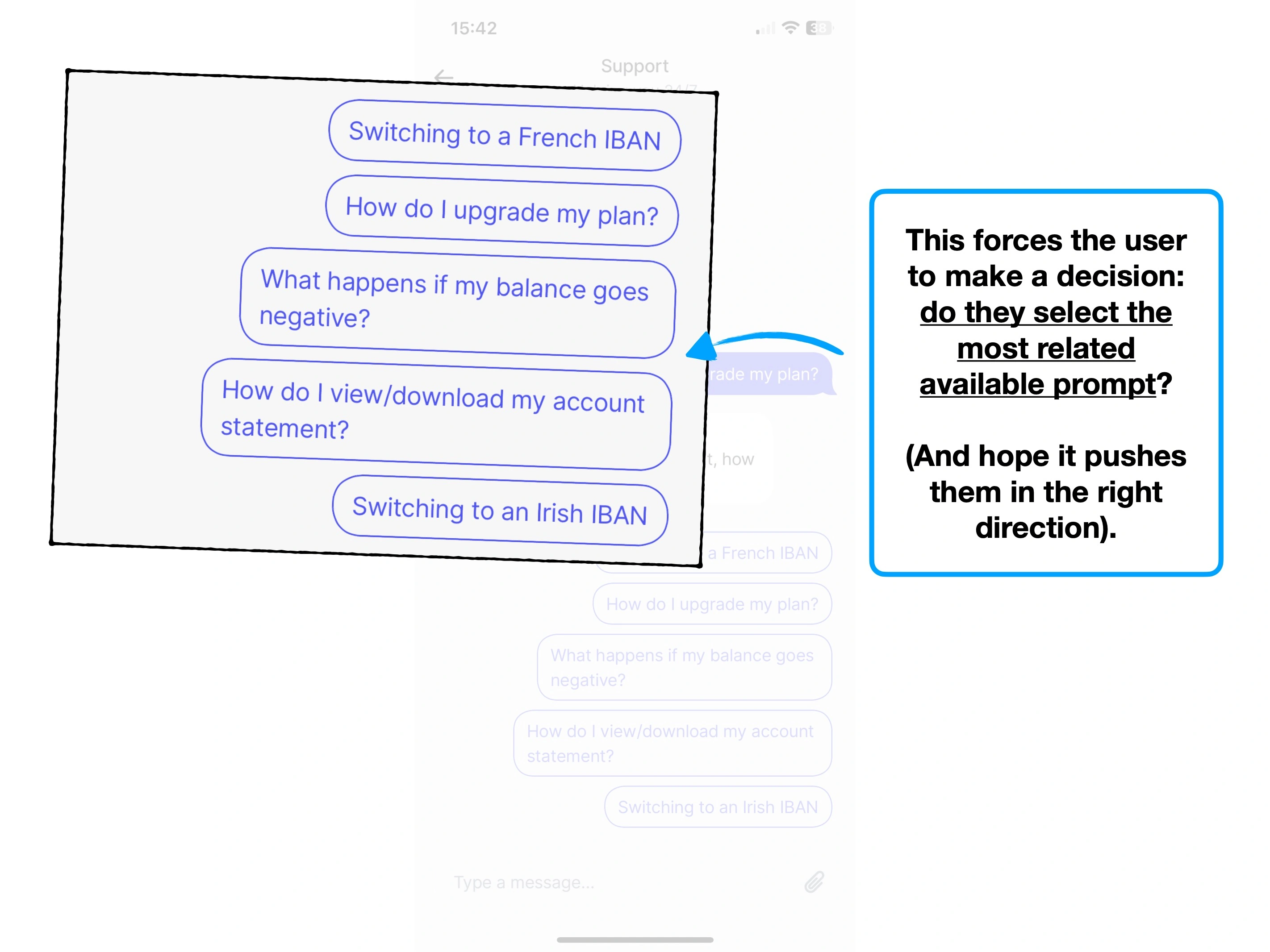

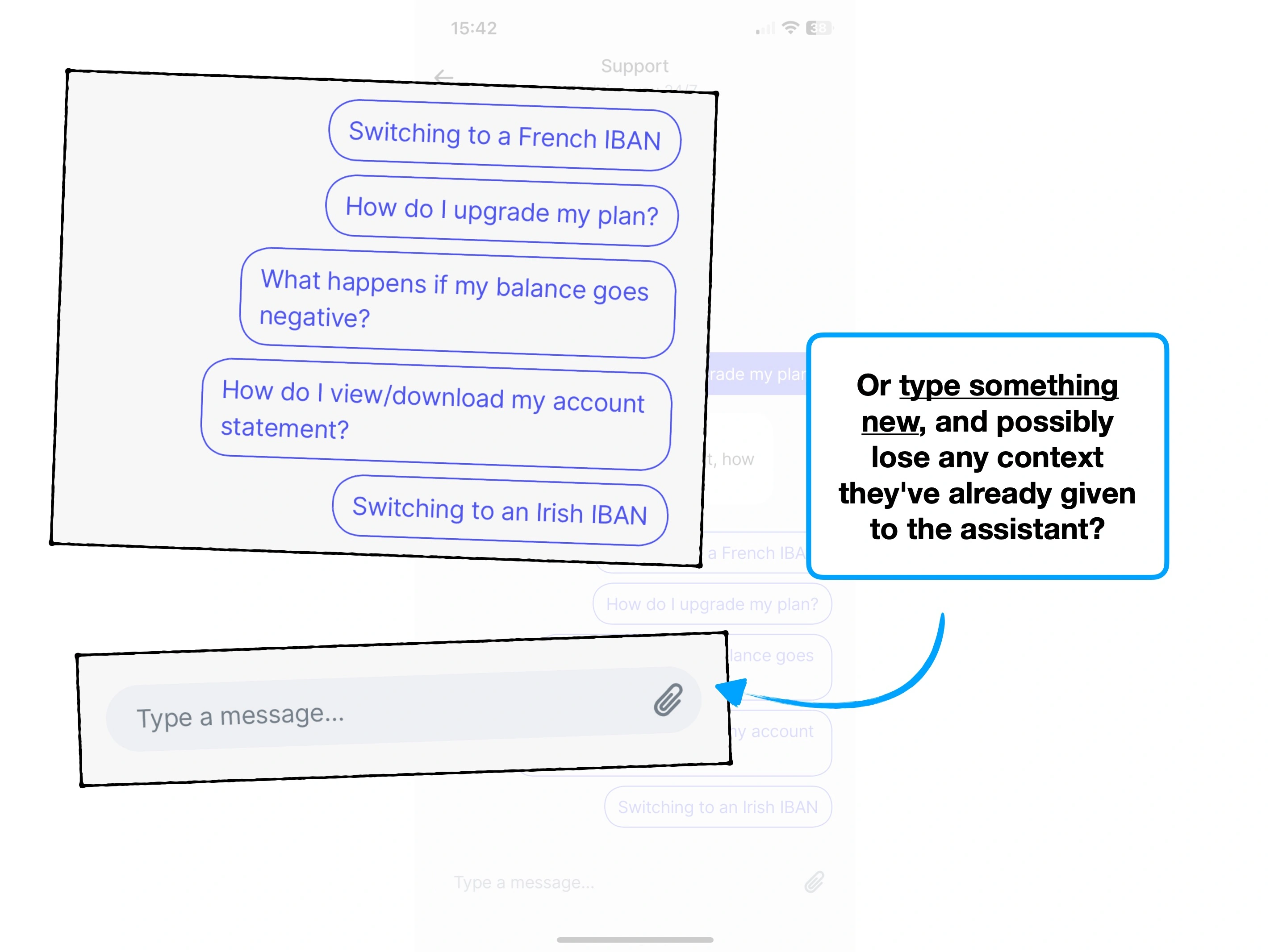

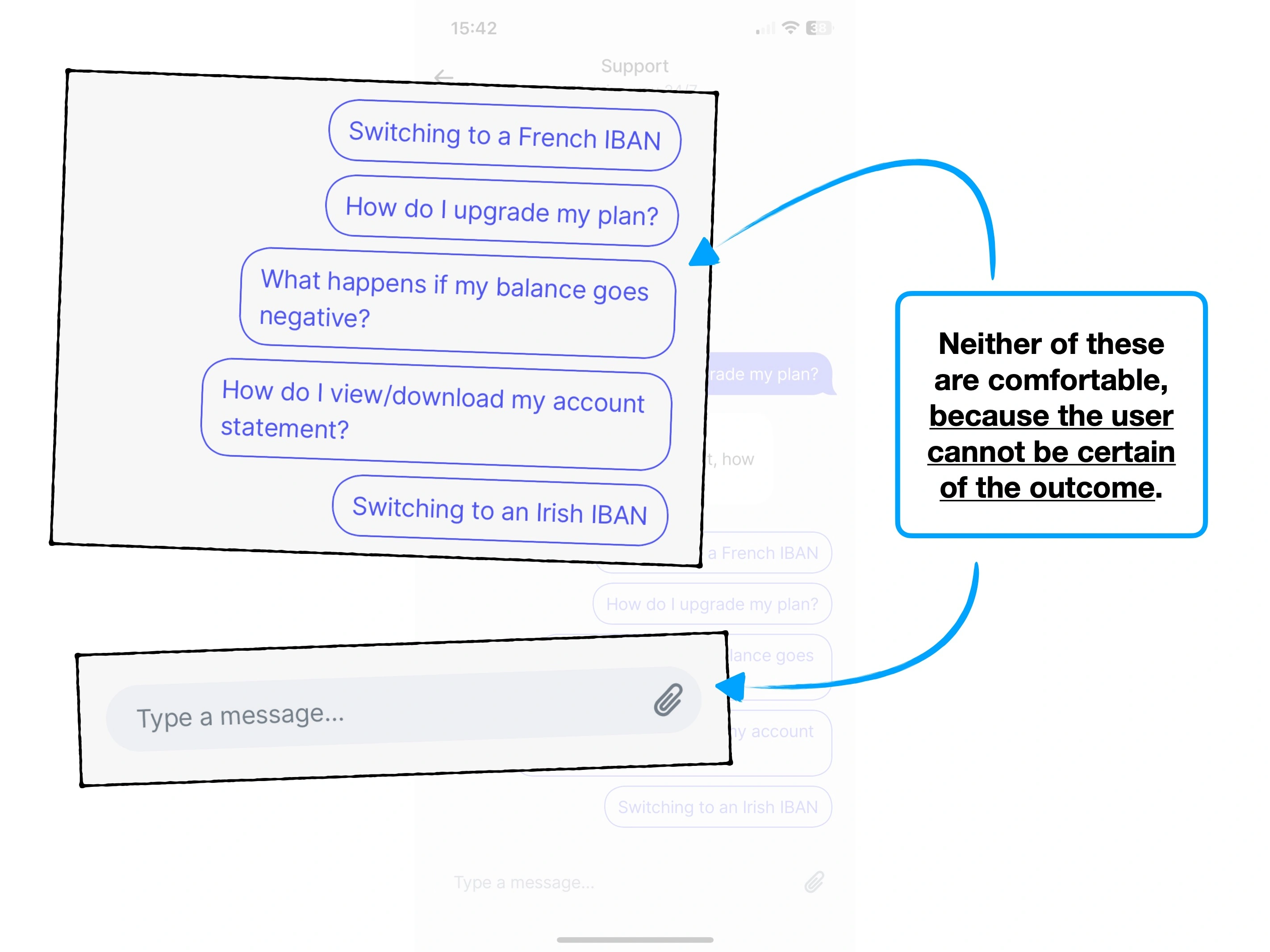

Instead of using a dashboard to solve your own problems, you're now conversing with a bot, possibly trying to reverse engineer the prompts required to actually get through to a real person.

This isn't a reason not to use chatbots, but it is a reason to set realistic expectations for the user.

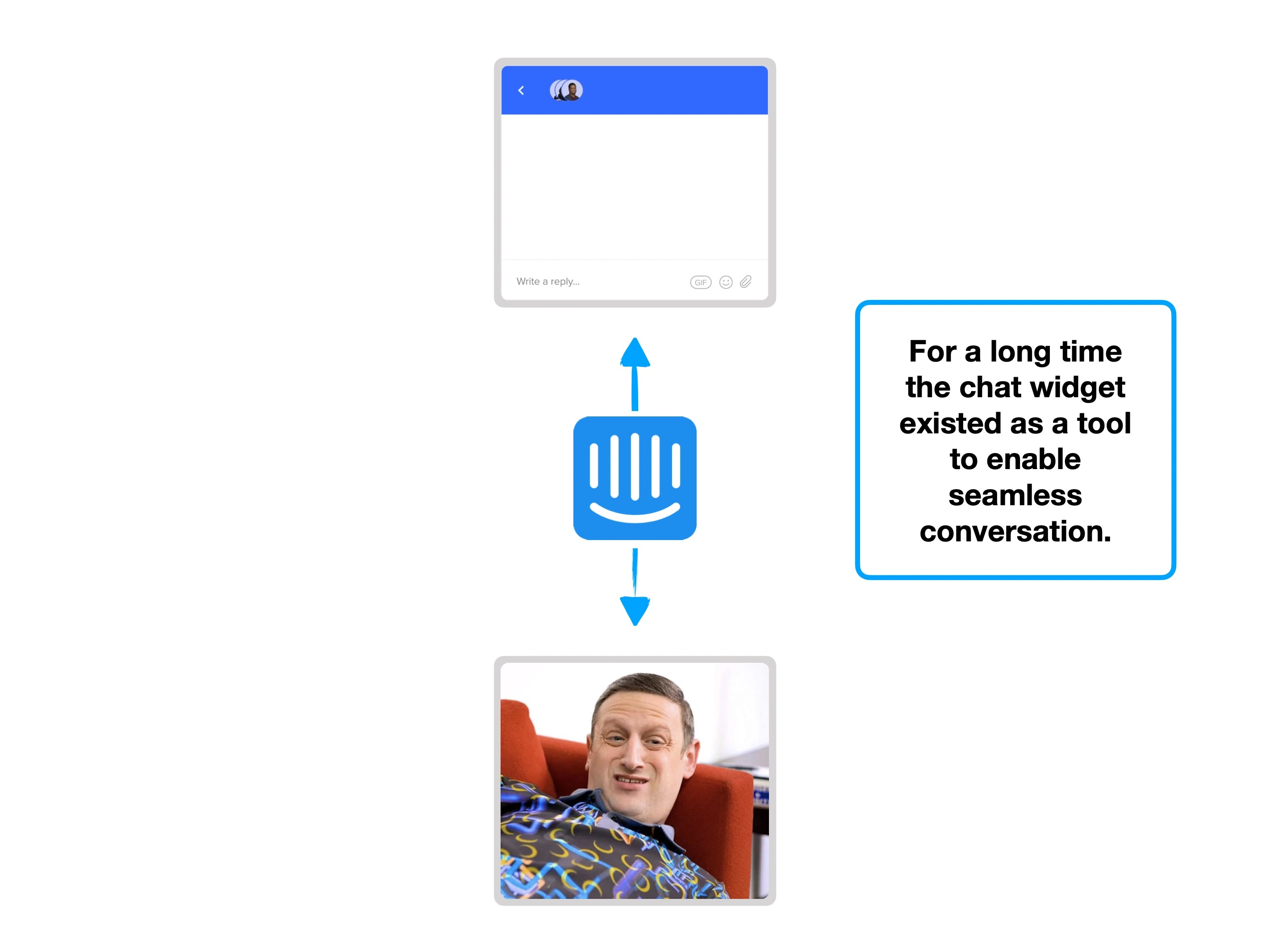

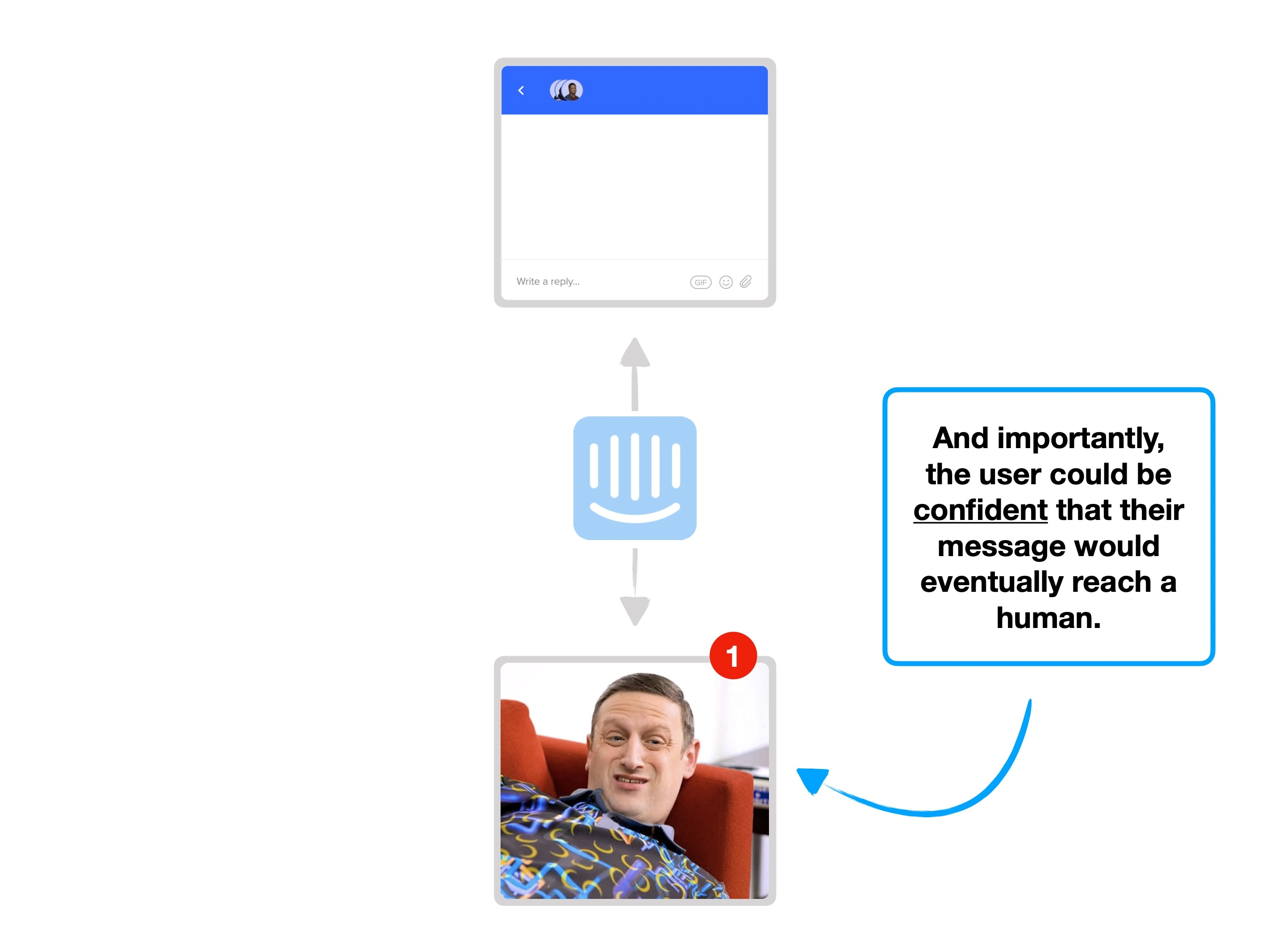

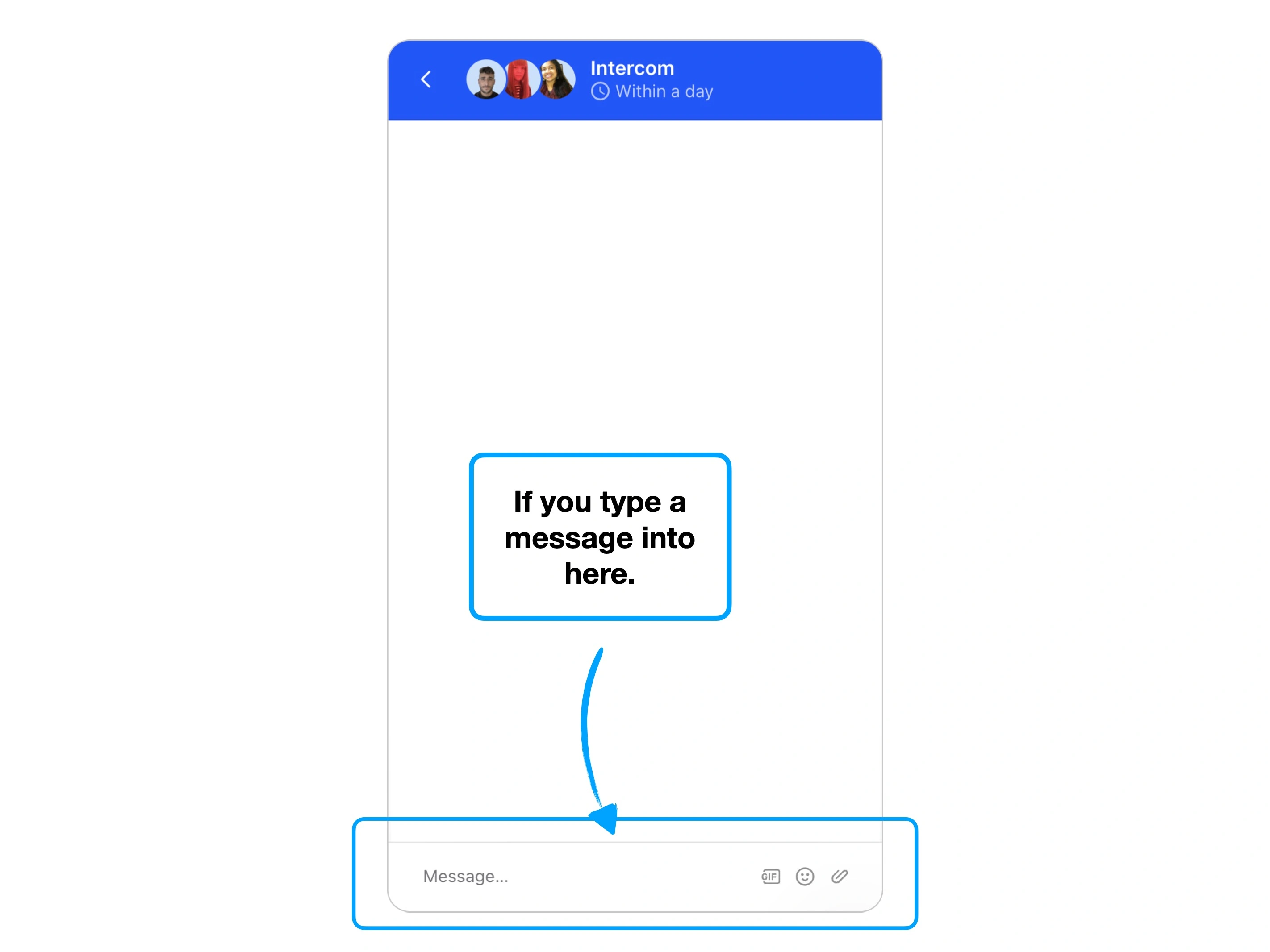

2. An evolving expected value

However patient you are while queuing at a food truck, you'd be far less happy if you didn't even know what type of food they served.

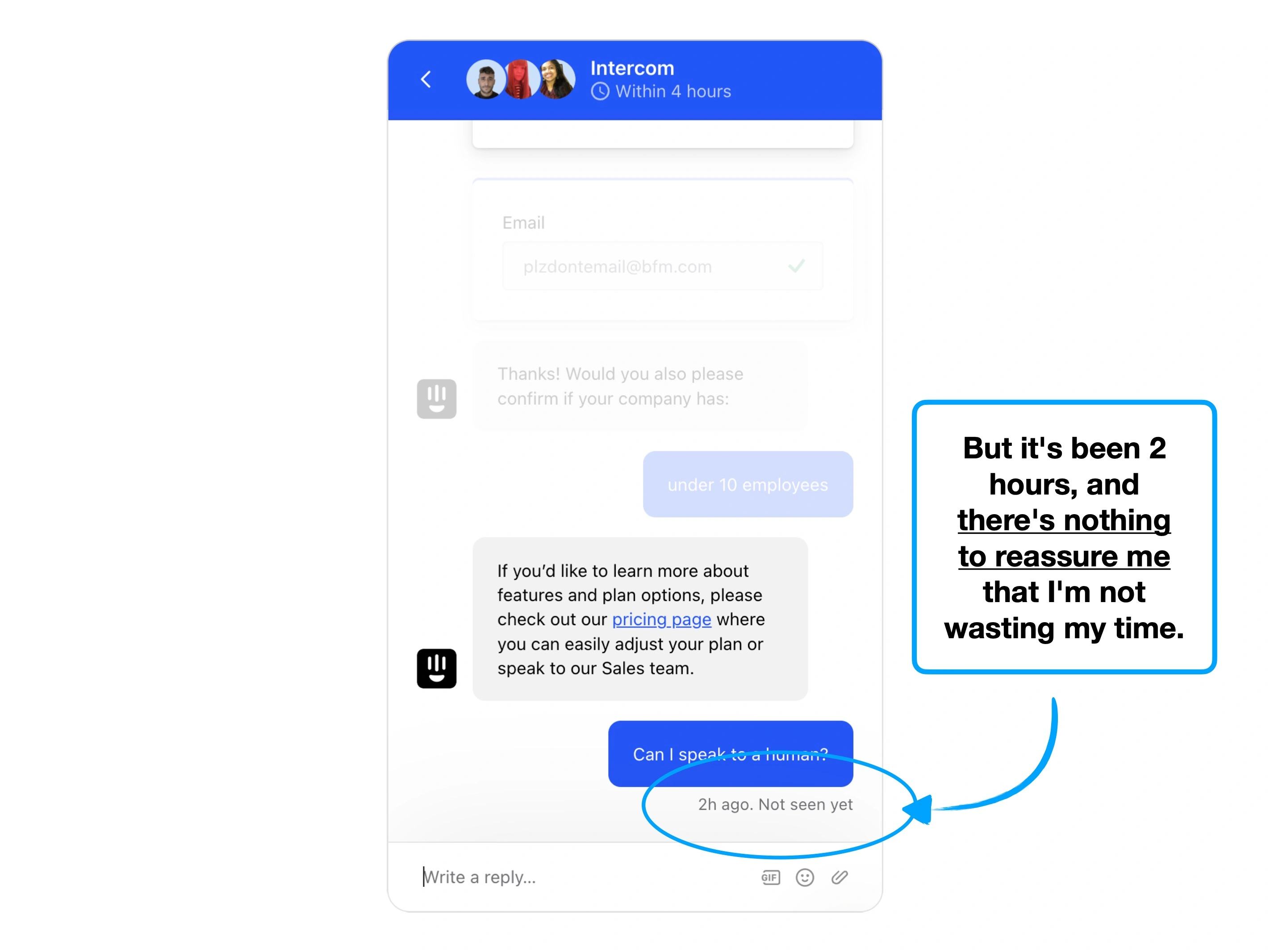

You're subconsciously weighing up the cost of waiting (your time) against the expected value (whatever it is you're waiting for).

For short waits (e.g., a page loading), you're primarily concerned with the ⚡️ Doherty Threshold. But when expecting the user to wait for longer, the biggest issue is actually the concern that the user won't get what they want at the end of it.

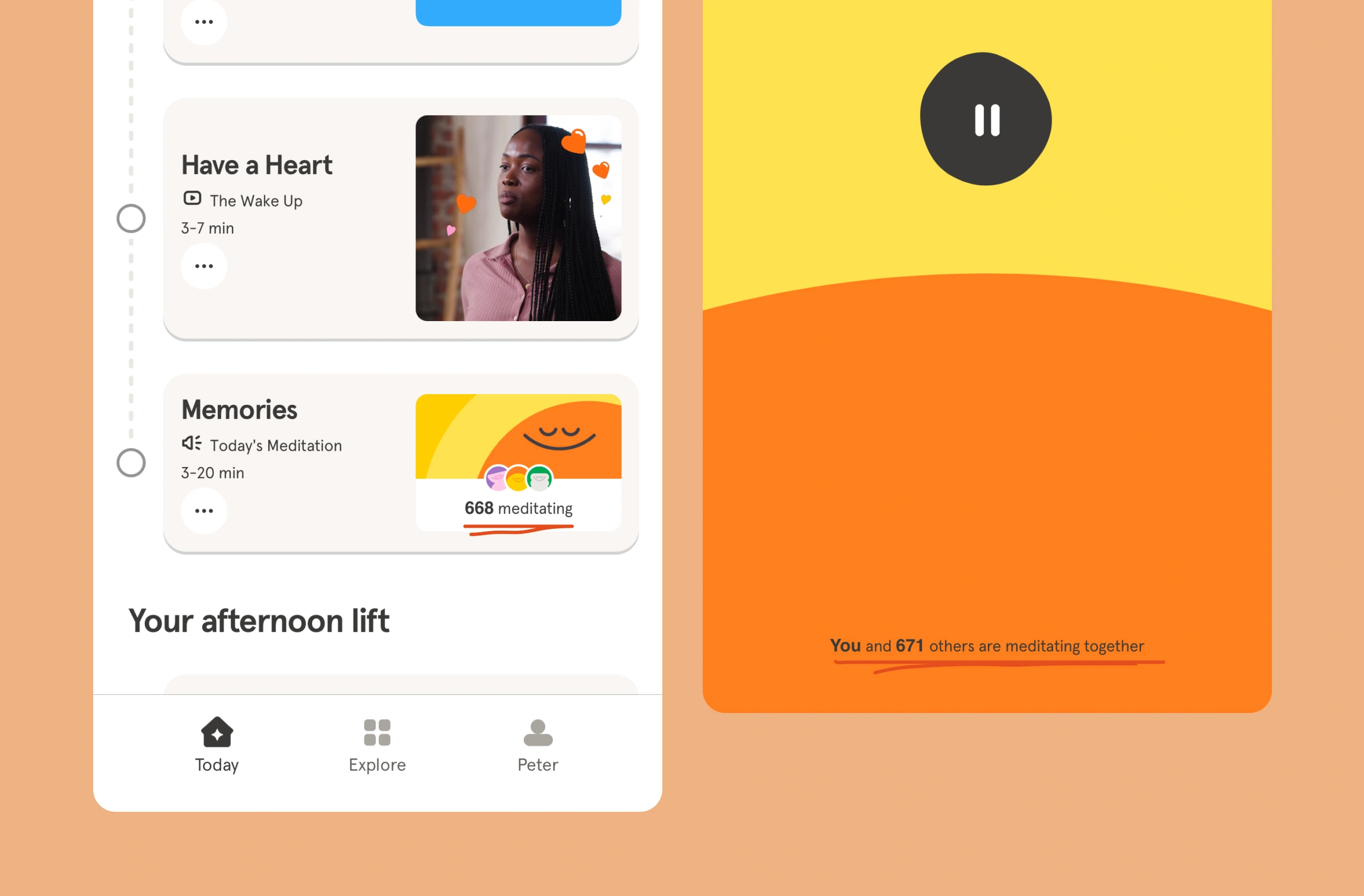

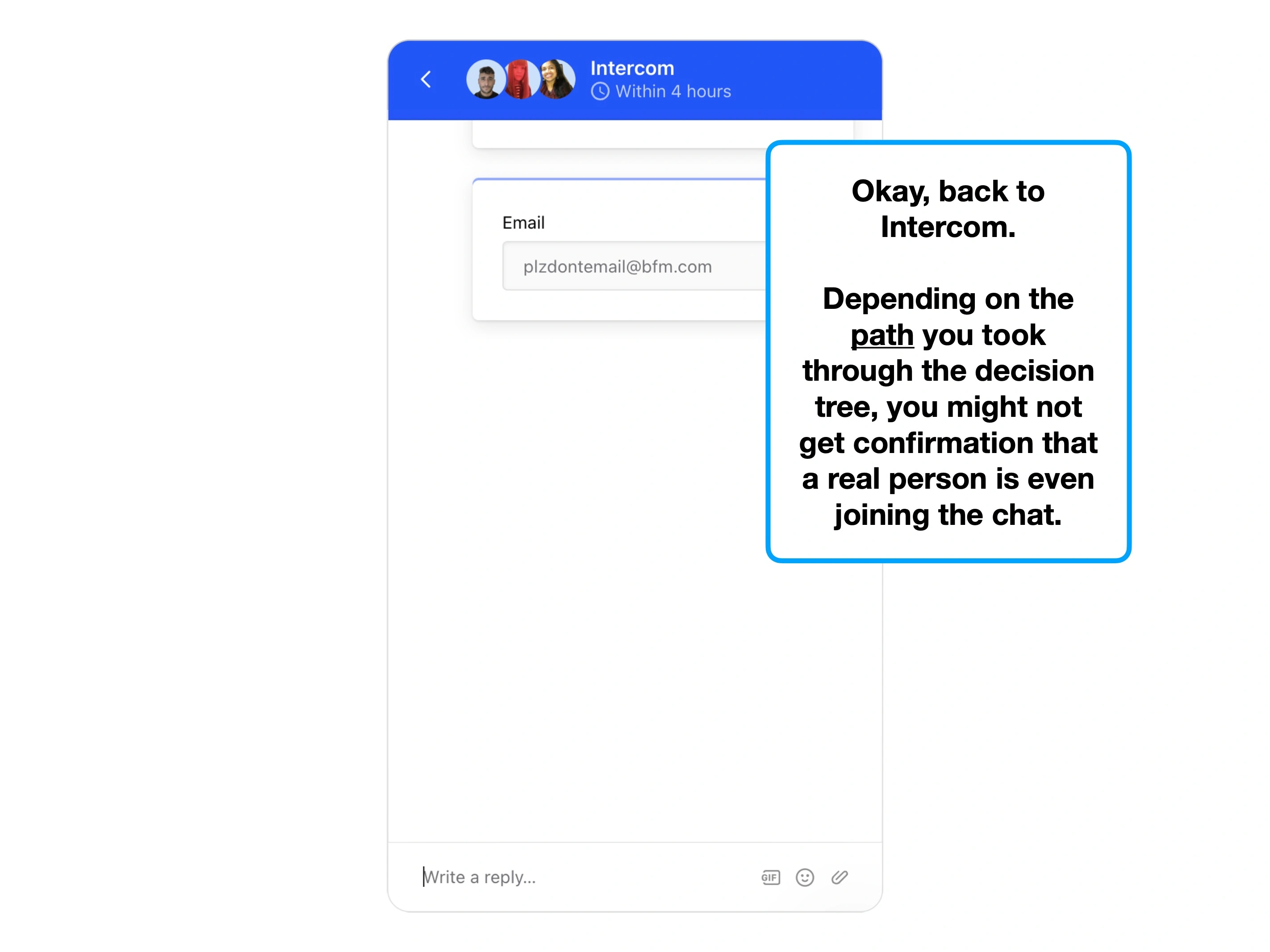

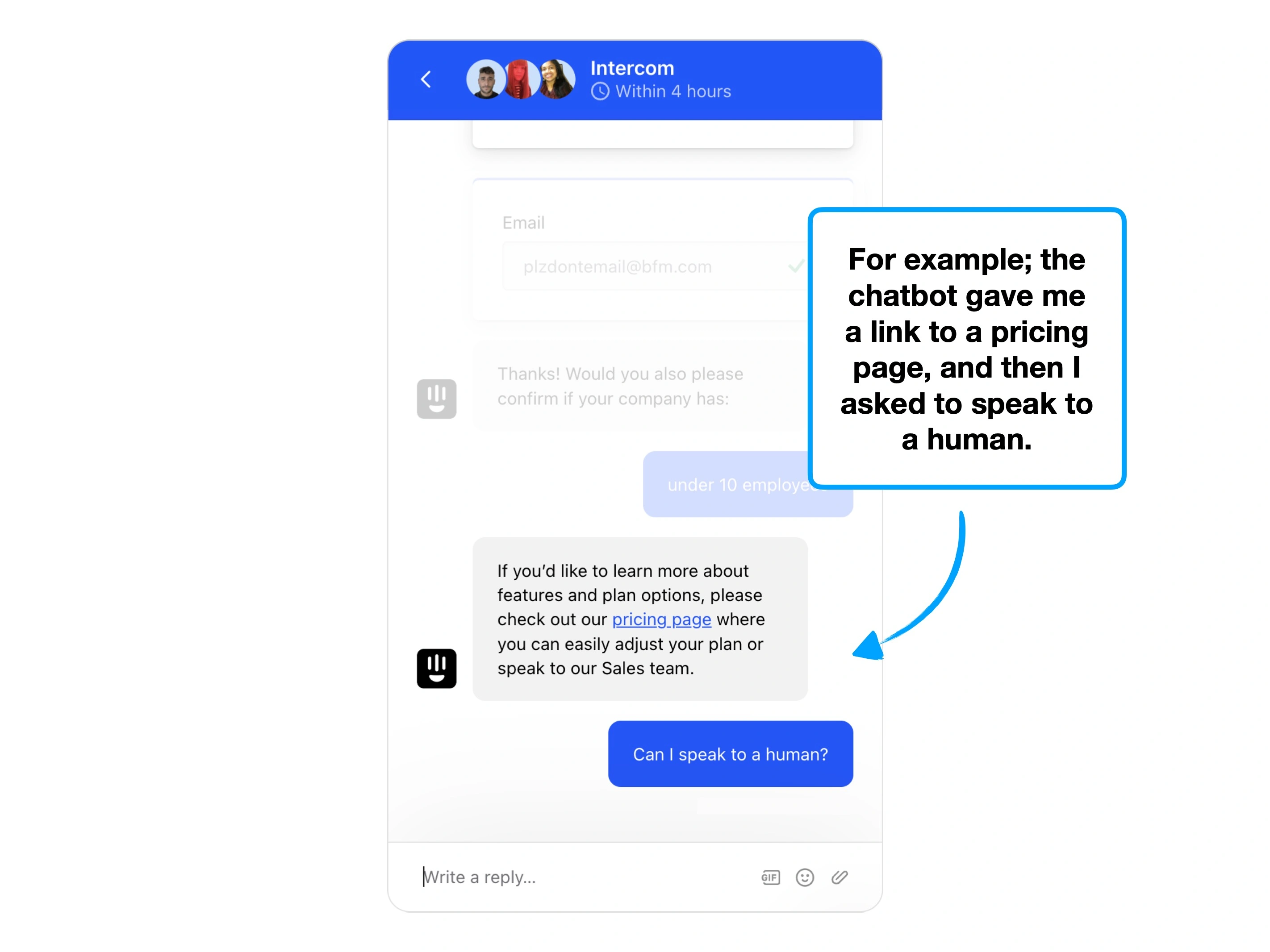

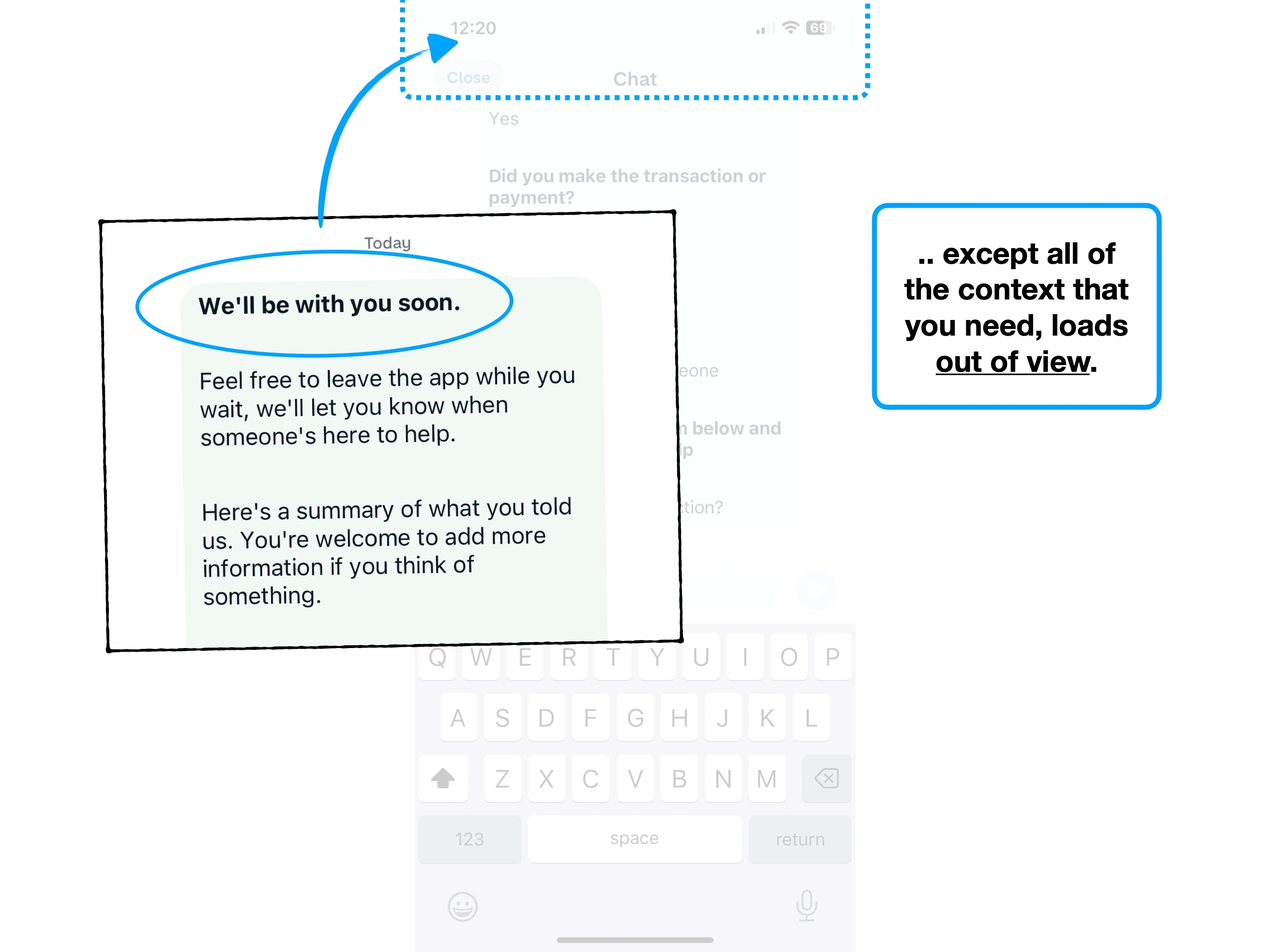

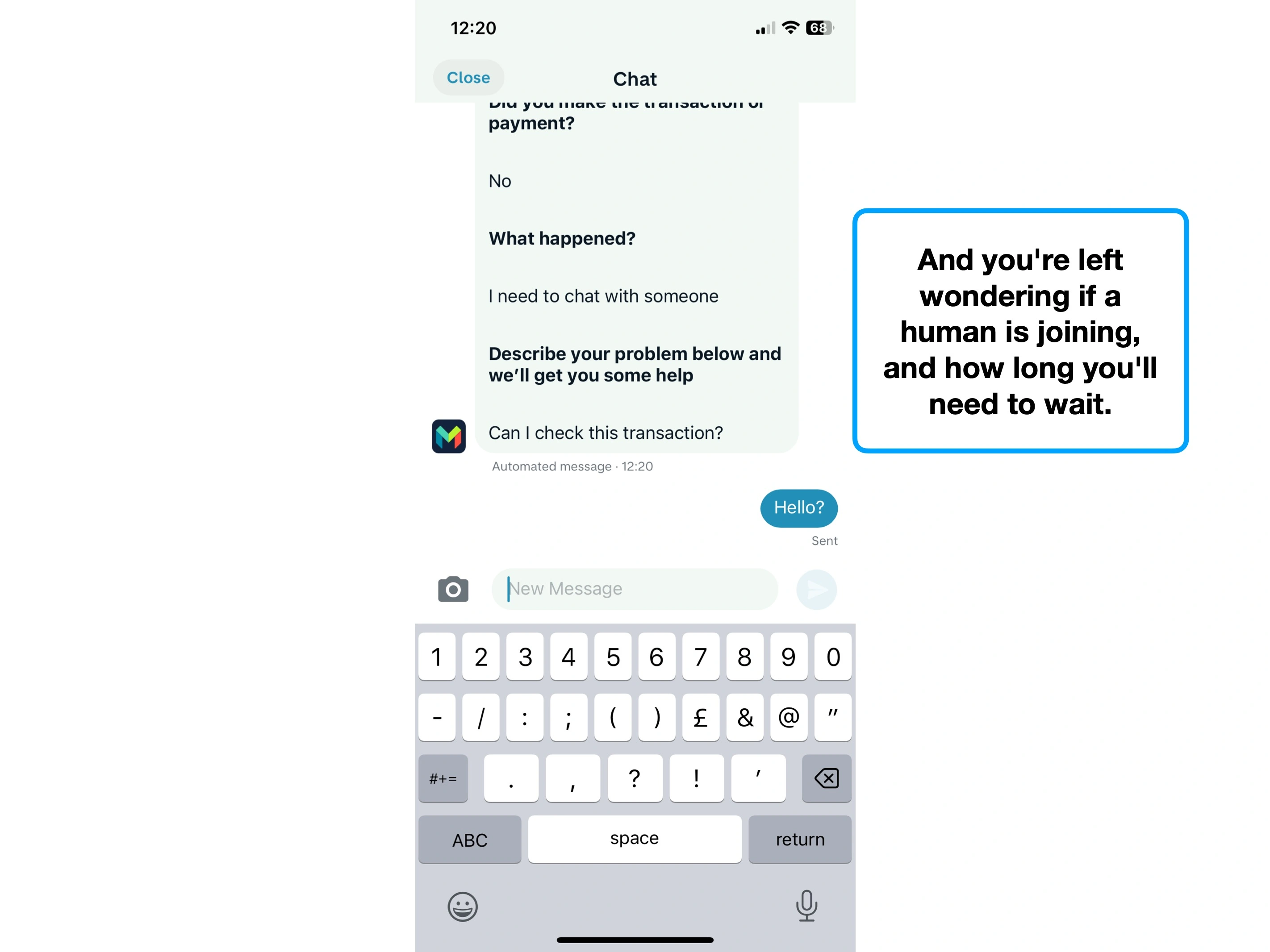

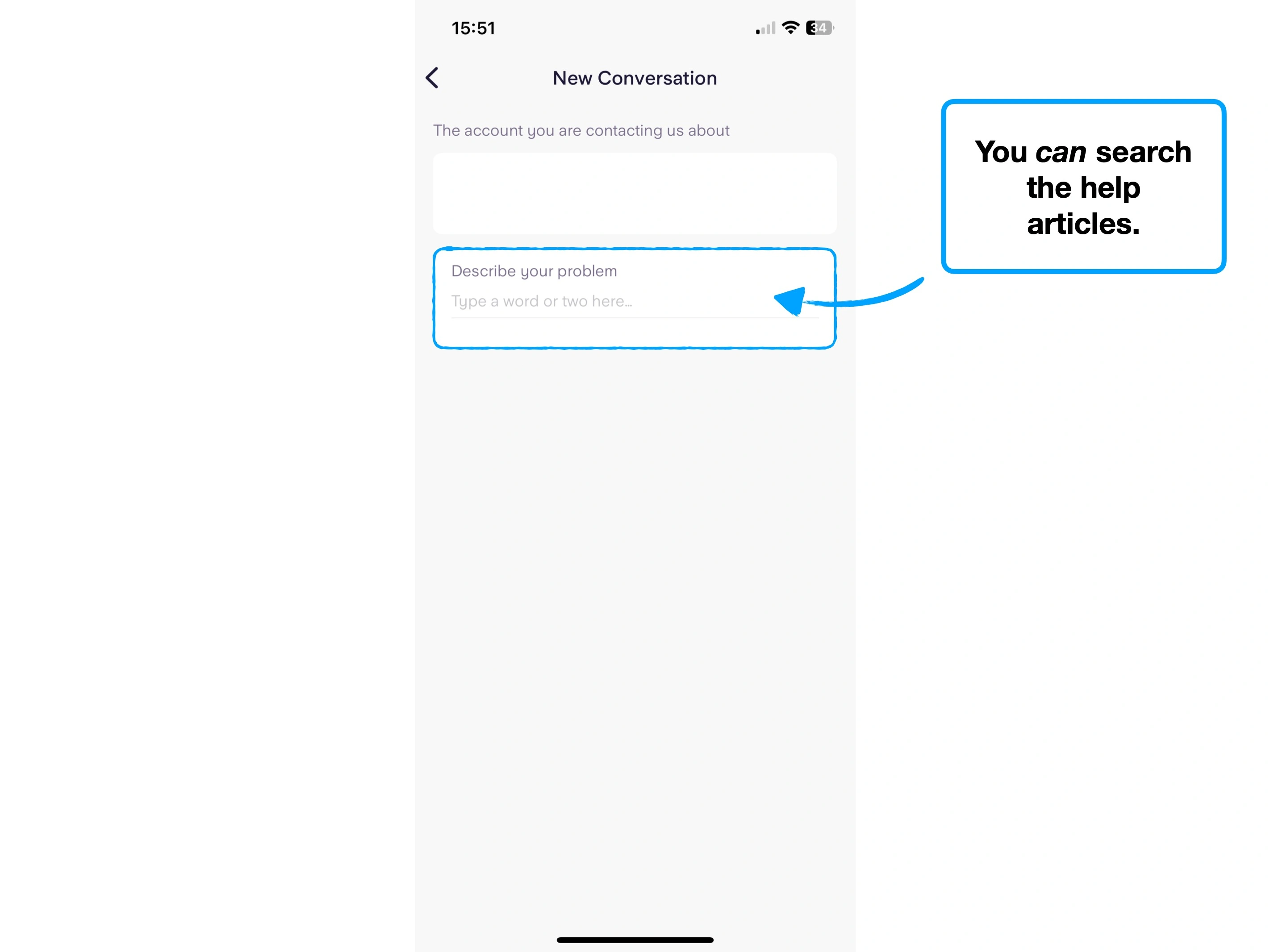

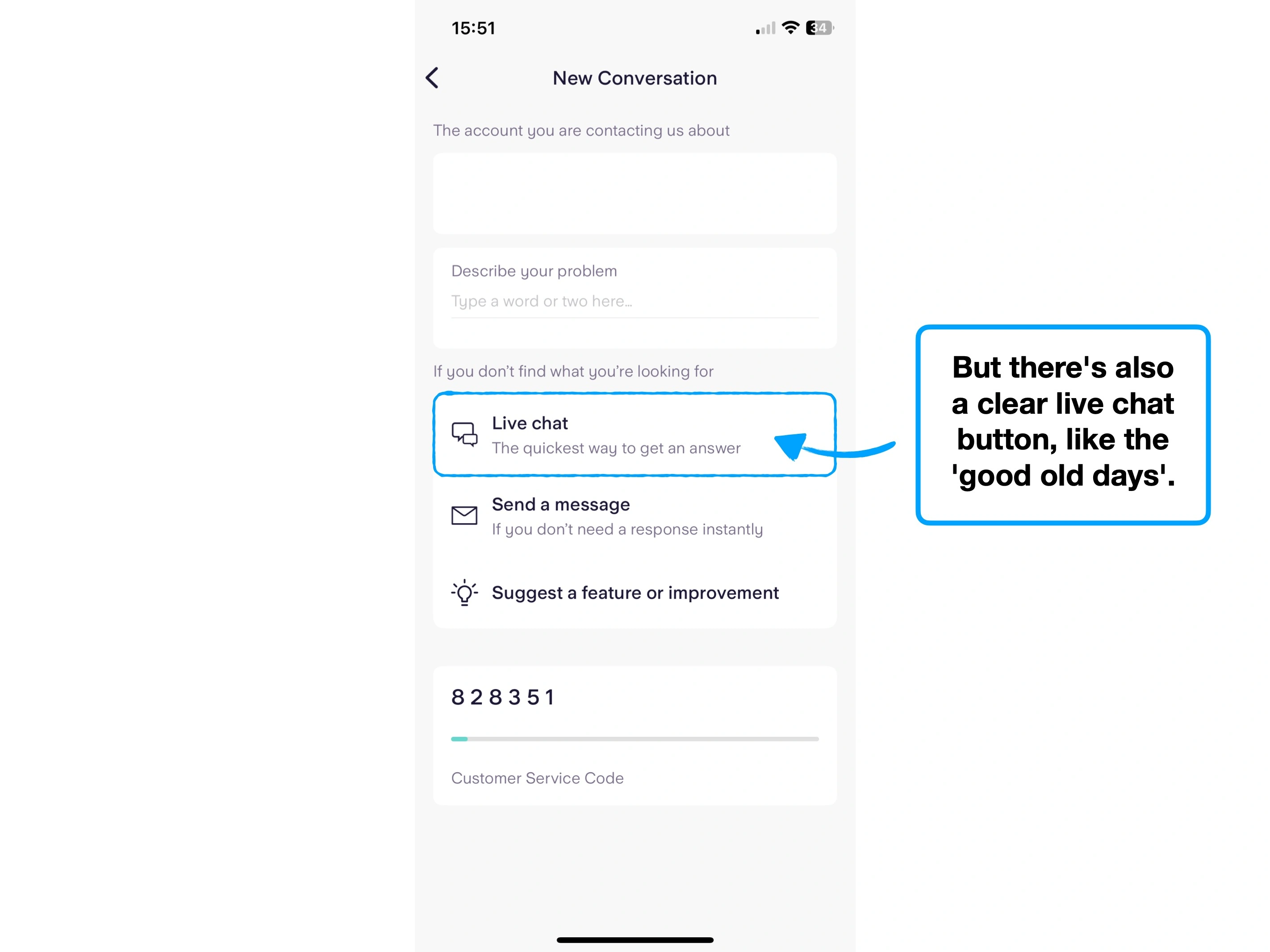

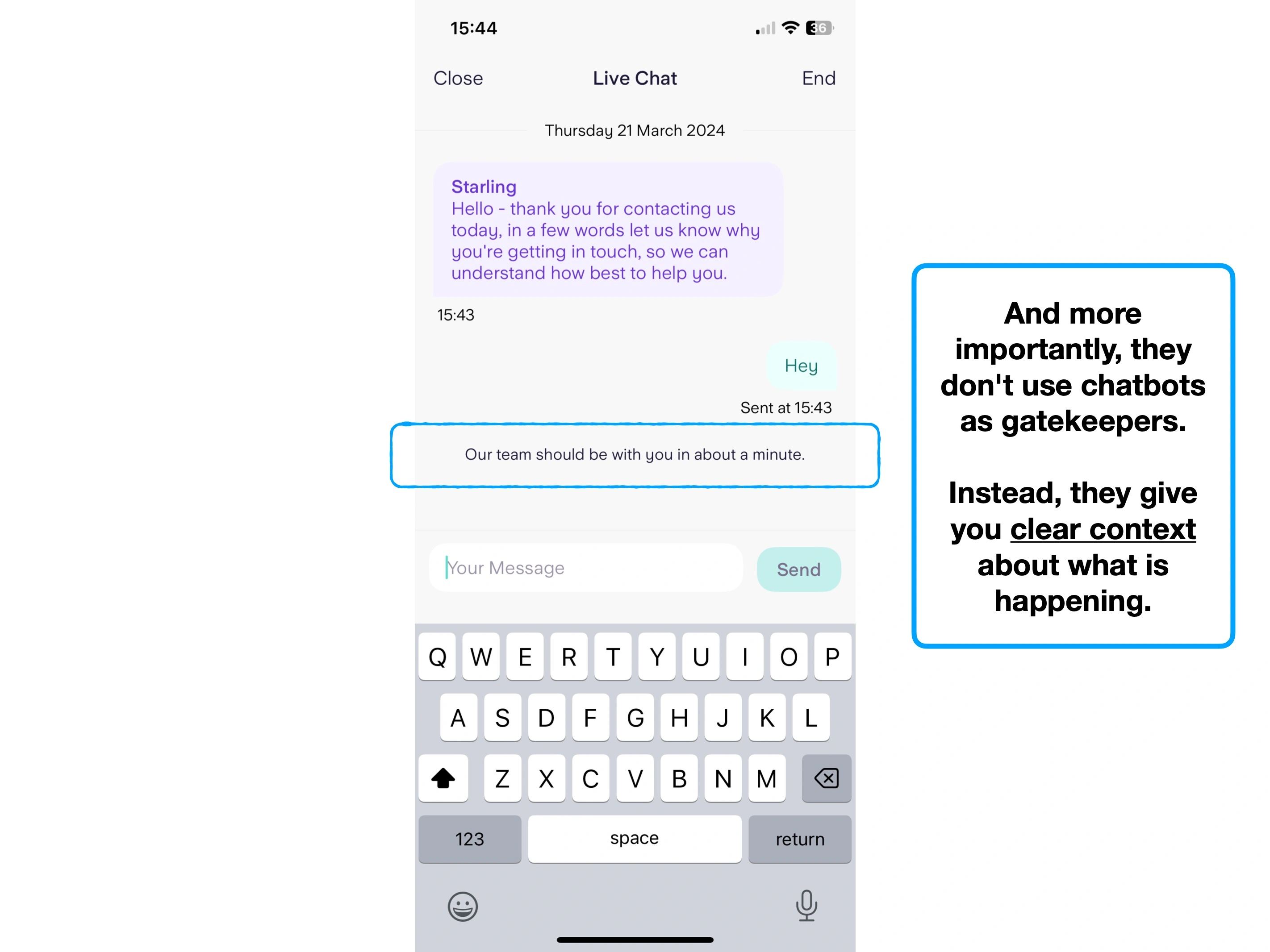

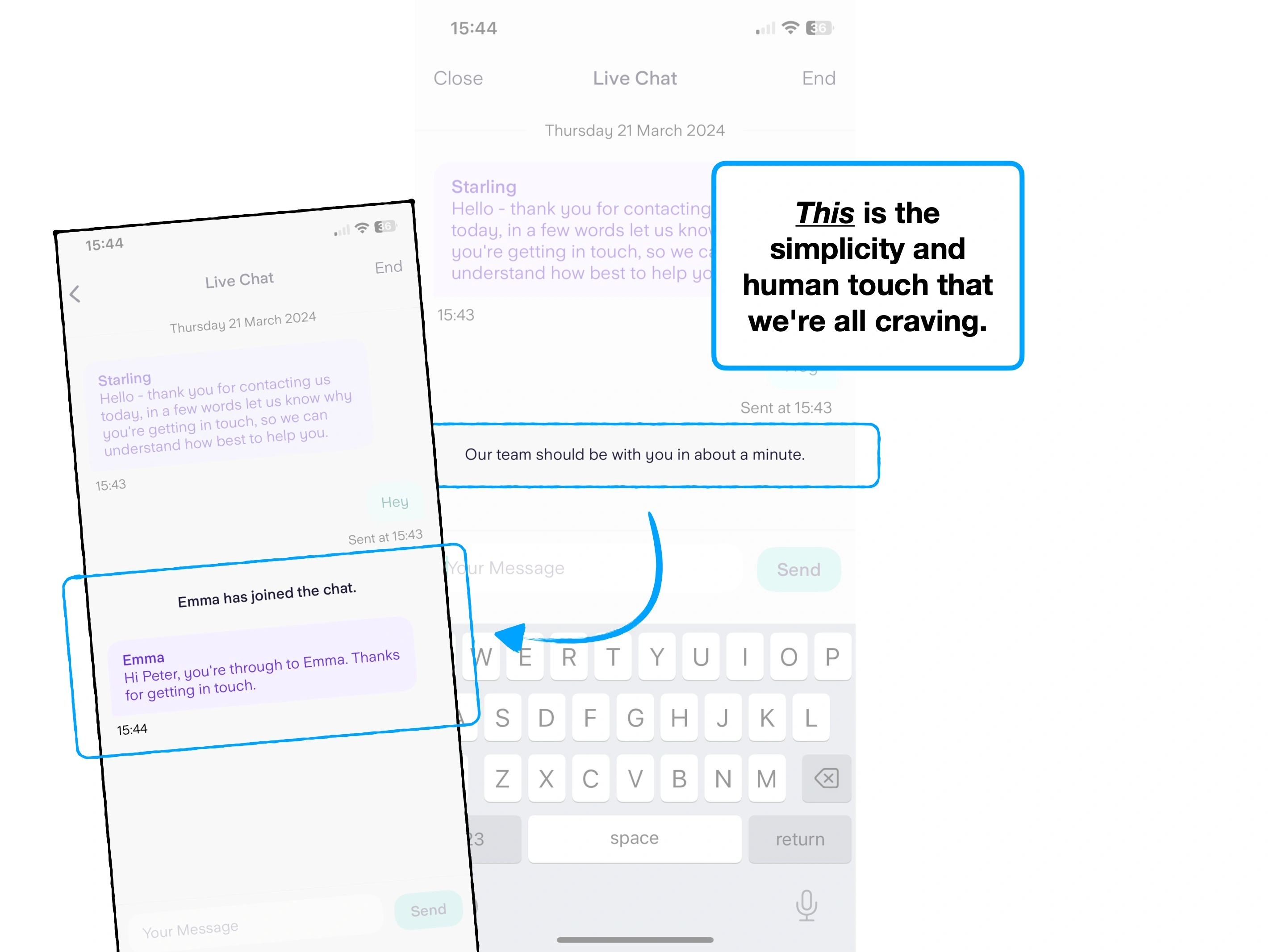

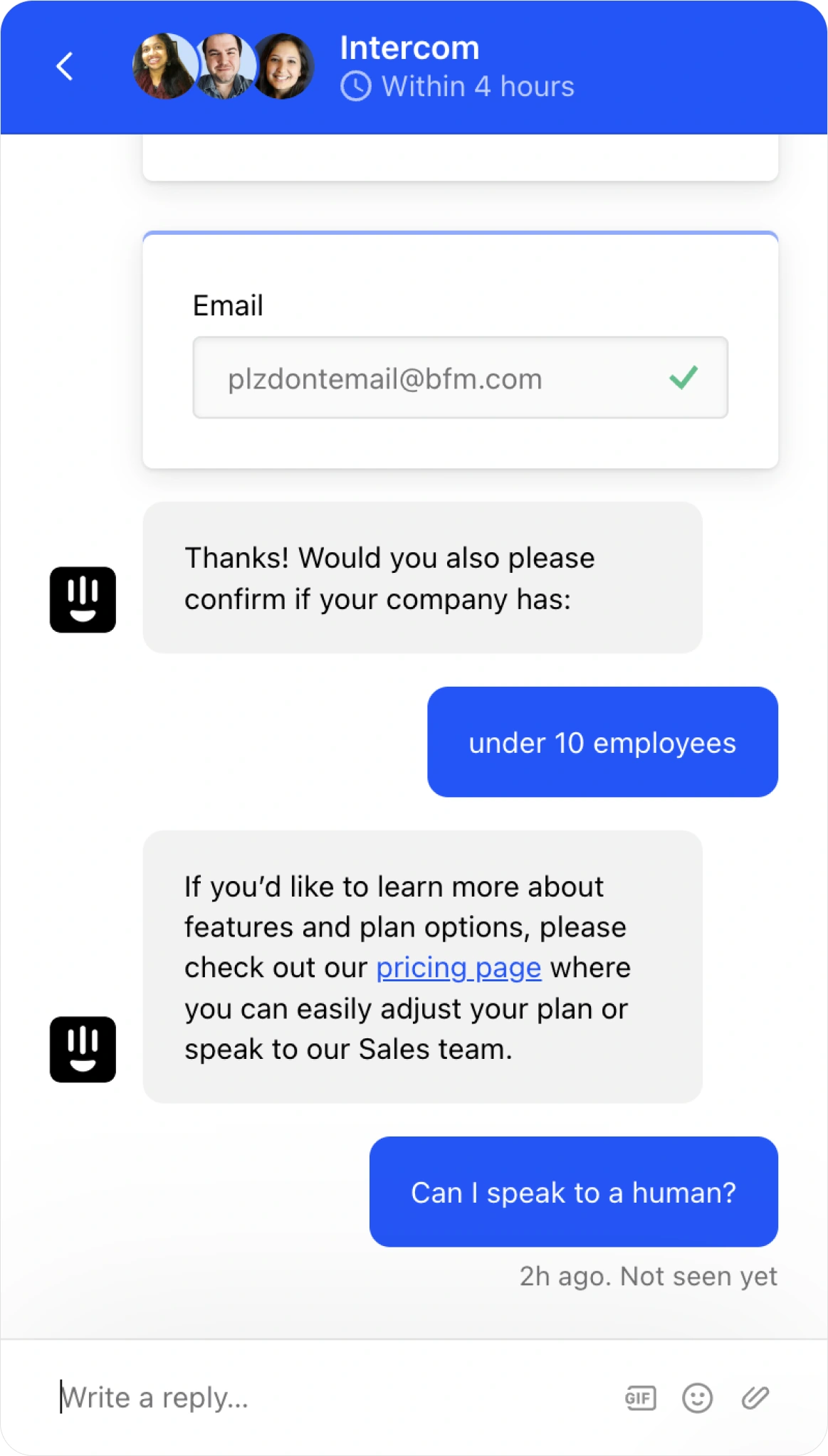

For example, while waiting for a customer service agent to join a live chat, this may be what you see:

In each of these instances, you want to be reminding the user what they're waiting for, and reassuring them that the wait isn't futile.

Or rather: if you were looking at these two screens, how would you know that you haven't been disconnected?

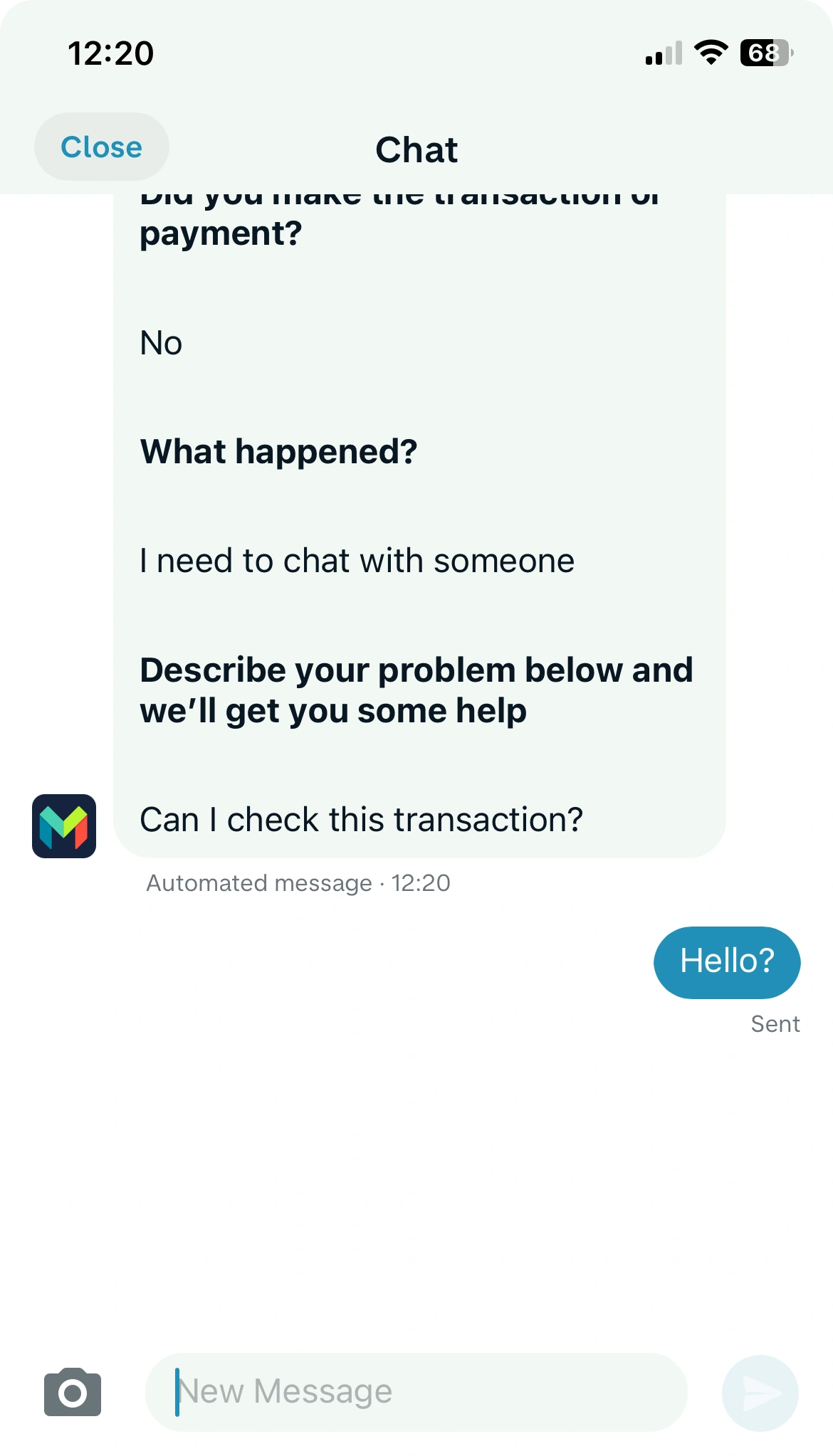

This principle is true for dashboards, data exports and even train station signage.

For example, when exporting data from Stripe, they'll clearly label what's happening, and how long it's expected to take.

Two very small details that'd be easy to overlook.

But without that clarity and confirmation (of what's happening), you'd find the wait more painful.

Especially if the progress bar stopped moving for a while.
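To make that concrete, here's a rough sketch of a status line that always names what the user is waiting for, gives an estimate, and adds reassurance once progress appears to have stalled. The function name, the parameters and the 20-second stall threshold are all assumptions for illustration; this isn't how Stripe (or anyone else) actually does it.

```python
def wait_message(waiting_for: str, elapsed_s: int, expected_s: int,
                 last_progress_s: int = 0, stall_after_s: int = 20) -> str:
    """Build a status line that reminds the user what they're waiting for.

    All names and thresholds here are hypothetical, chosen for illustration.
    """
    remaining = max(expected_s - elapsed_s, 0)
    if remaining > 0:
        minutes = -(-remaining // 60)  # ceiling division to whole minutes
        estimate = f"about {minutes} min left"
    else:
        # Be honest once the estimate has been missed, rather than going quiet.
        estimate = "taking longer than expected"

    message = f"{waiting_for}: {estimate}."

    # If nothing has visibly changed for a while, confirm the wait isn't futile.
    if elapsed_s - last_progress_s >= stall_after_s:
        message += " Still connected, you haven't lost your place."
    return message


print(wait_message("Connecting you to a support agent", 30, 150, last_progress_s=25))
print(wait_message("Exporting your data", 200, 150, last_progress_s=100))
```

The design choice is that the message never drops the "what": even when the estimate runs out, the user can still see what they're waiting for and that they haven't been disconnected.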

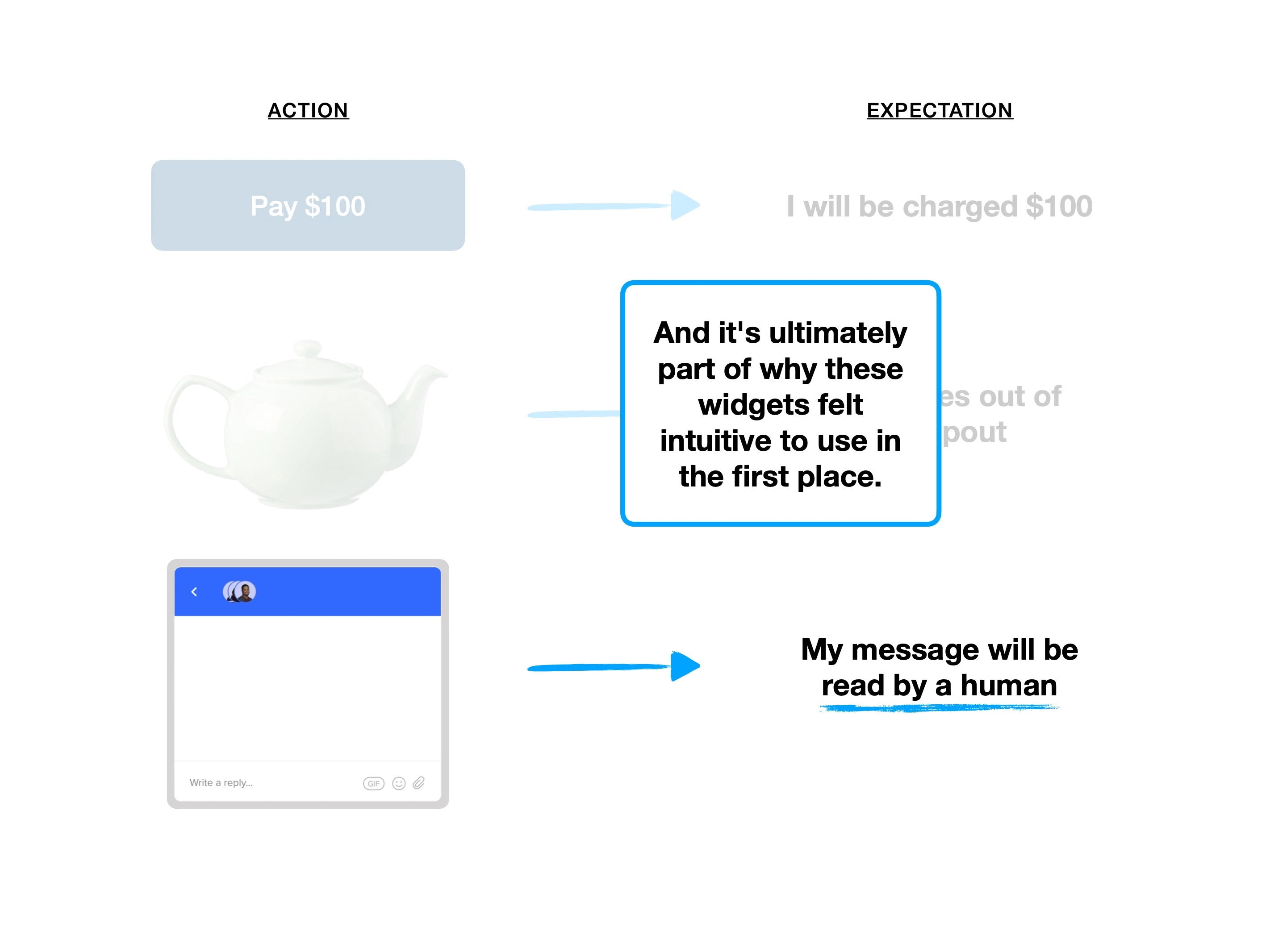

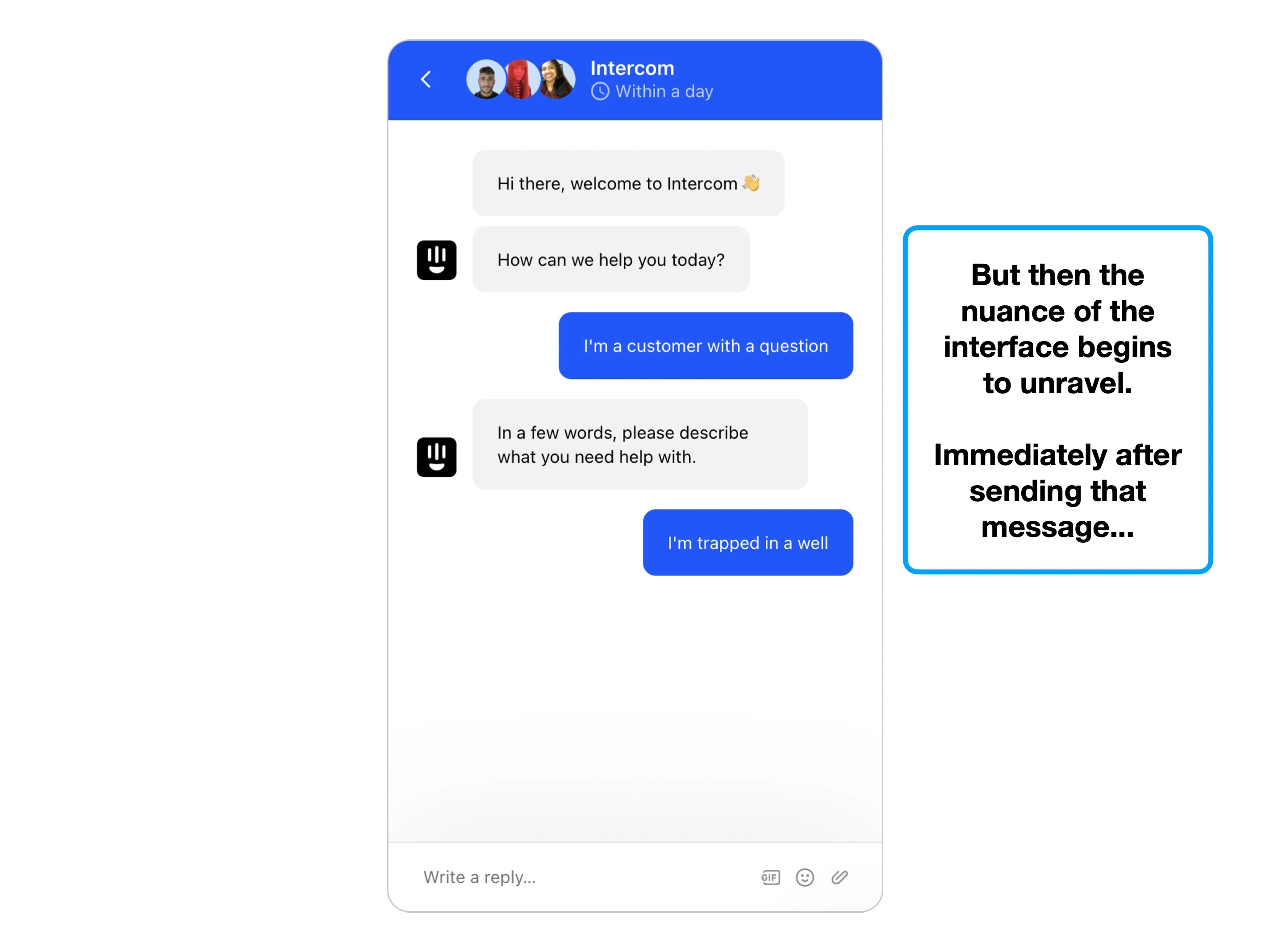

3. The rise of ChatGPT

Initially, the ChatGPT API looked set to usher in a new vanguard of AI-powered chatbots.

But 12 months in, I think we can see that a lot of the friction that was removed from businesses has instead been pushed onto customers.

i.e., a higher 🧠 Cognitive Load, longer digestion times and more uncertainty.

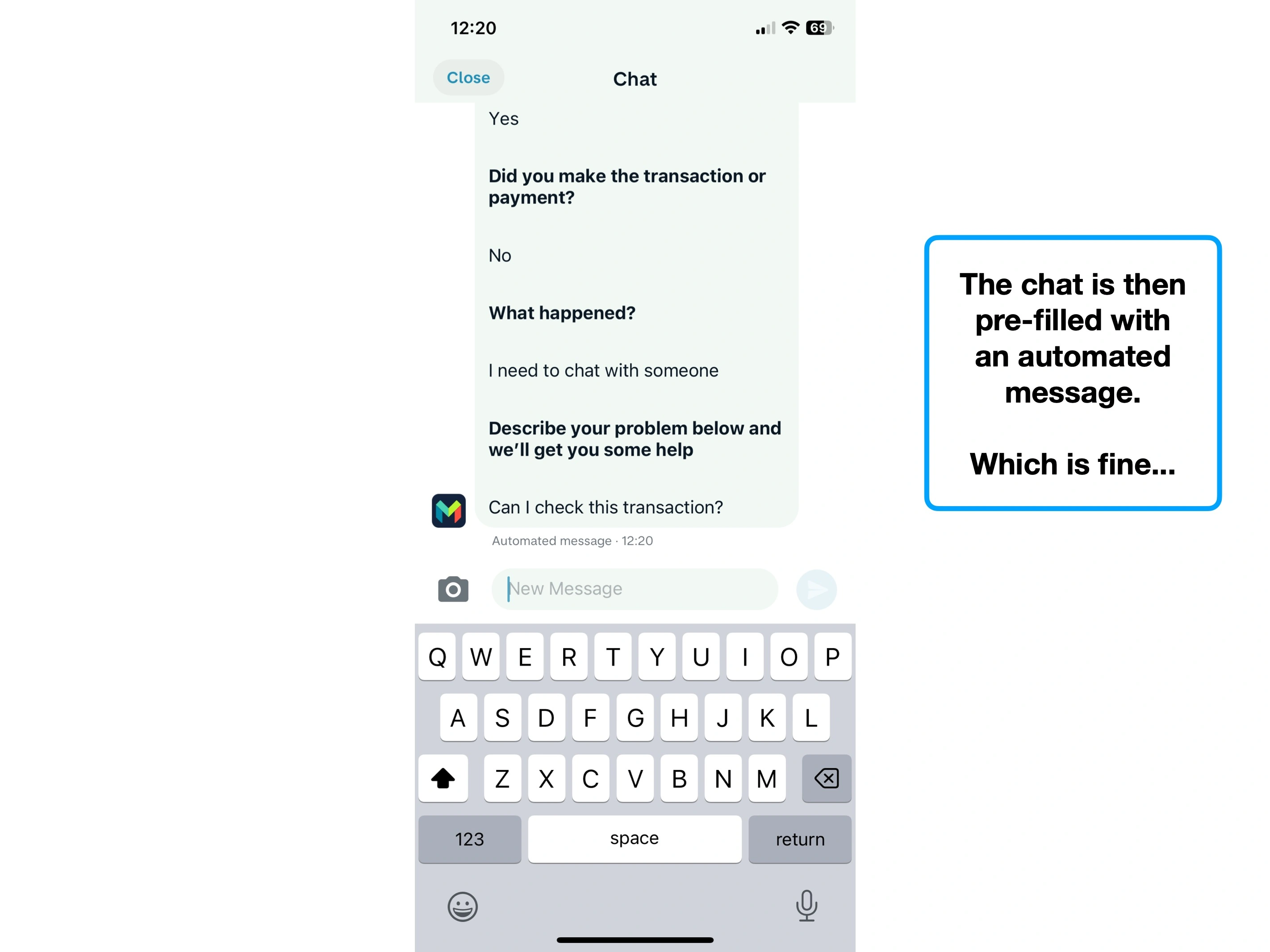

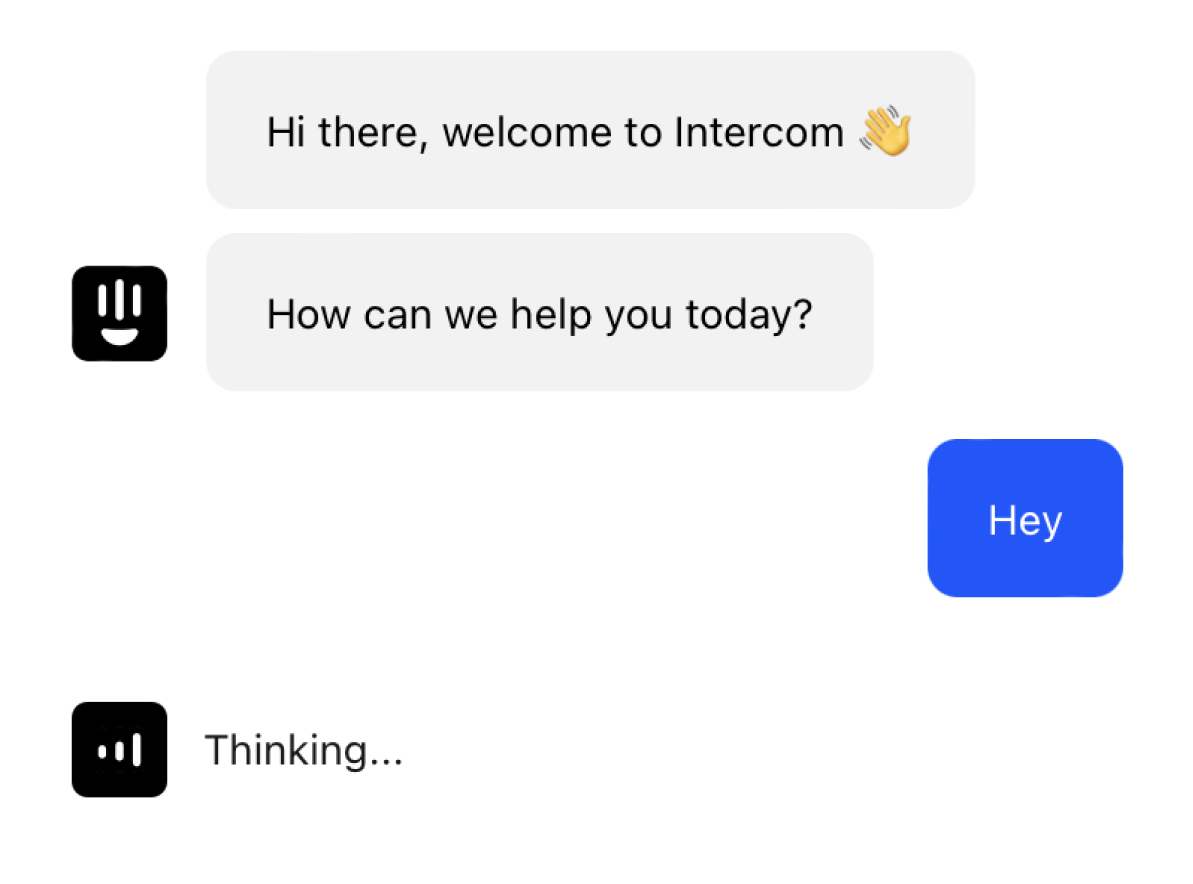

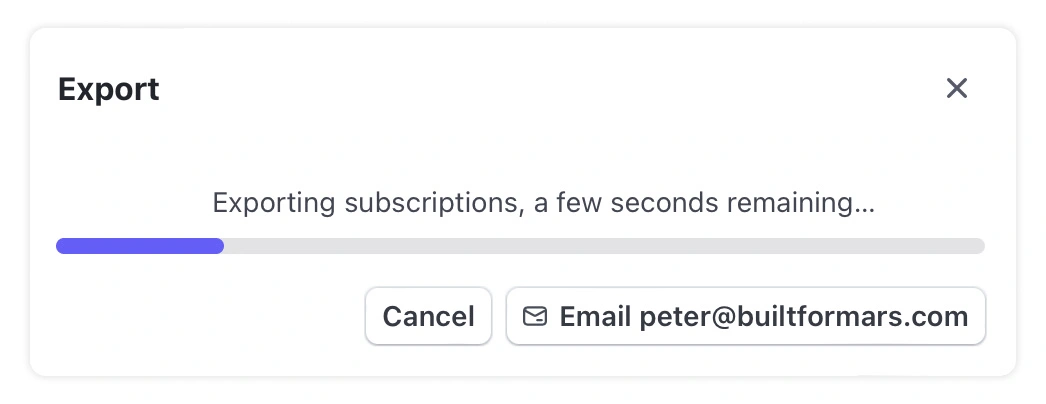

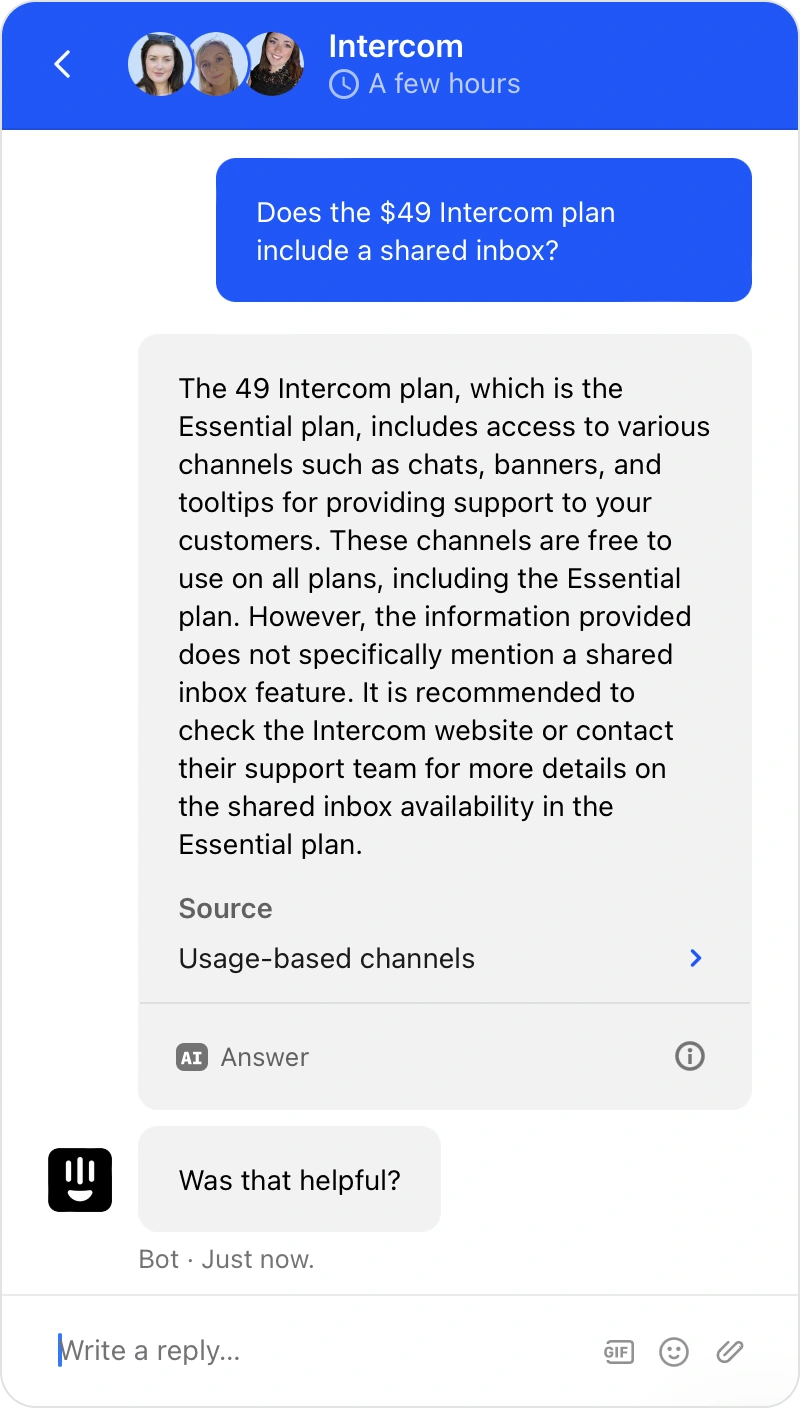

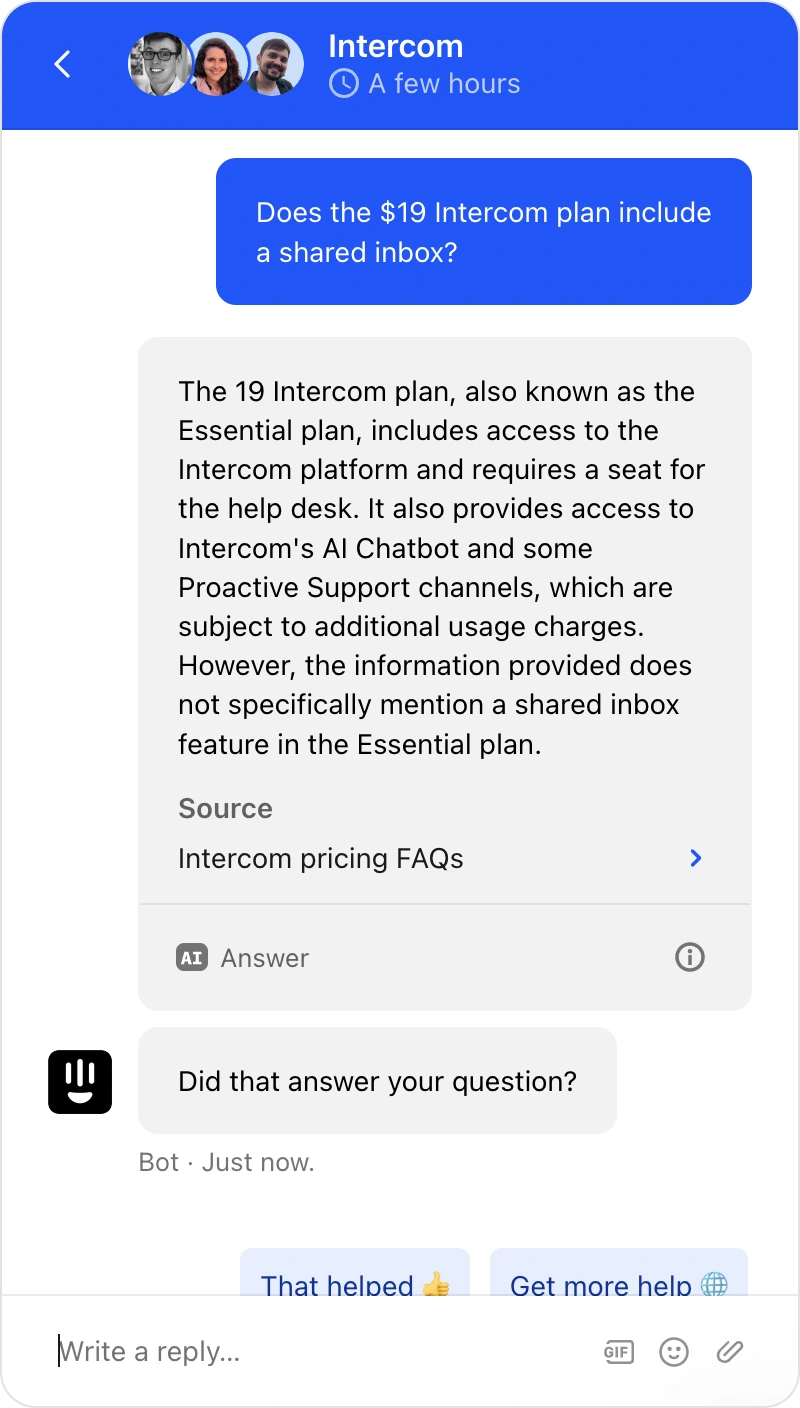

For instance, here's the same question, answered by both ChatGPT (via Intercom), and a human.

AI bot

Human with templates

Aside from the obvious difference in word count, you'll notice basic usability and experience problems, like the currency not being placed next to the prices, but in a separate paragraph.

Conceptually there's a problem too: these widgets are usually only a few hundred pixels wide, so long messages often run entirely out of view, with the widget auto-scrolling as they arrive.

You've taken an already encumbered answer (a hallmark of the large language models), and forced the user to scroll up and down to read the conversation.

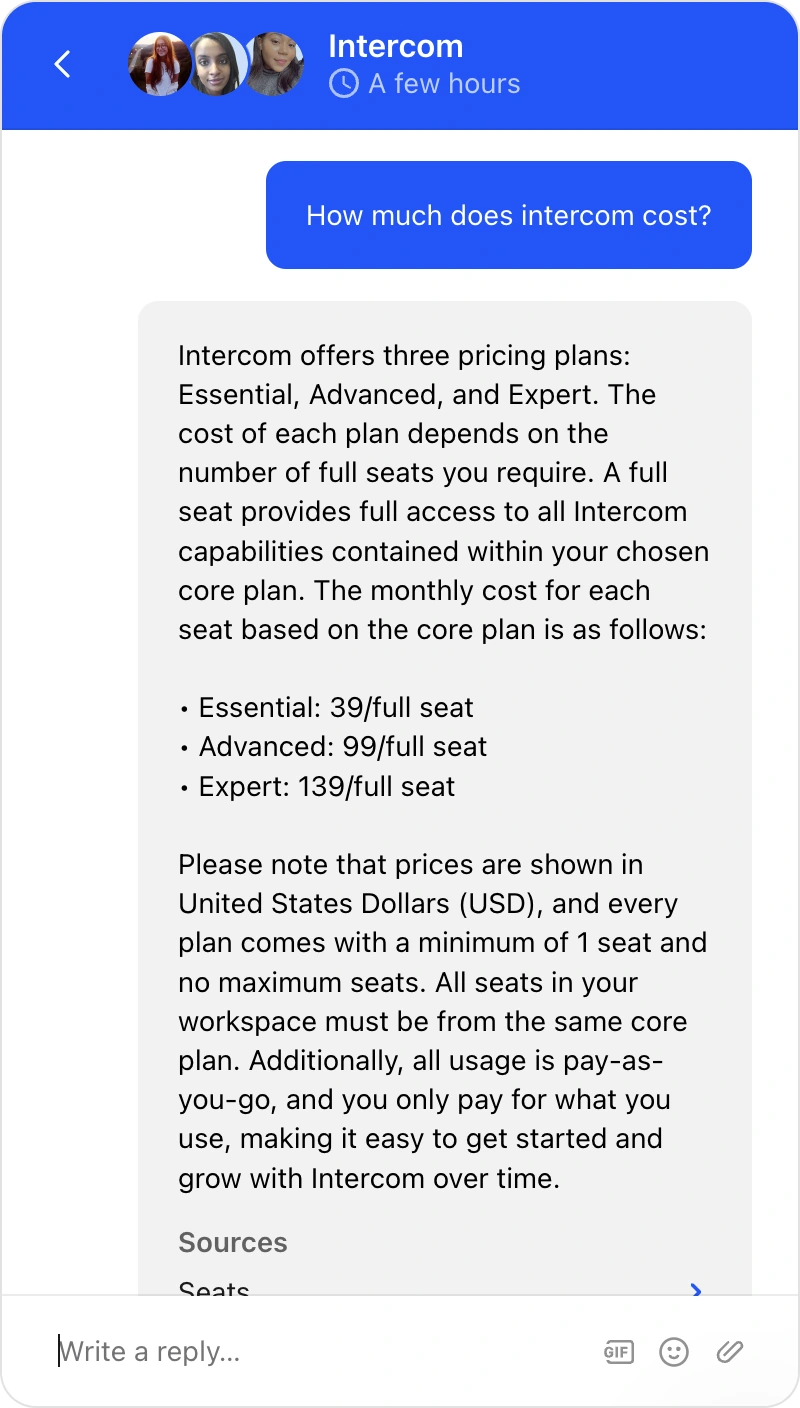

Another problem is how it differs from traditional 'rule-based' chatbots—it'll 'infer' answers.

i.e., you can ask about a plan that you've made up, and it'll tell you about it.

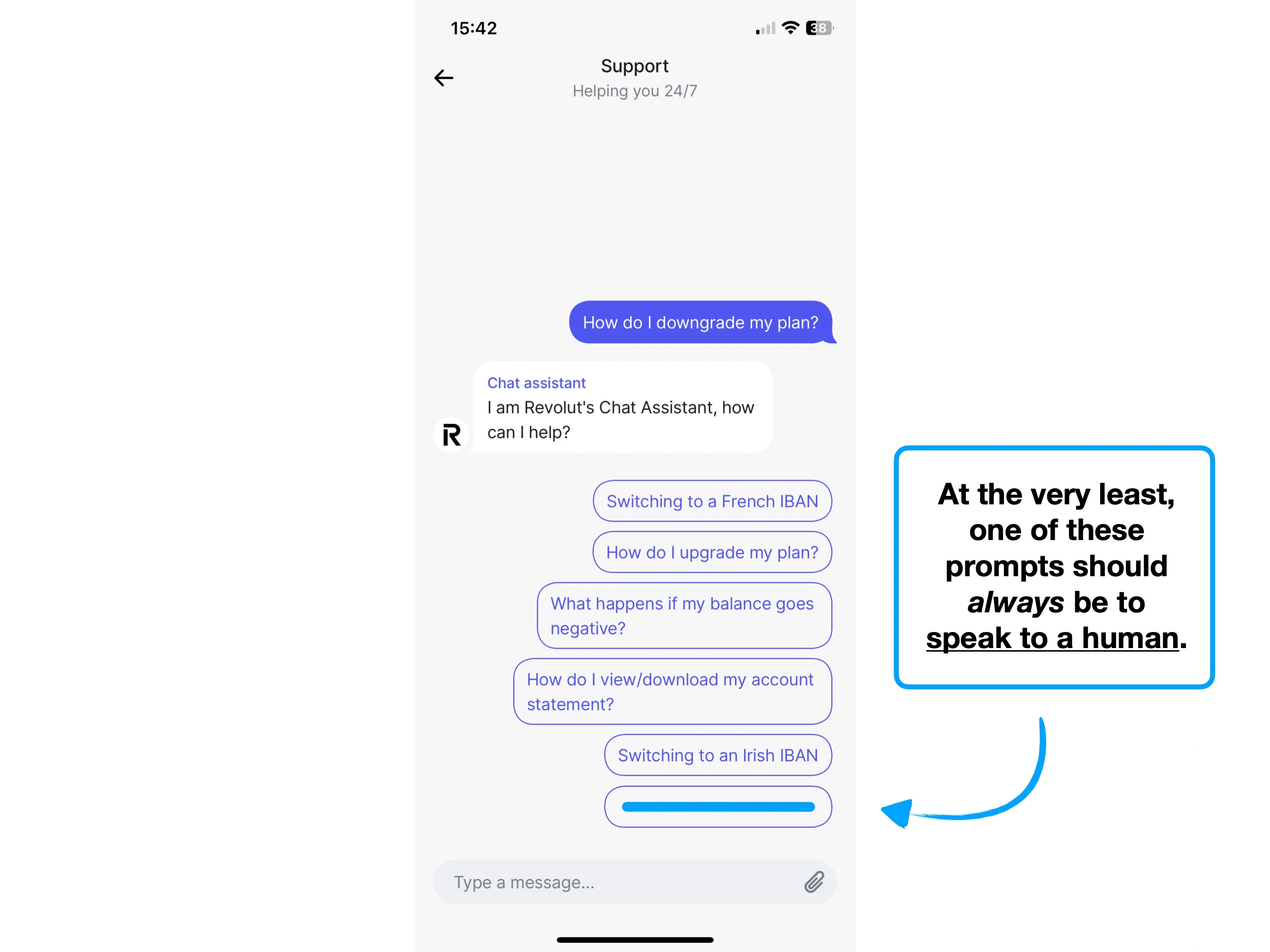

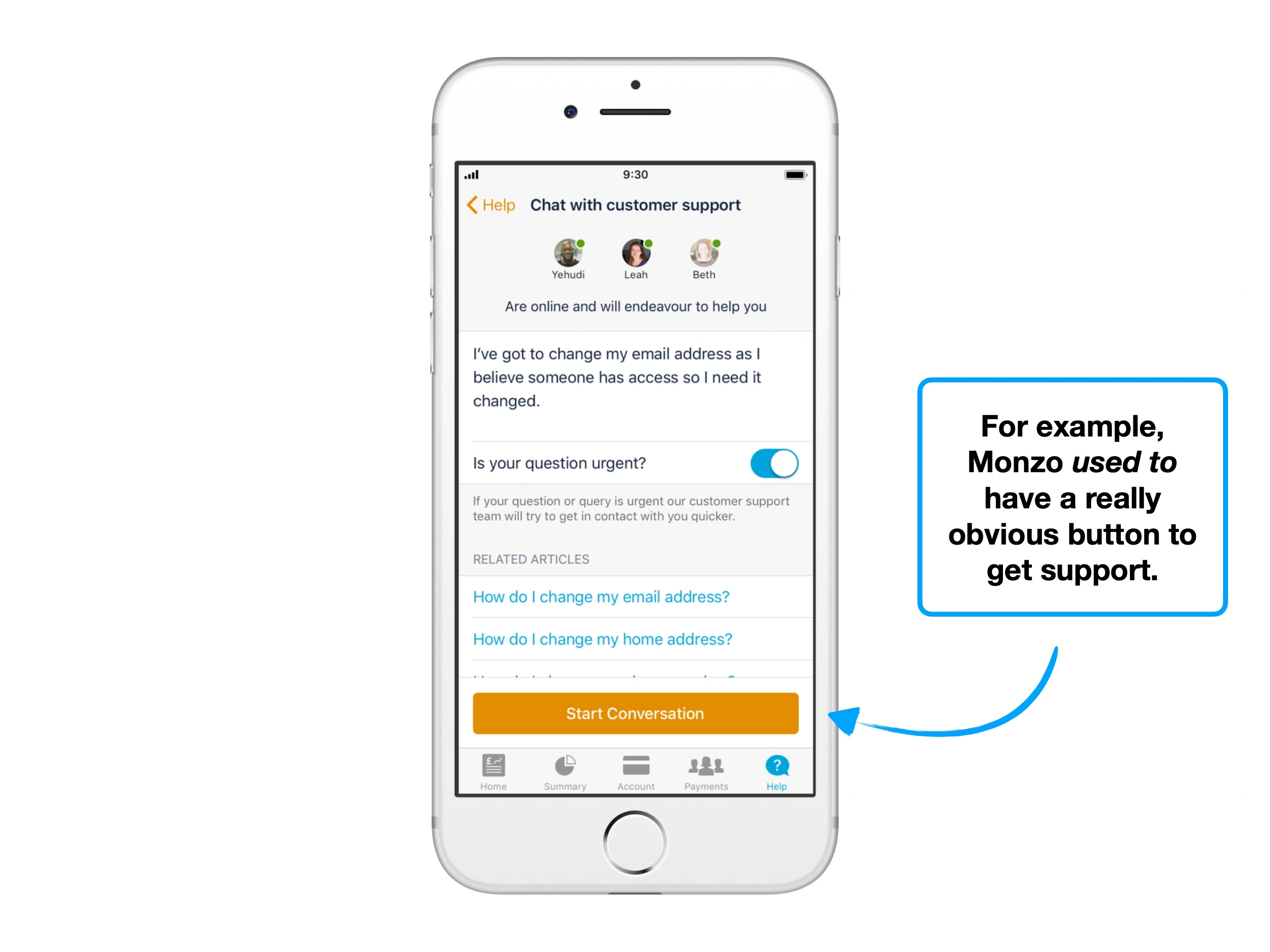

The point is that if AI doesn't know when it's wrong (which it doesn't), then you need to give clear escape routes.

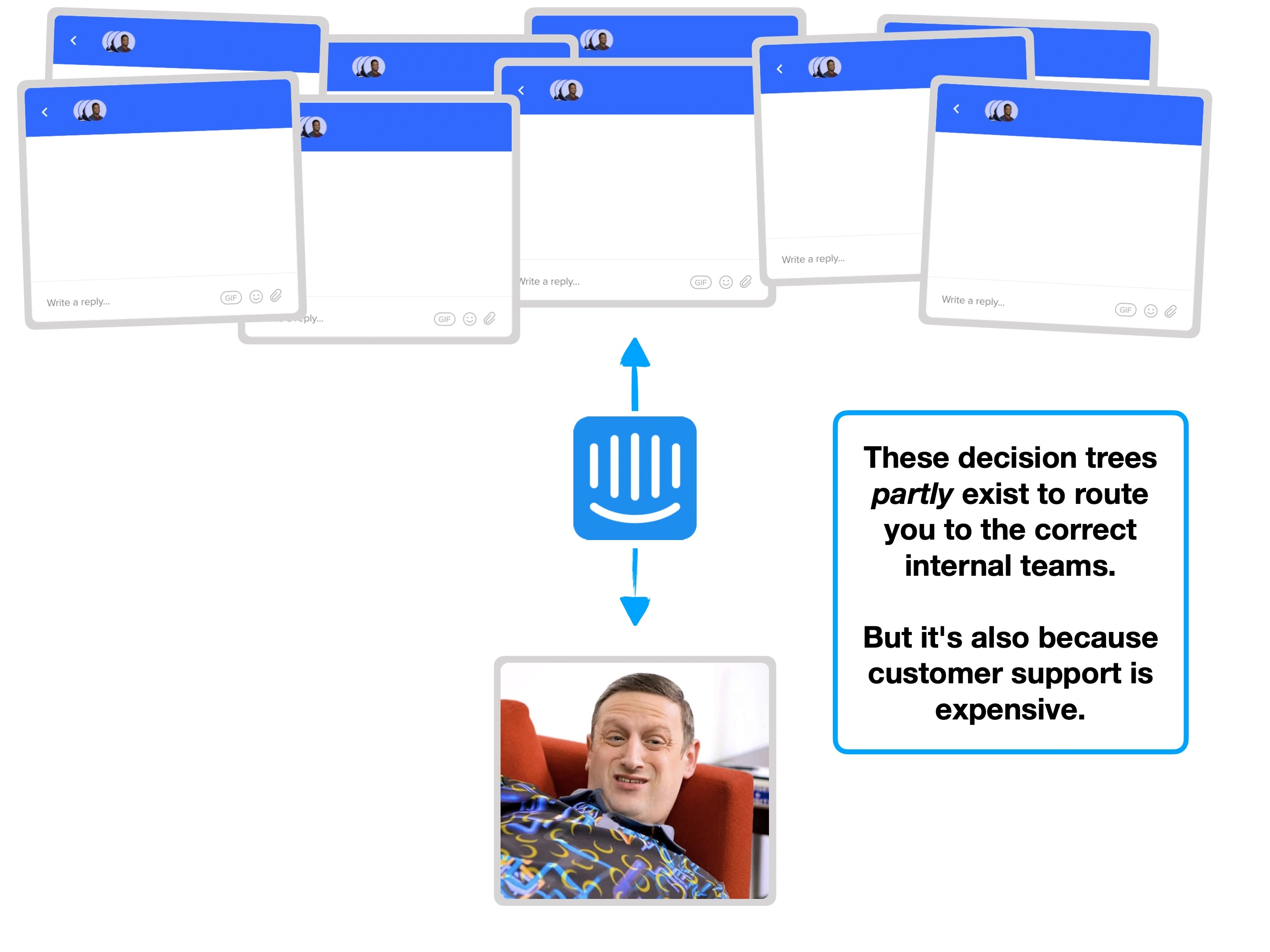

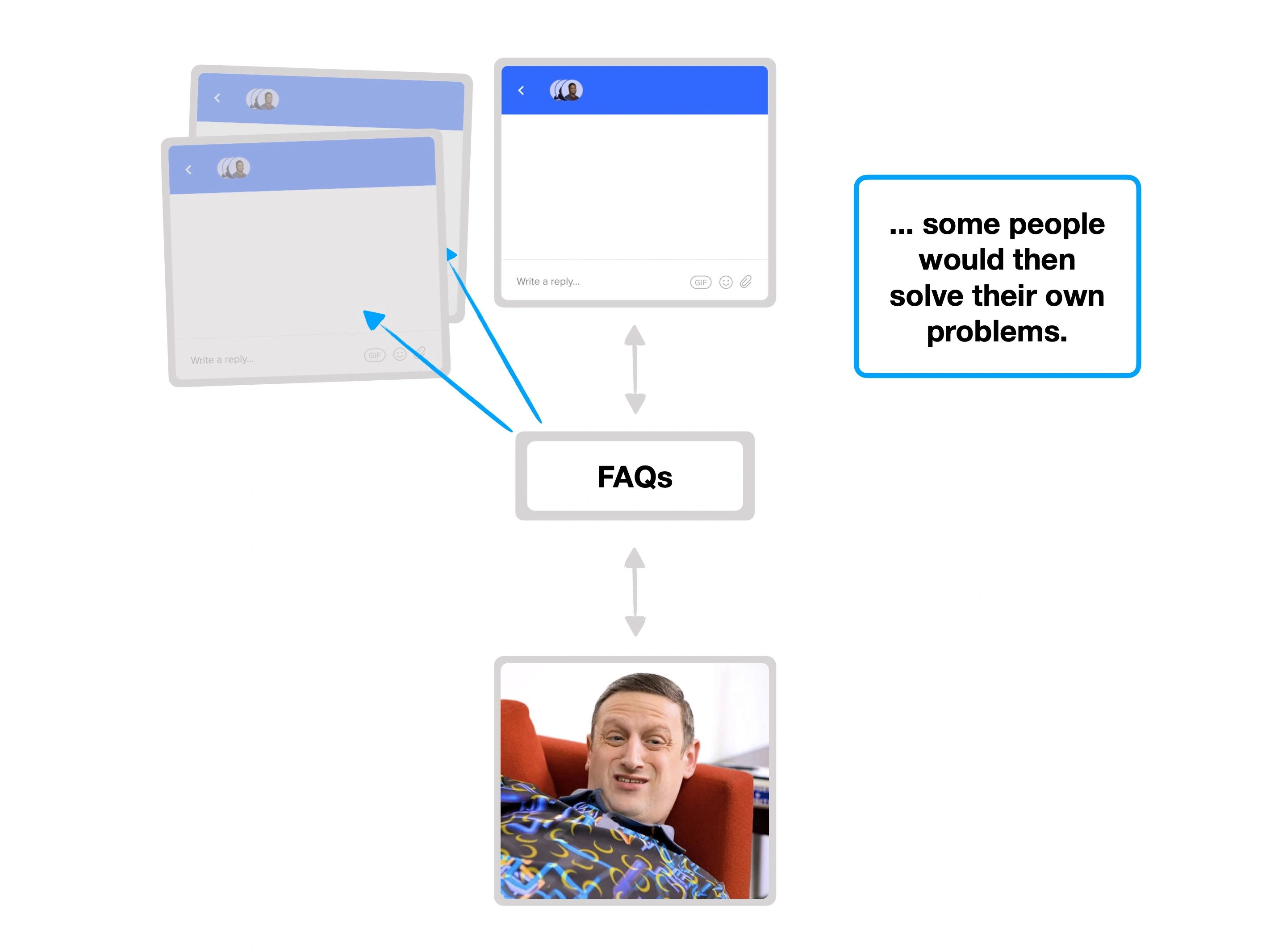

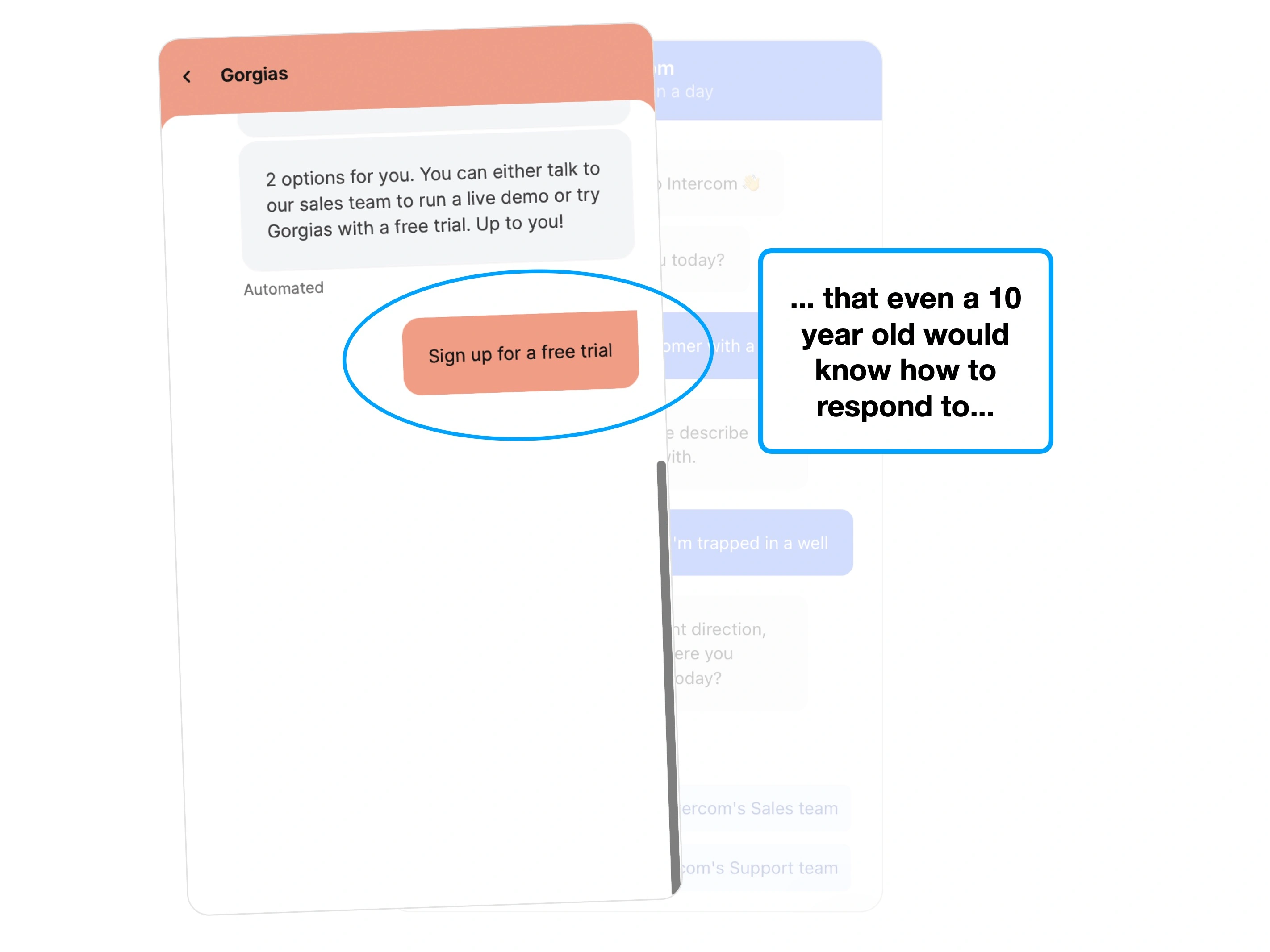

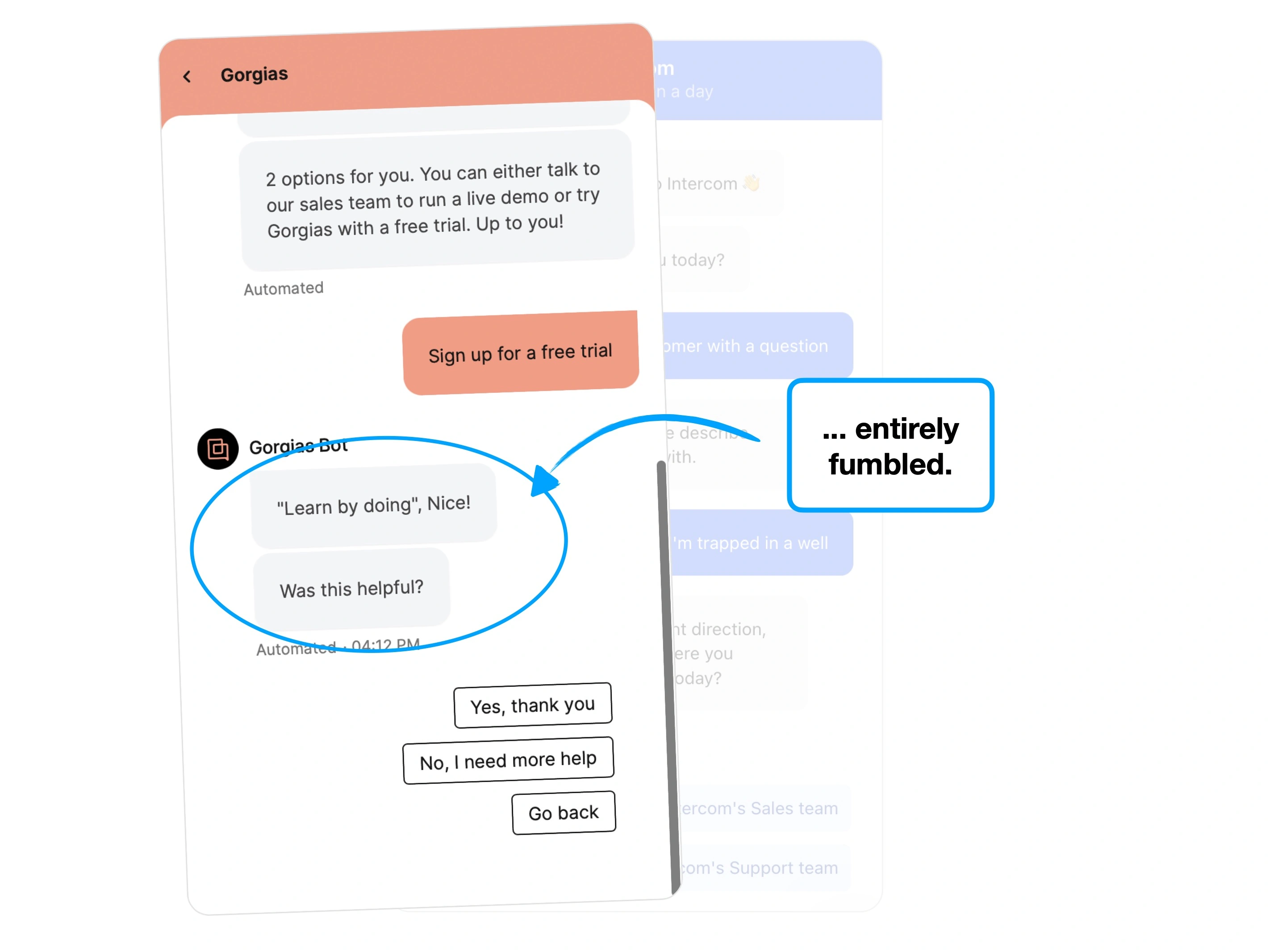

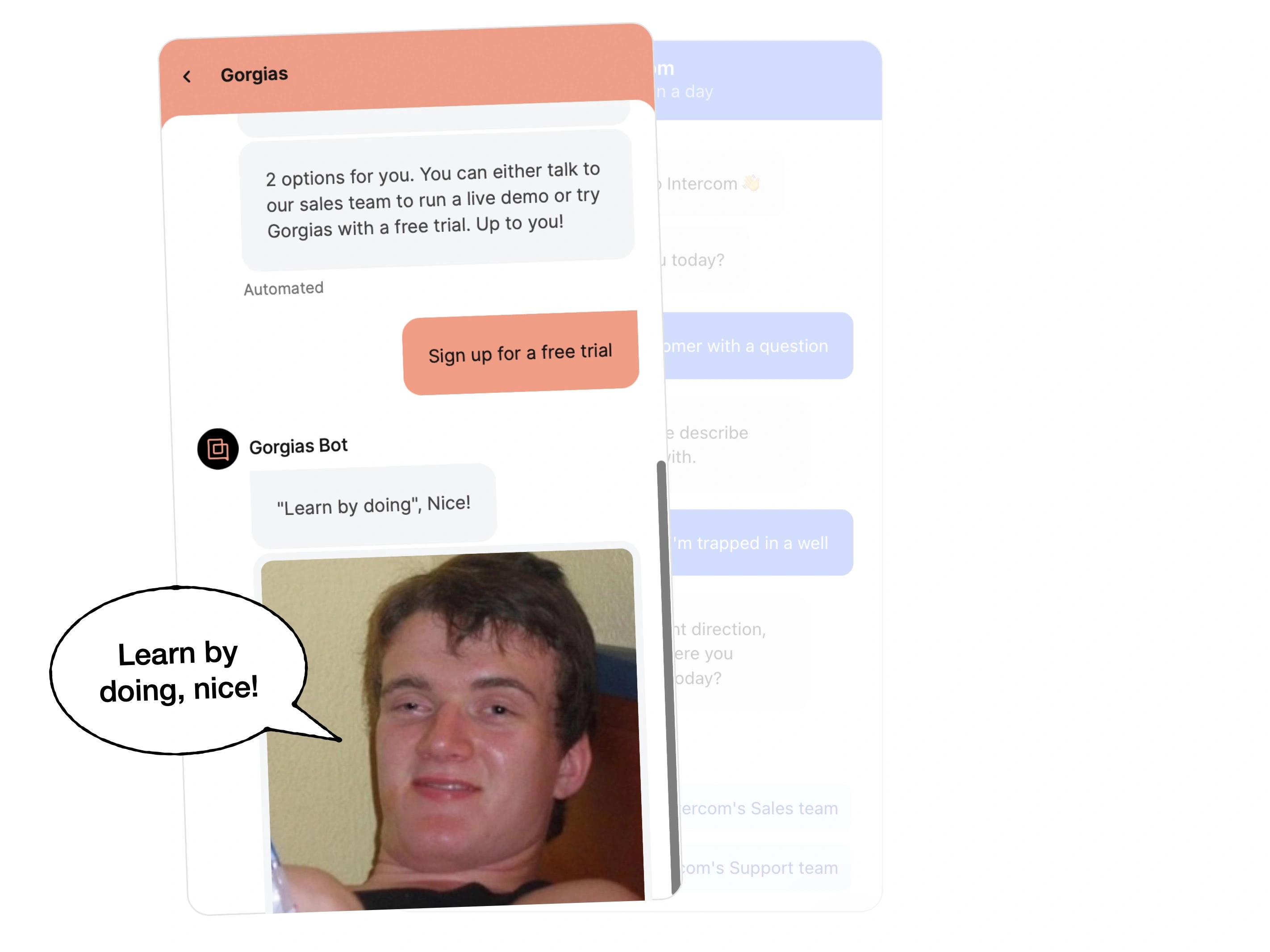

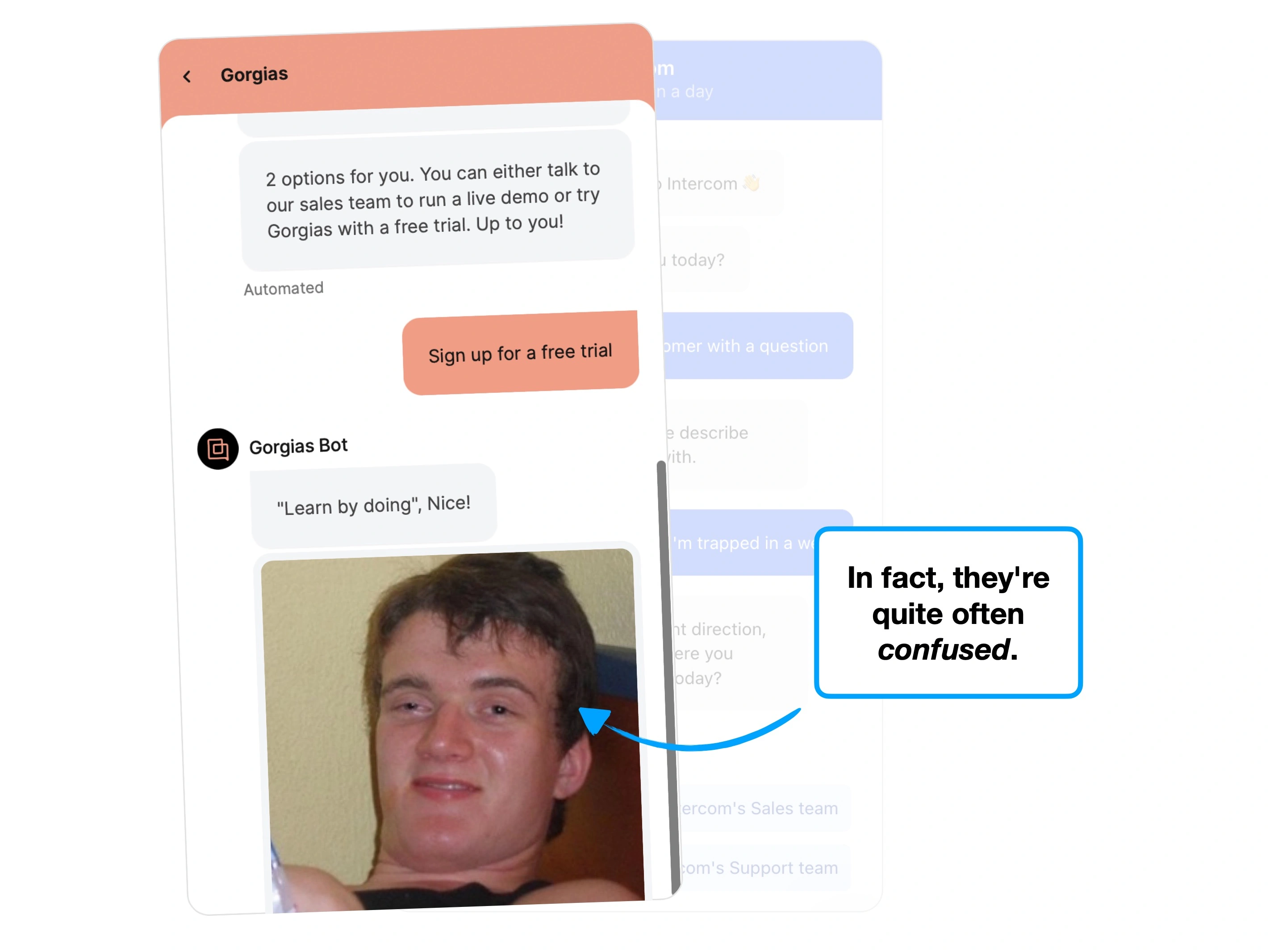

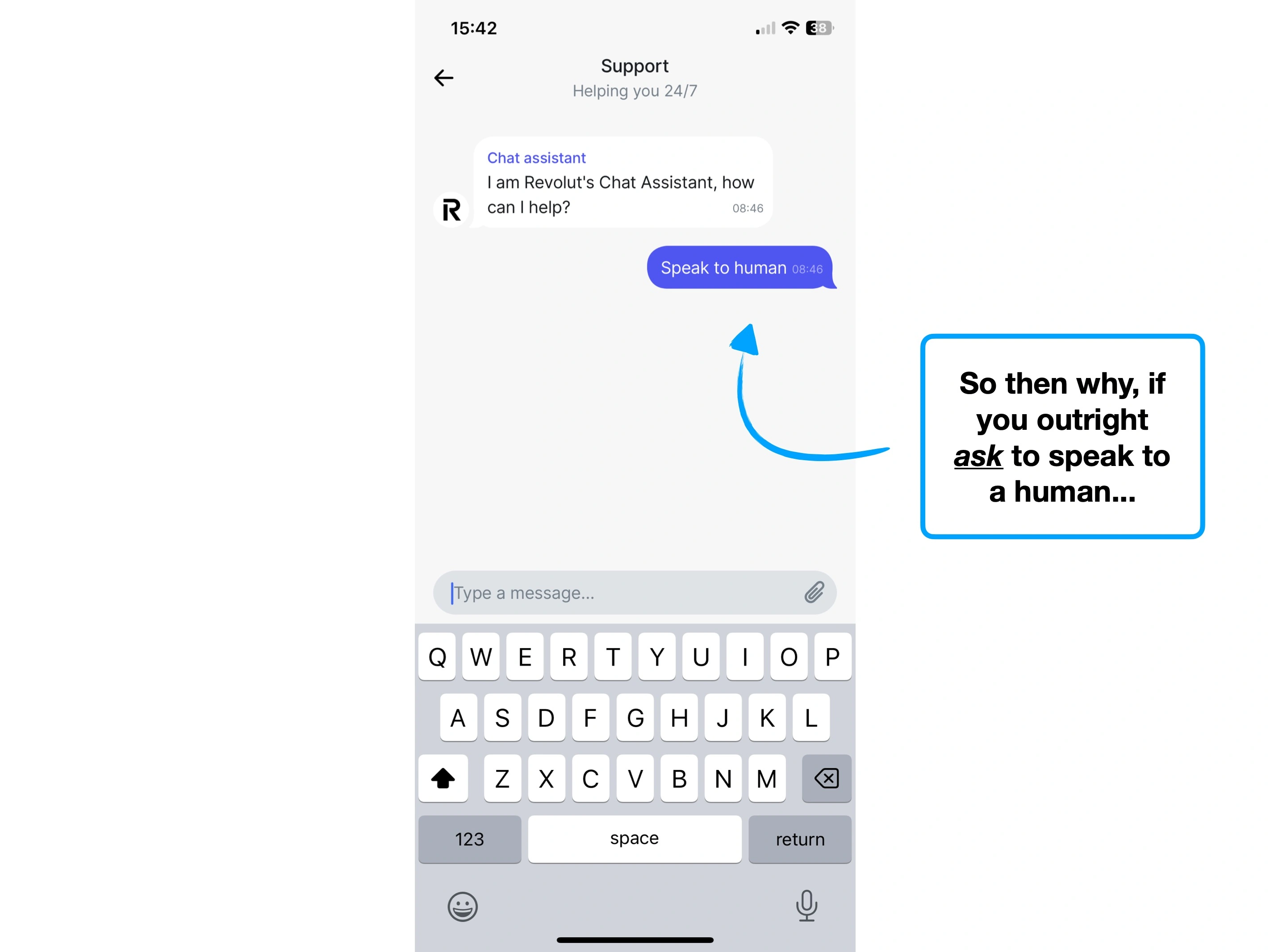

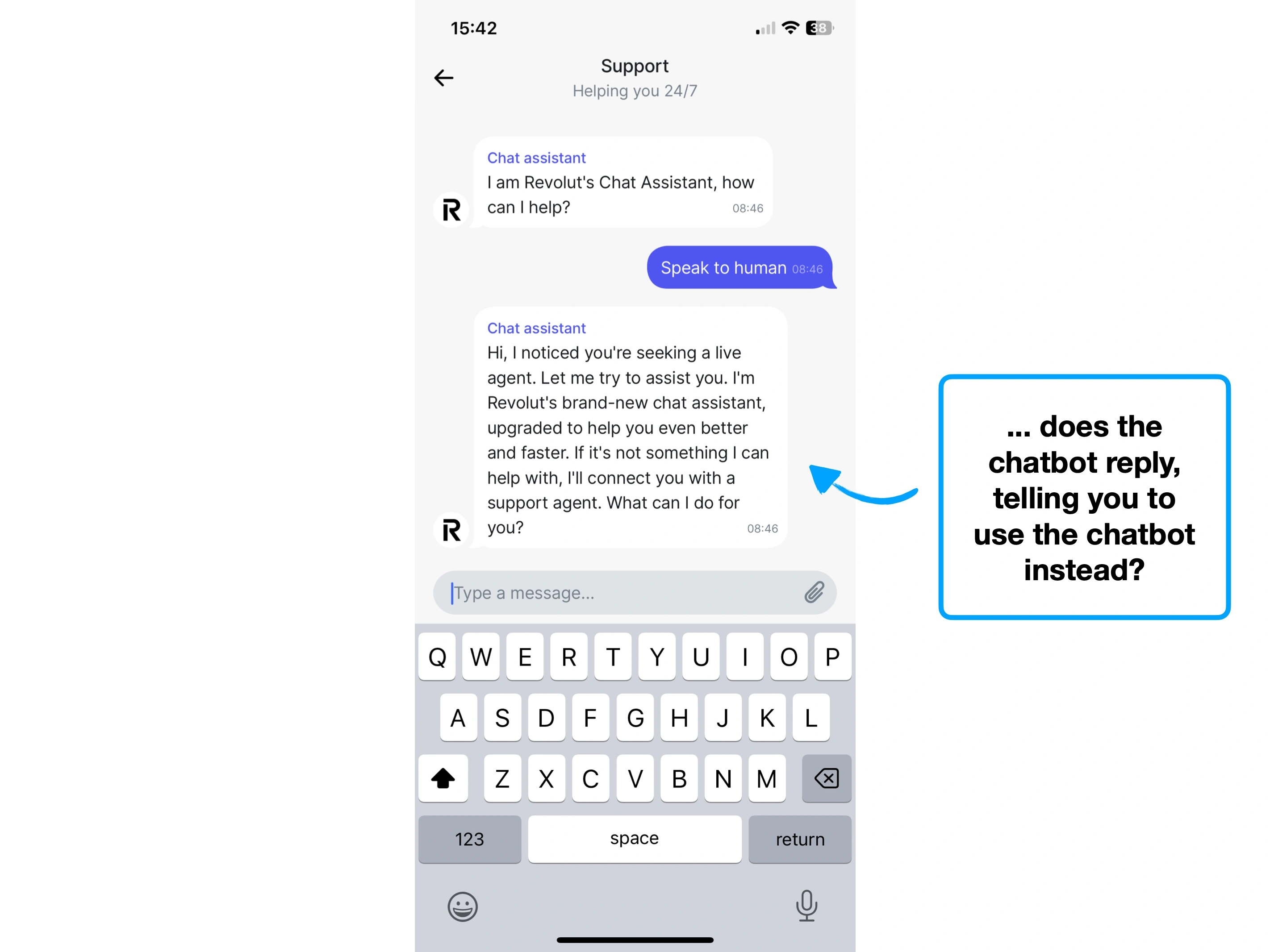

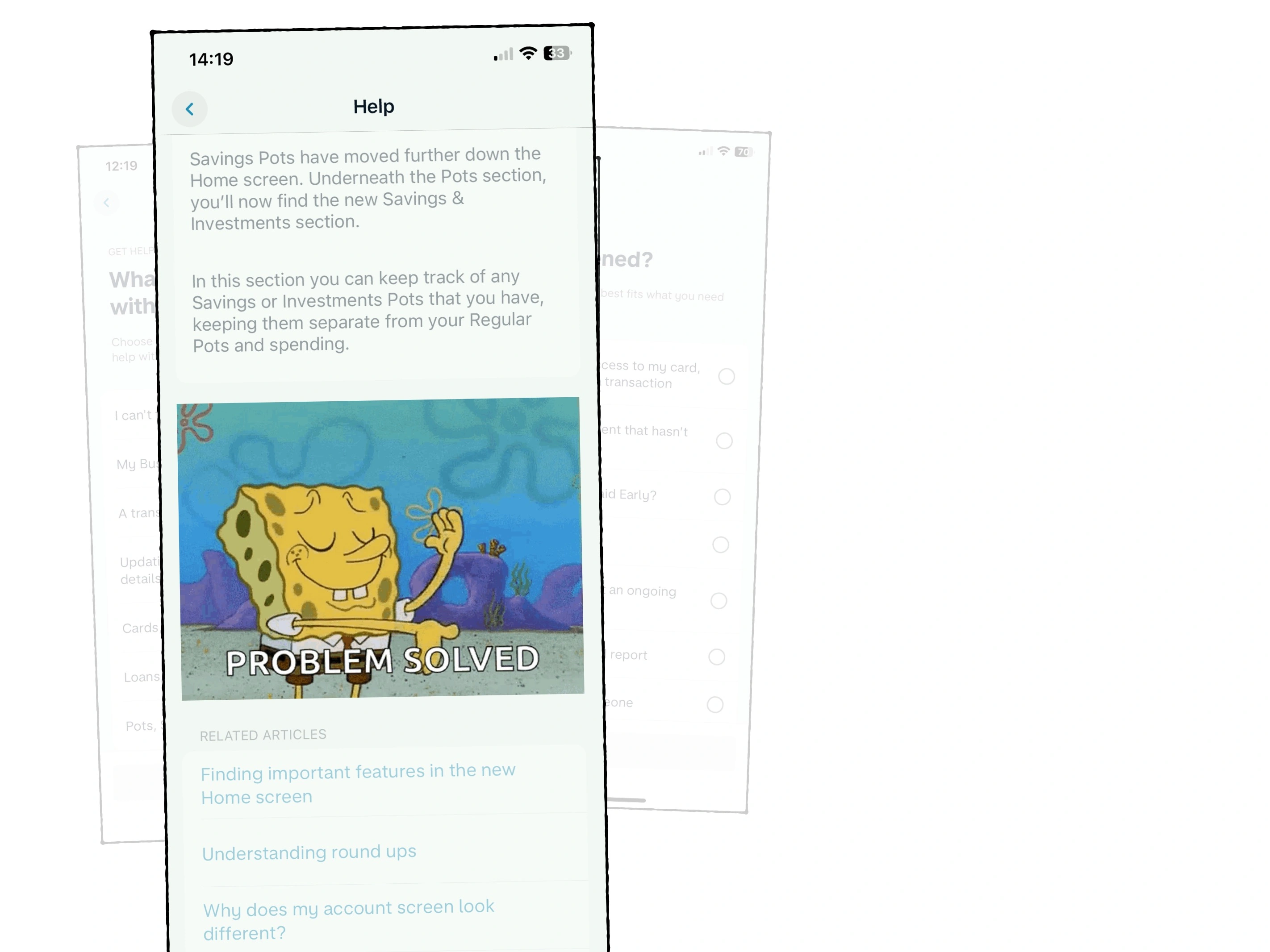

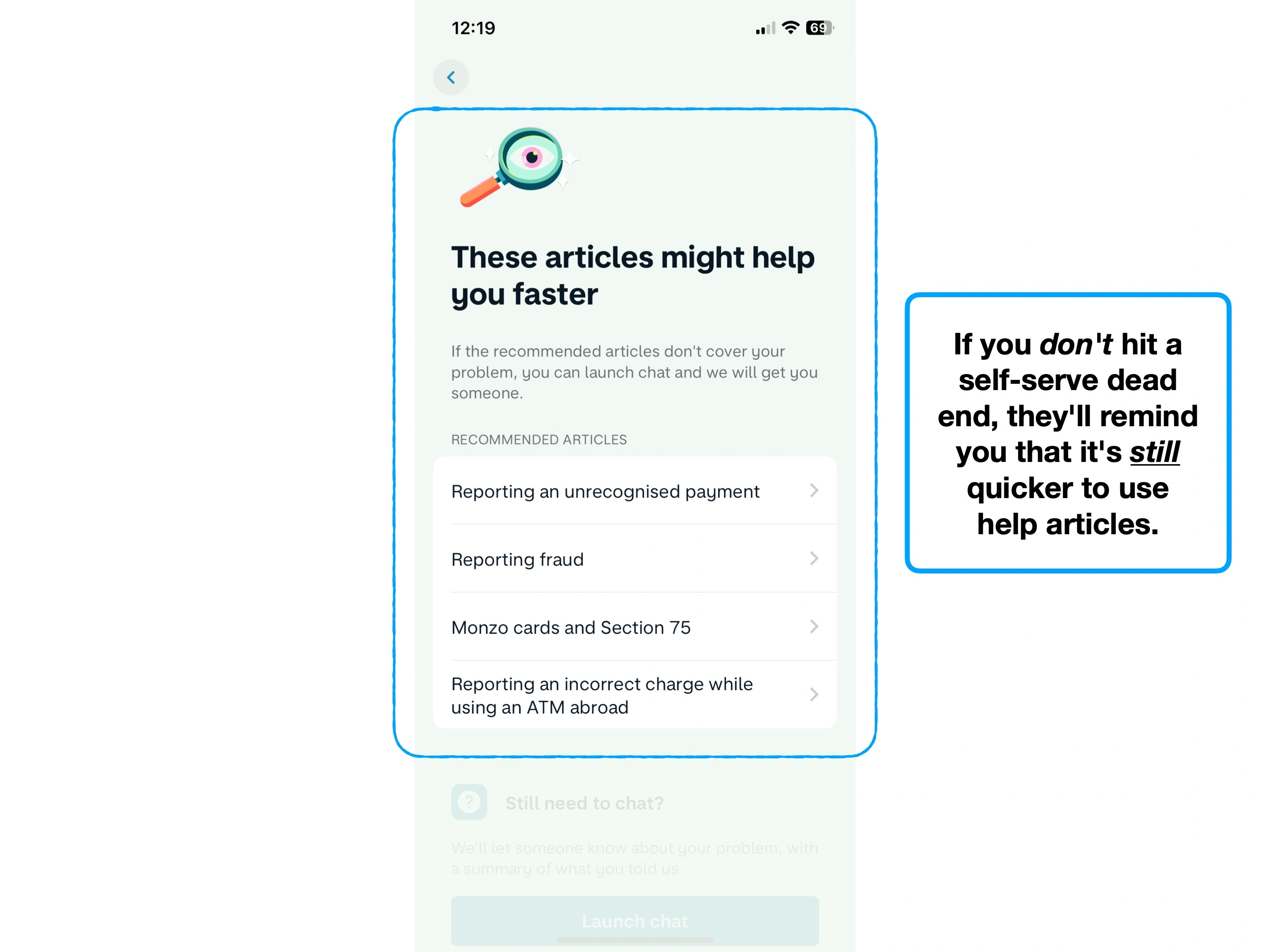

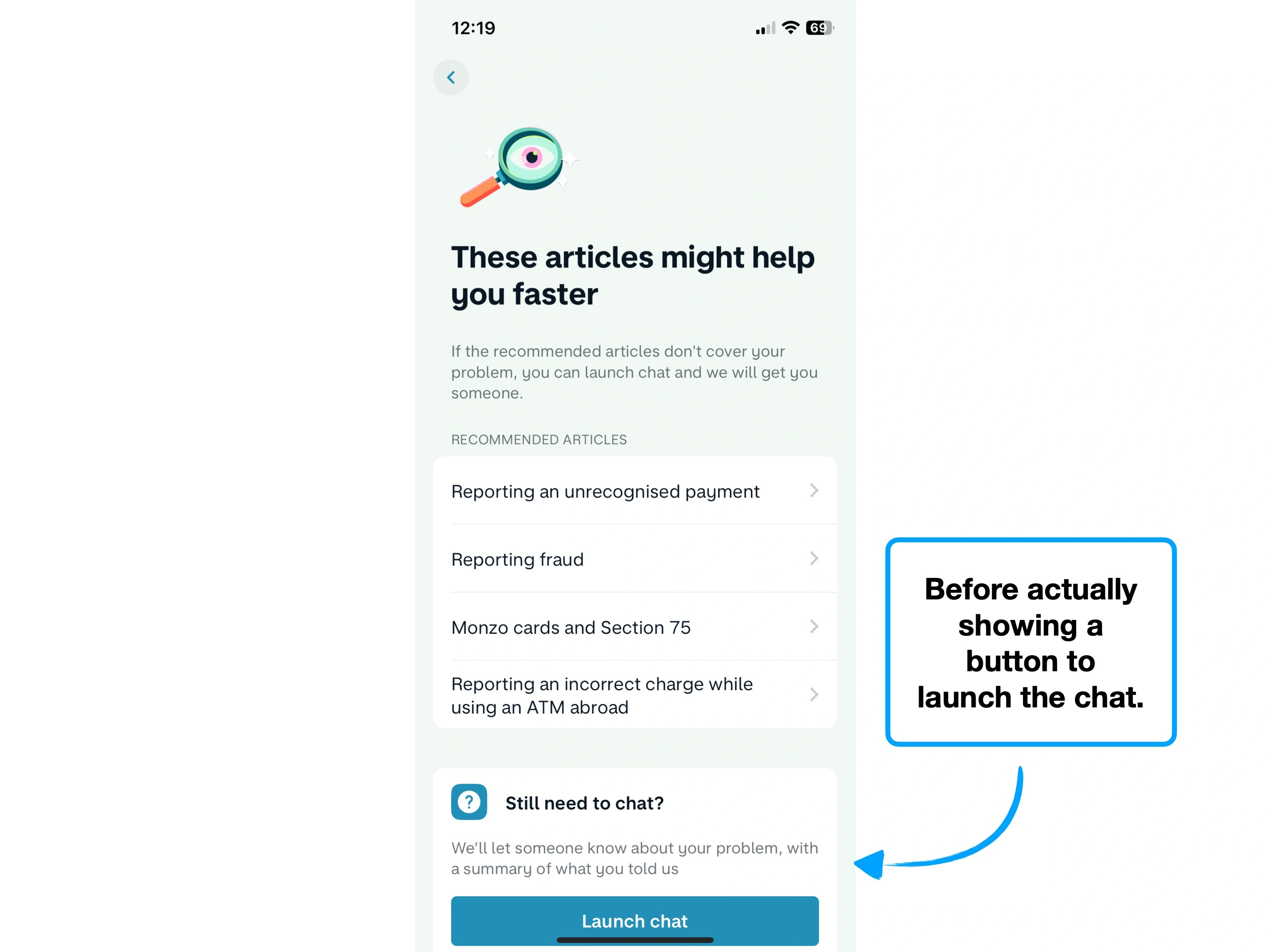

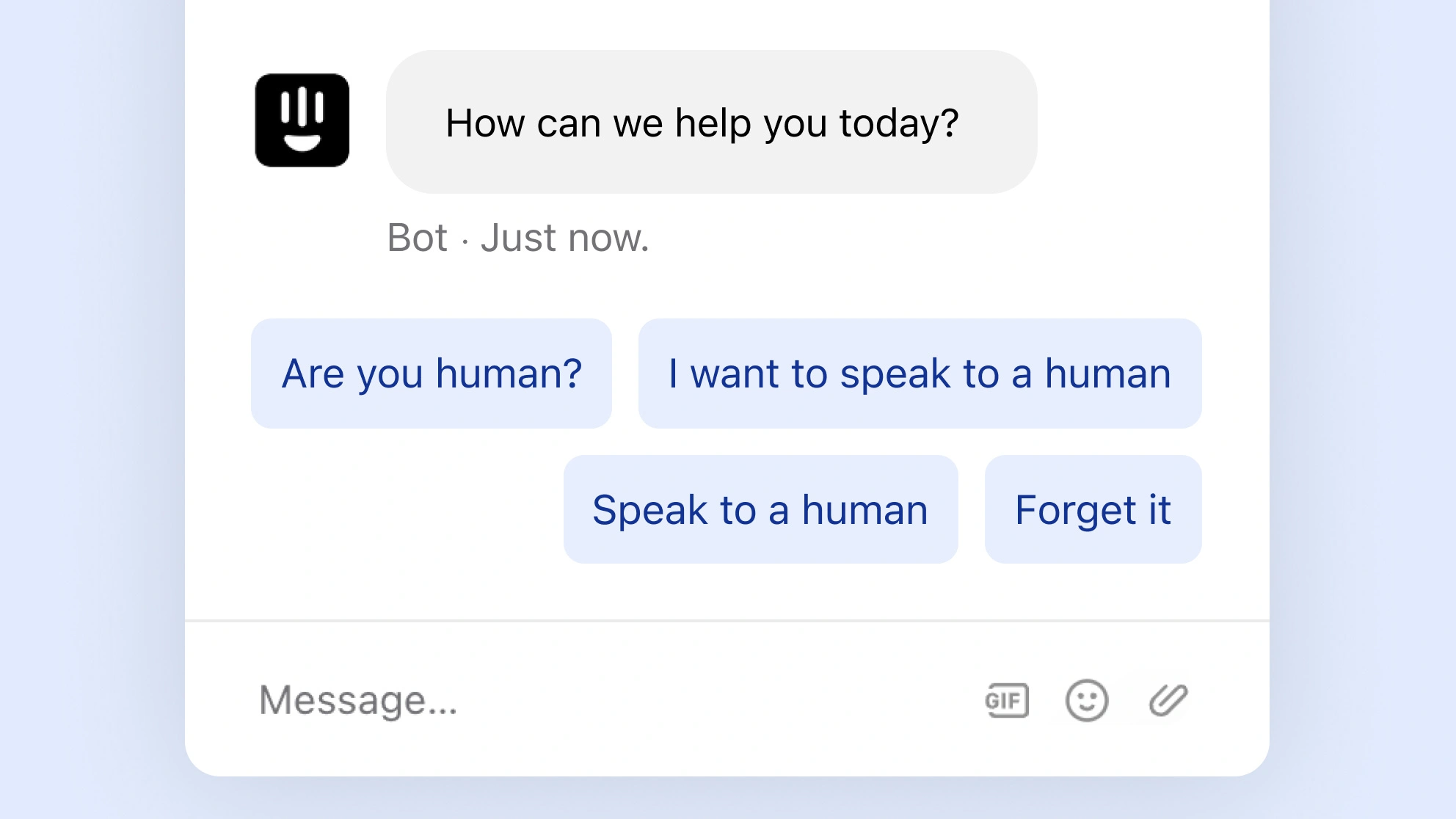

Conceptually, using decision trees and chatbots to gatekeep real human interaction only works if the bot correctly knows when to answer, and when to give up. AI doesn't (yet).

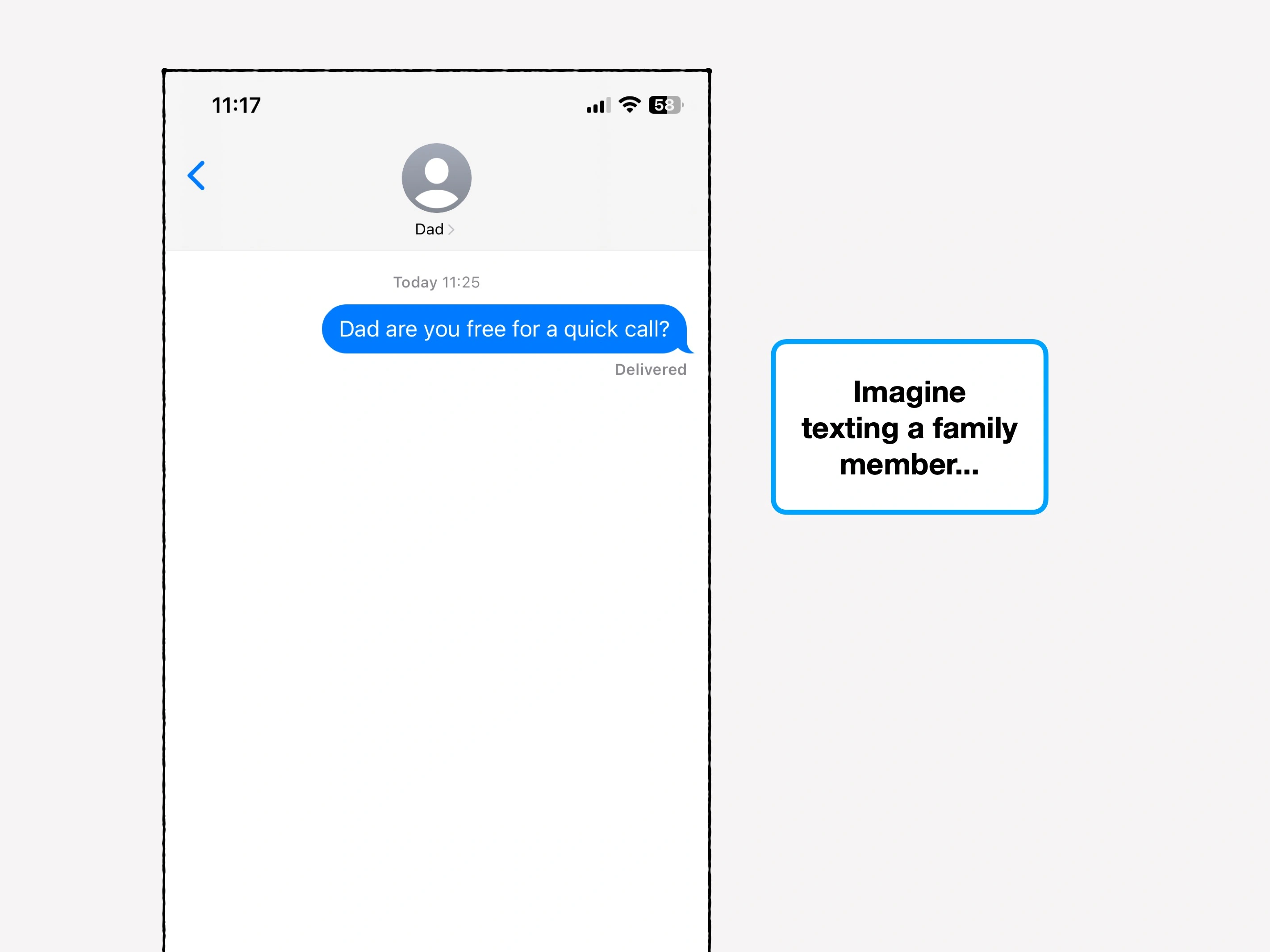

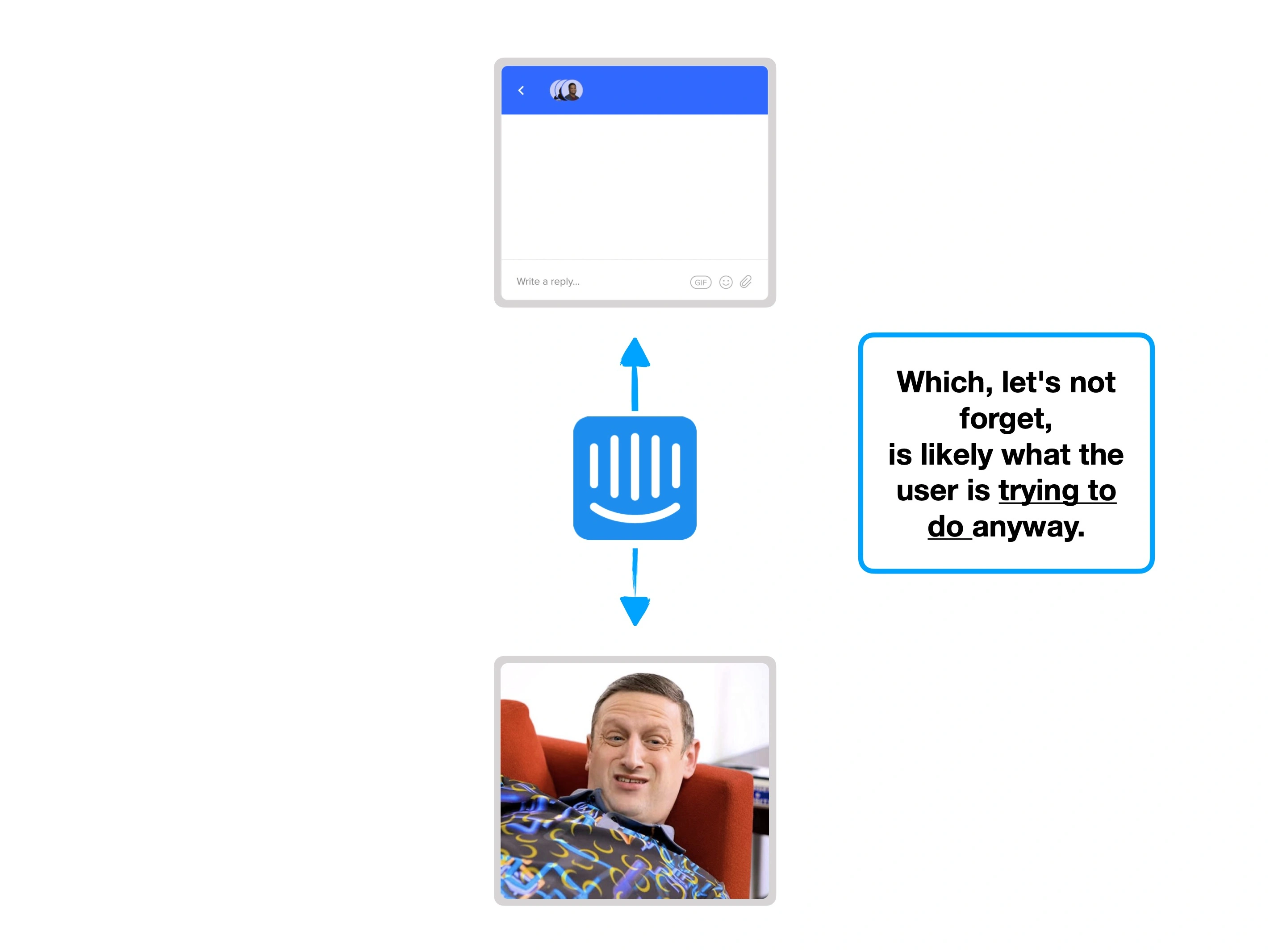

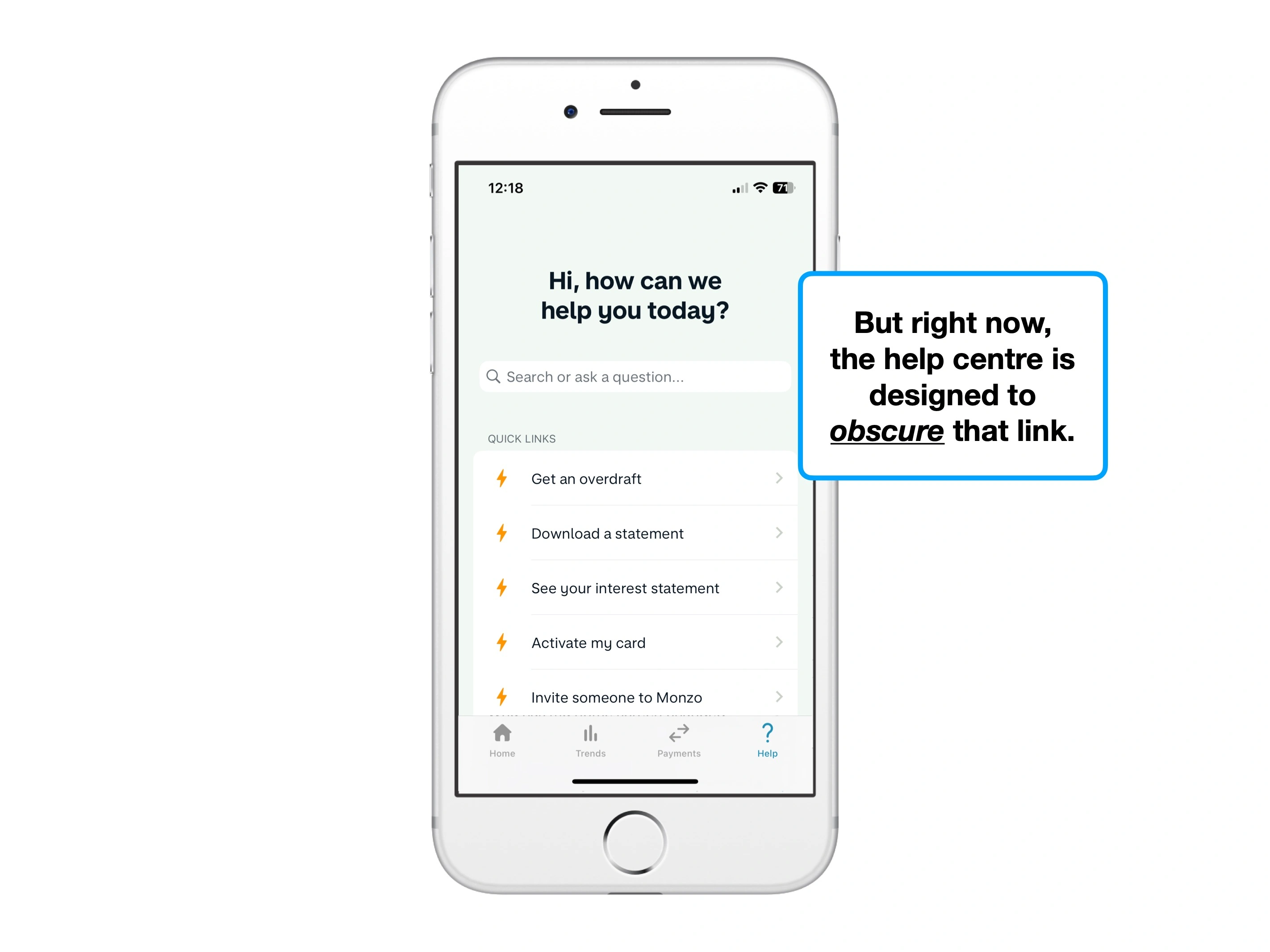

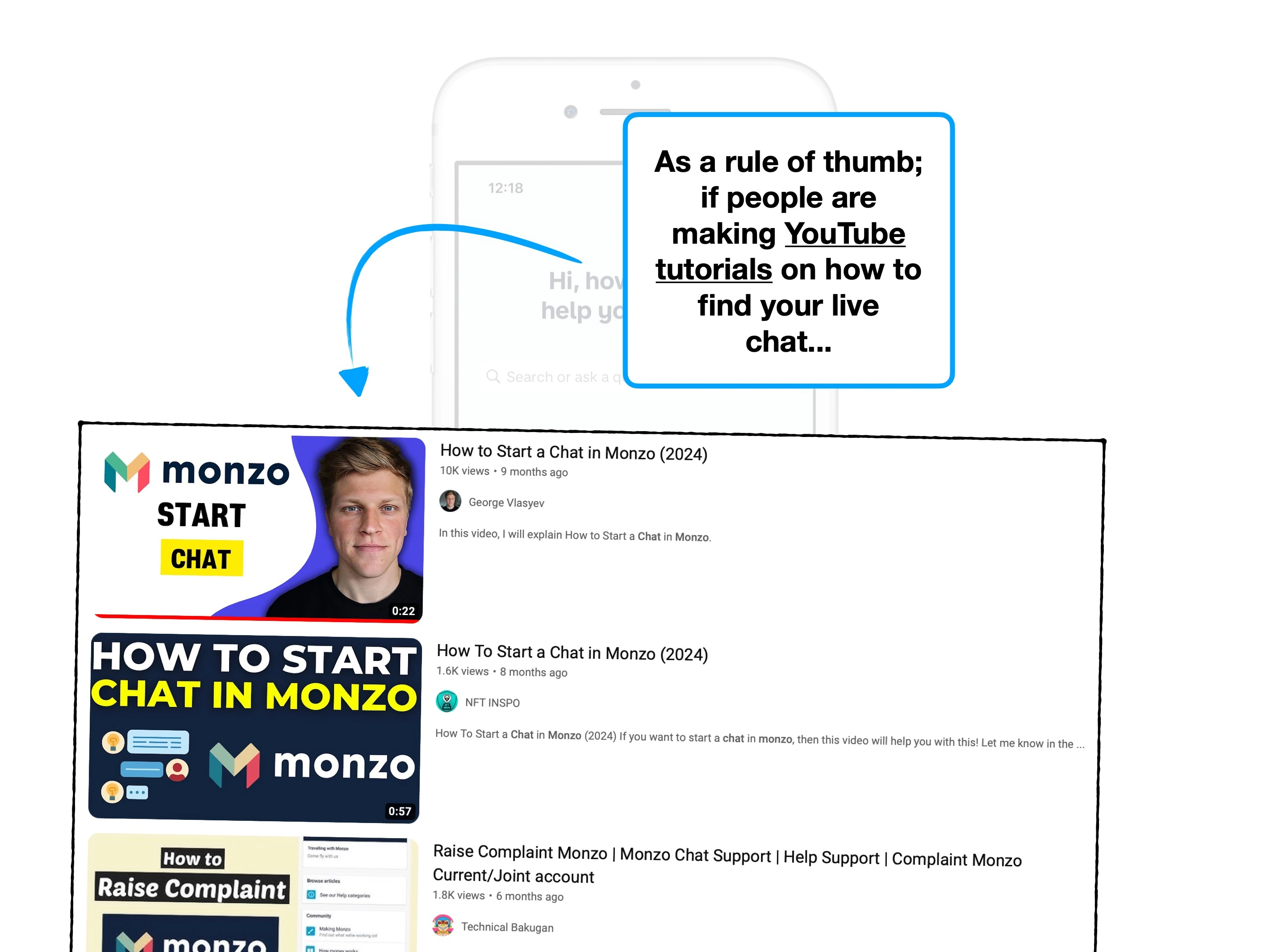

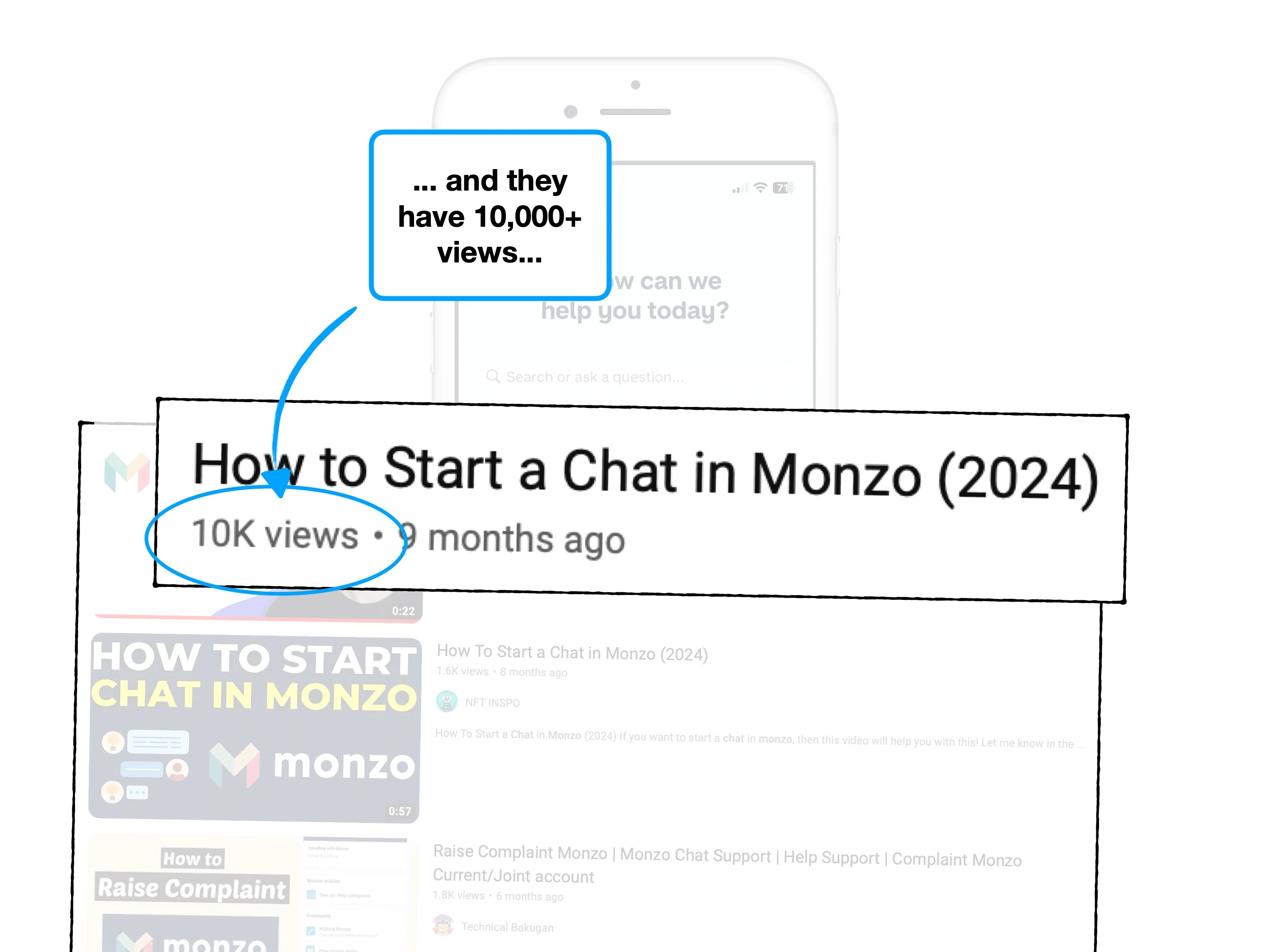

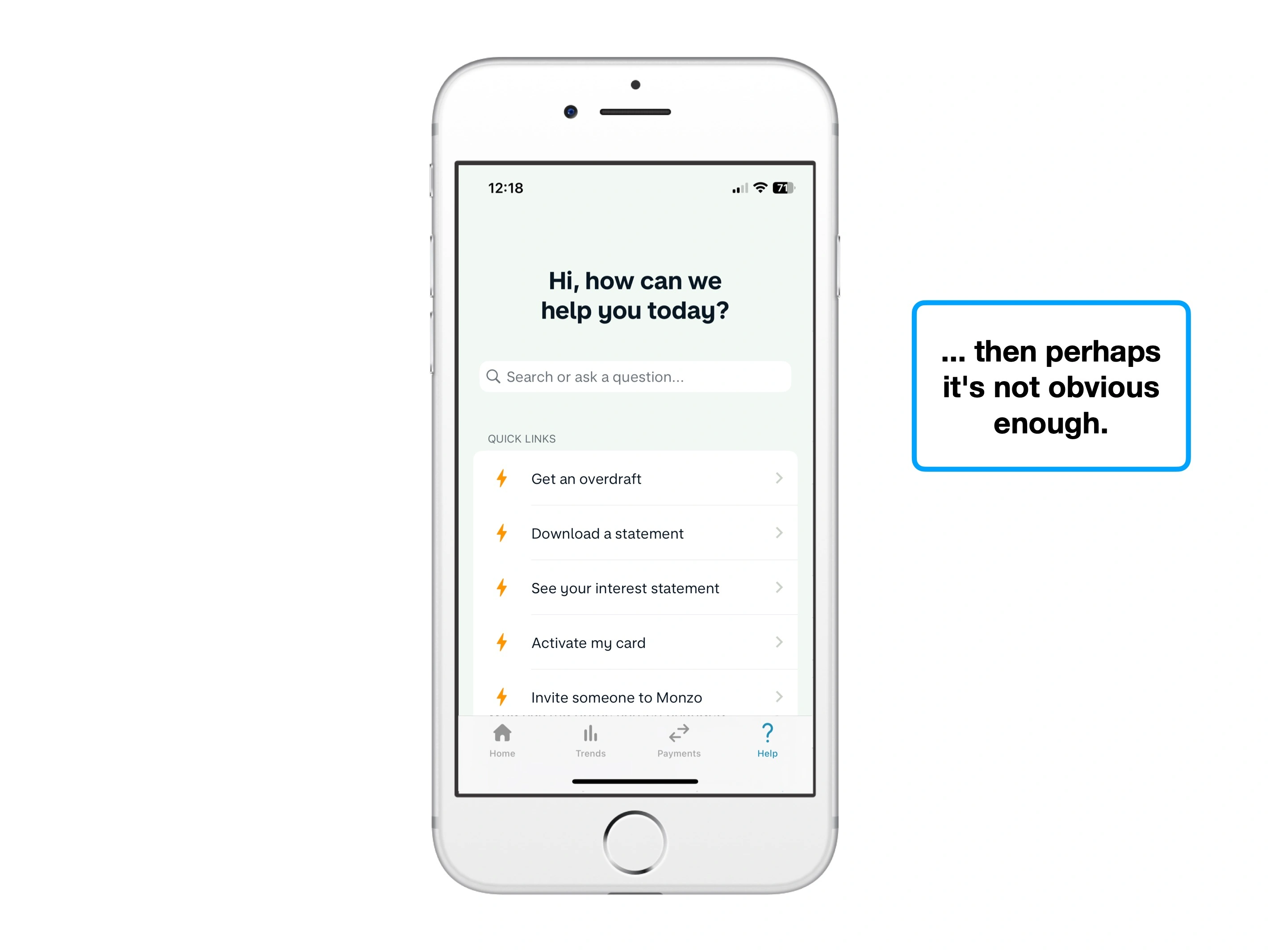

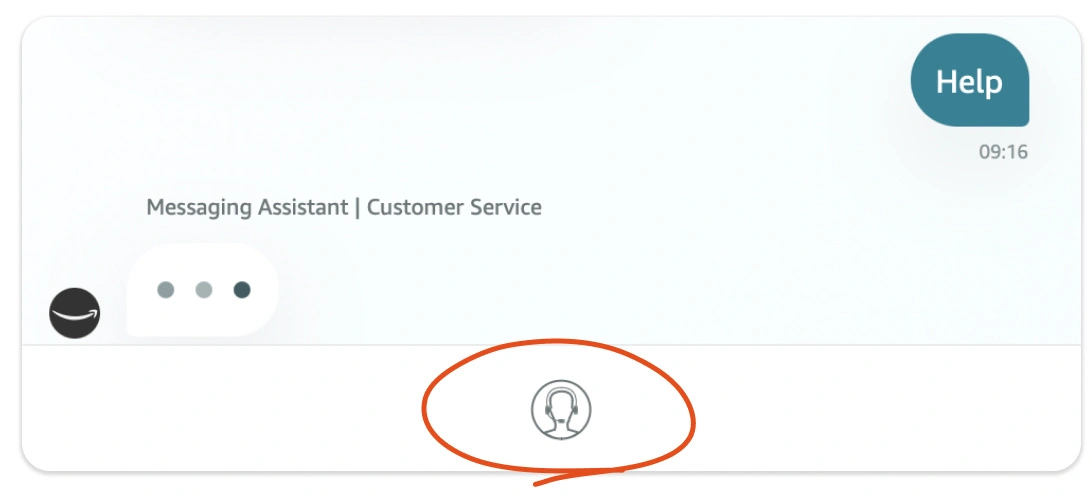

Amazon understand this. They'll show this 'chat to agent' button whenever the chatbot is thinking.

It's a de facto 'pull to escape' cord.

Even if the user doesn't touch it, it's a 🛟 Visible Lifeboat, improving the expected value of a conversation. It's reassurance that a human will be there, if you need them.
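As a loose sketch of that idea (not Amazon's actual implementation), you could tie the escape route to the state of the conversation rather than to the bot's confidence. The state names and control names below are hypothetical, and showing the button after the bot has answered (not just while it's thinking) is my own assumption.

```python
from enum import Enum


class ChatState(Enum):
    # Hypothetical conversation states for a support chat widget.
    BOT_THINKING = "bot_thinking"
    BOT_ANSWERED = "bot_answered"
    WAITING_FOR_AGENT = "waiting_for_agent"
    AGENT_CONNECTED = "agent_connected"


def visible_controls(state: ChatState) -> list[str]:
    """Return the controls the widget should show in a given state."""
    controls = ["message_input"]

    # The bot can't reliably tell when it's wrong, so the escape route
    # depends only on who is in control, never on the bot's own judgement.
    if state in (ChatState.BOT_THINKING, ChatState.BOT_ANSWERED):
        controls.append("chat_to_agent_button")

    # While queuing for a human, say who the user is waiting for instead.
    if state is ChatState.WAITING_FOR_AGENT:
        controls.append("queue_status")

    return controls


for state in ChatState:
    print(state.value, visible_controls(state))
```

The point of keeping this rule so simple is that the lifeboat is always visible whenever a bot is driving, whether or not the user ever needs it.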

UX Exercise

What psychological bias does this 'doing stuff' indicator affect?